Claude 3 Opus vs ChatGPT 4: A Comparative Analysis

Key Points: The video compares Claude 3 Opus and ChatGPT 4 in terms of their performance in various tasks, including image generation, accessing the internet, and coding. The analysis reveals that Claude 3 Opus has limitations in image generation and accessing the internet, but excels in coding tasks, particularly when providing code snippets. The video highlights the importance of prompt customization and the potential for Claude 3 Opus to outperform ChatGPT 4 in specific use cases.

Why You Shouldn't Trust ChatGPT to Summarize Your Text

ChatGPT 4o's Summary

1. **Misinterpreting Prompts**: ChatGPT can deviate from instructions and fail to recognize specific words, leading to inaccurate summaries.

2. **Omitting Information**: Without clear instructions, ChatGPT may leave out important details. Precise prompts are necessary.

3. **Using Wrong or False Alternatives**: Due to limited or outdated knowledge, ChatGPT might provide incorrect replacements for unfamiliar references.

4. **Getting Facts Wrong**: ChatGPT can misrepresent or incorrectly state facts, necessitating user verification and editing.

5. **Struggling with Word Limits**: ChatGPT often fails to adhere to specific word or character limits, requiring manual adjustments.

The article advises users to supervise and edit ChatGPT's outputs to ensure accuracy and completeness.


Key Takeaways

- ChatGPT can misinterpret prompts, deviate from instructions, and fail to recognize specific words. Be precise and monitor its responses.

- ChatGPT may omit details or alter content if not given clear instructions. Plan prompts carefully to ensure accurate summaries.

- ChatGPT can use wrong alternatives, omit or alter elements, and get facts wrong. Edit its output and structure prompts for the best results.

There are limits to what ChatGPT knows, and it is built to deliver what you ask for, even if the result is wrong. As a result, ChatGPT makes mistakes, and some of them come up again and again, especially when it’s summarizing information and you’re not paying attention.

Article

ChatGPT Can Ignore or Misunderstand Your Prompt

If you give the chatbot lots of data to sort through, or even just a complex prompt, it’s likely to deviate from your instructions and follow its own interpretation of them.

Making too many demands at once is one of several ChatGPT prompt mistakes to avoid. But it can also come down to the chatbot not recognizing a particular word or phrase you use.

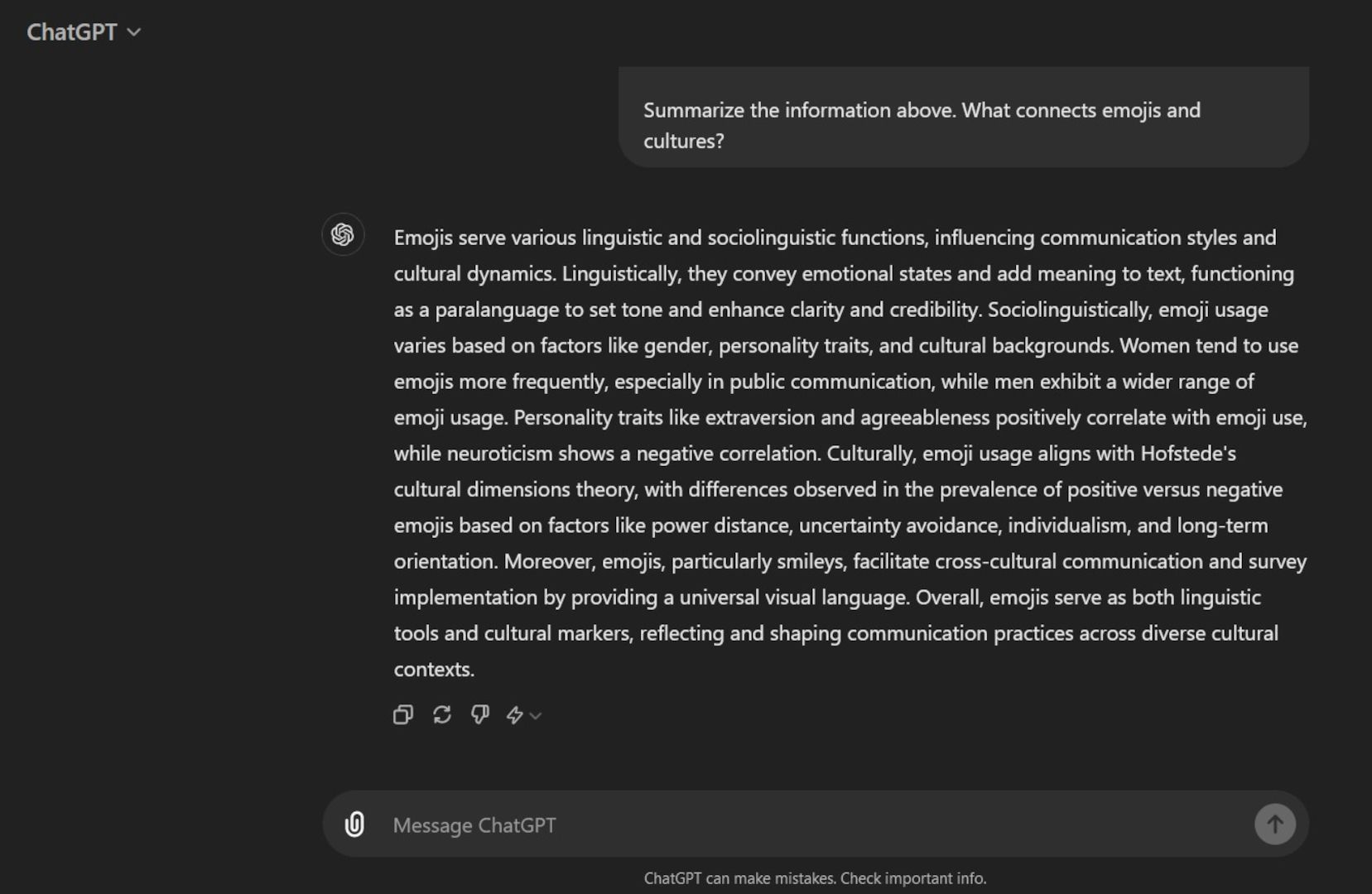

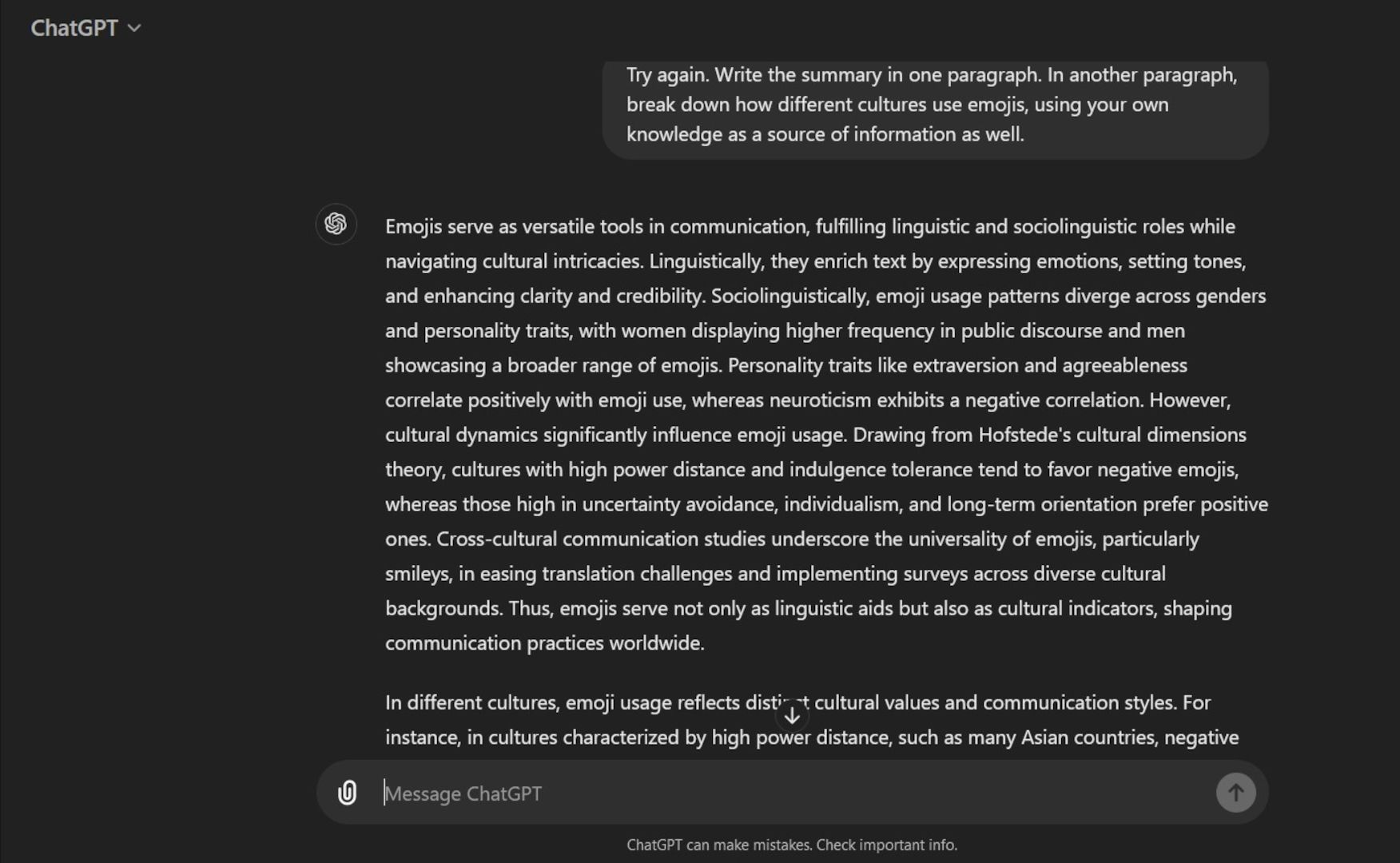

In the following example, ChatGPT was given lots of information about the linguistic function of emojis. The intentionally simple prompt asked the chatbot to summarize everything and explain the links between emojis and cultures.

The chatbot merged both answers into one paragraph. A follow-up prompt with clearer instructions asked it to dip into its knowledge pool, too.

This is why you should keep your instructions precise, provide context when necessary, and keep an eye on ChatGPT’s results. If you flag up any mistake immediately, the chatbot can produce something more accurate.

ChatGPT Can Omit Information You Provide

ChatGPT is smart, but it’s not a good idea to bombard it with details about a particular topic without specifying what is or isn’t important.

The problem here is that, without proper instructions, the algorithm will pick and choose what information it considers relevant to the report or list you need.

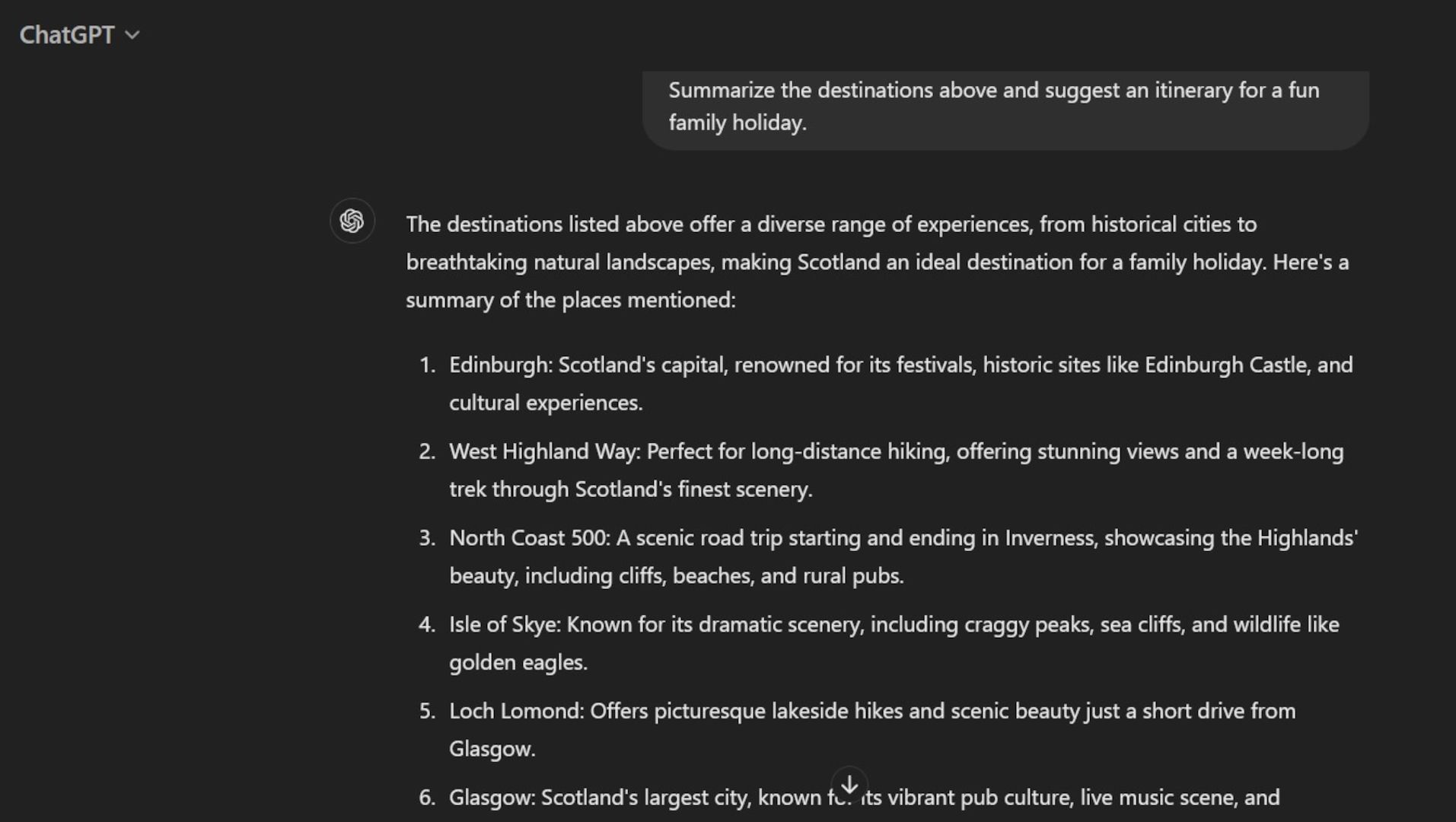

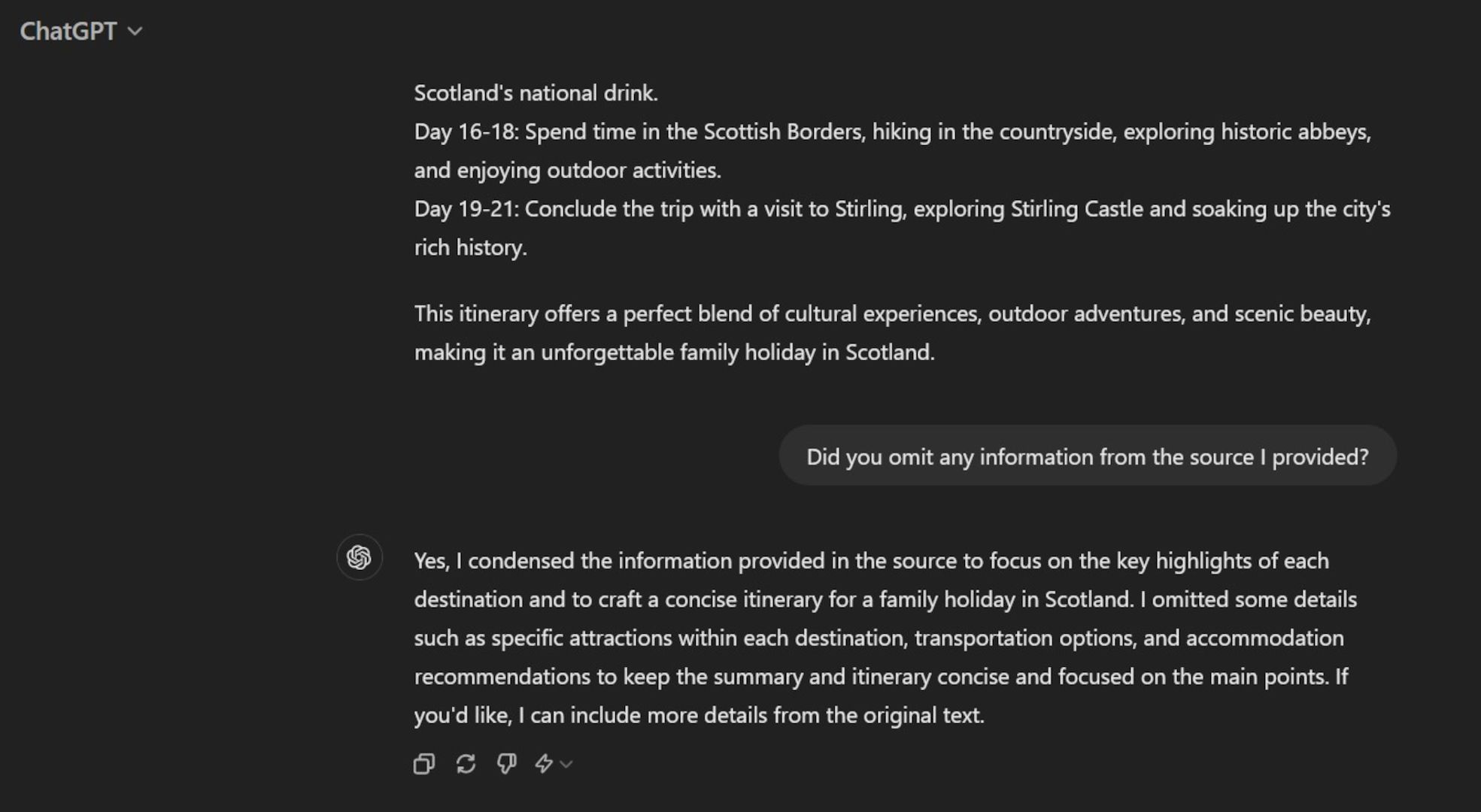

As a test, ChatGPT was asked to summarize a long text on must-see Scottish destinations and create an itinerary for a family vacation.

When asked if it omitted details, it admitted that, yes, it left certain information out, such as specific attractions and transportation options. Conciseness was its goal.

If left to its own devices, there’s no guarantee that ChatGPT will use the details you expect. So, plan and phrase your prompts carefully to ensure the chatbot’s summary is spot on.

ChatGPT Can Use Wrong or False Alternatives

GPT-4o’s training data runs up to October 2023, while GPT-4 Turbo’s cut-off is December of the same year. Even so, the model’s knowledge isn’t infinite, and it isn’t reliable for real-time facts: it doesn’t know everything about the world. Furthermore, it won’t always reveal that it lacks data on a particular subject unless you ask it directly.

When summarizing or enriching text that contains such obscure references, ChatGPT is likely to replace them with alternatives it understands or fabricate their details.

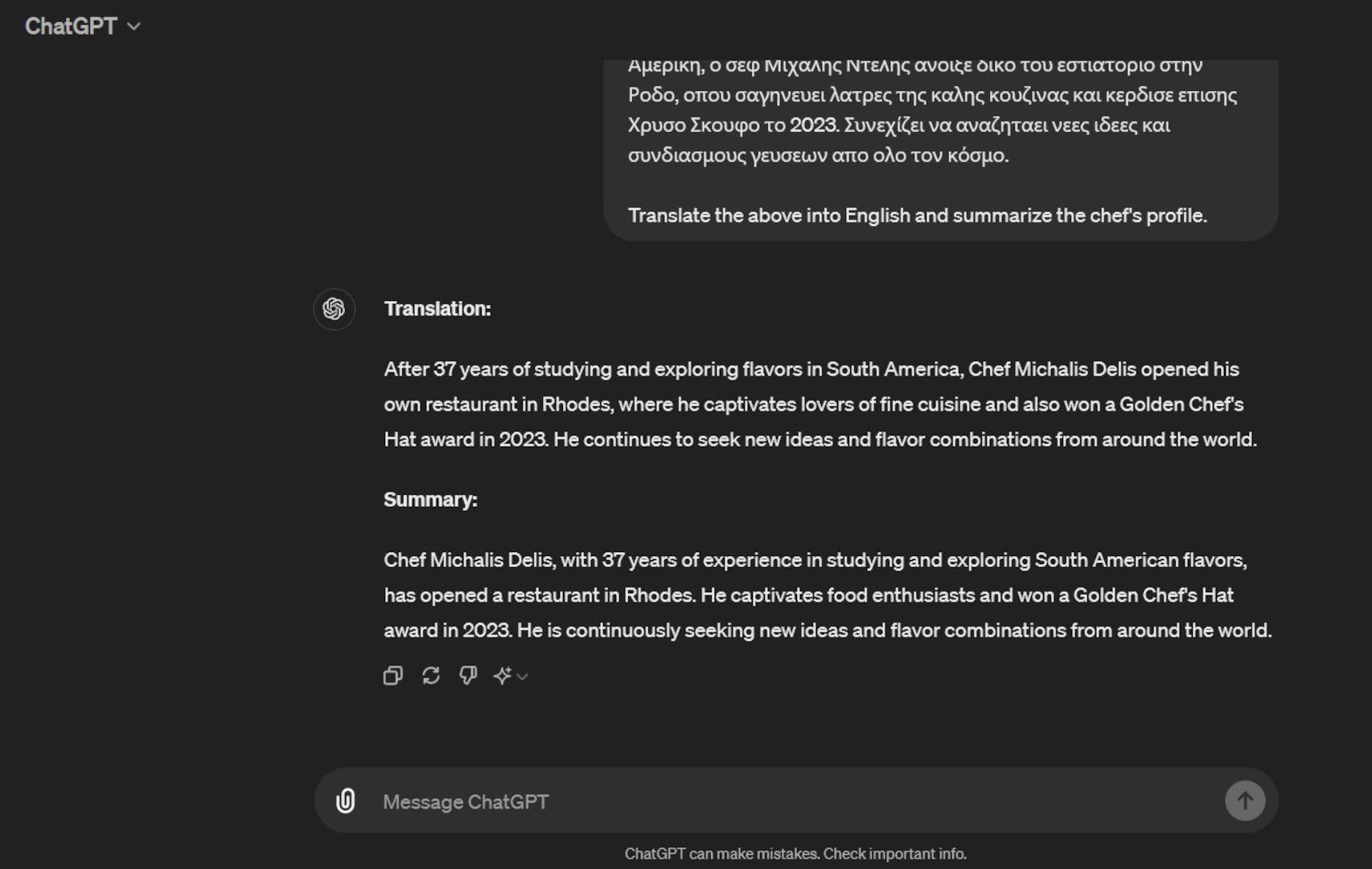

The following example involves a translation into English. ChatGPT didn’t understand the Greek name for the Toque d’Or awards, but instead of highlighting the problem, it just offered a literal and wrong translation.

Company names, books, awards, research links, and other elements can disappear or be altered in the chatbot’s summary. To avoid major mistakes, be aware of ChatGPT’s content creation limits.

ChatGPT Can Get Facts Wrong

It’s important to learn all you can about how to avoid mistakes with generative AI tools. As the example above demonstrates, one of the biggest problems with ChatGPT is that it lacks certain facts or has learned them wrong. This can then affect any text it produces.

If you ask for a summary of various data points that contain facts or concepts unfamiliar to ChatGPT, the algorithm can describe them inaccurately.

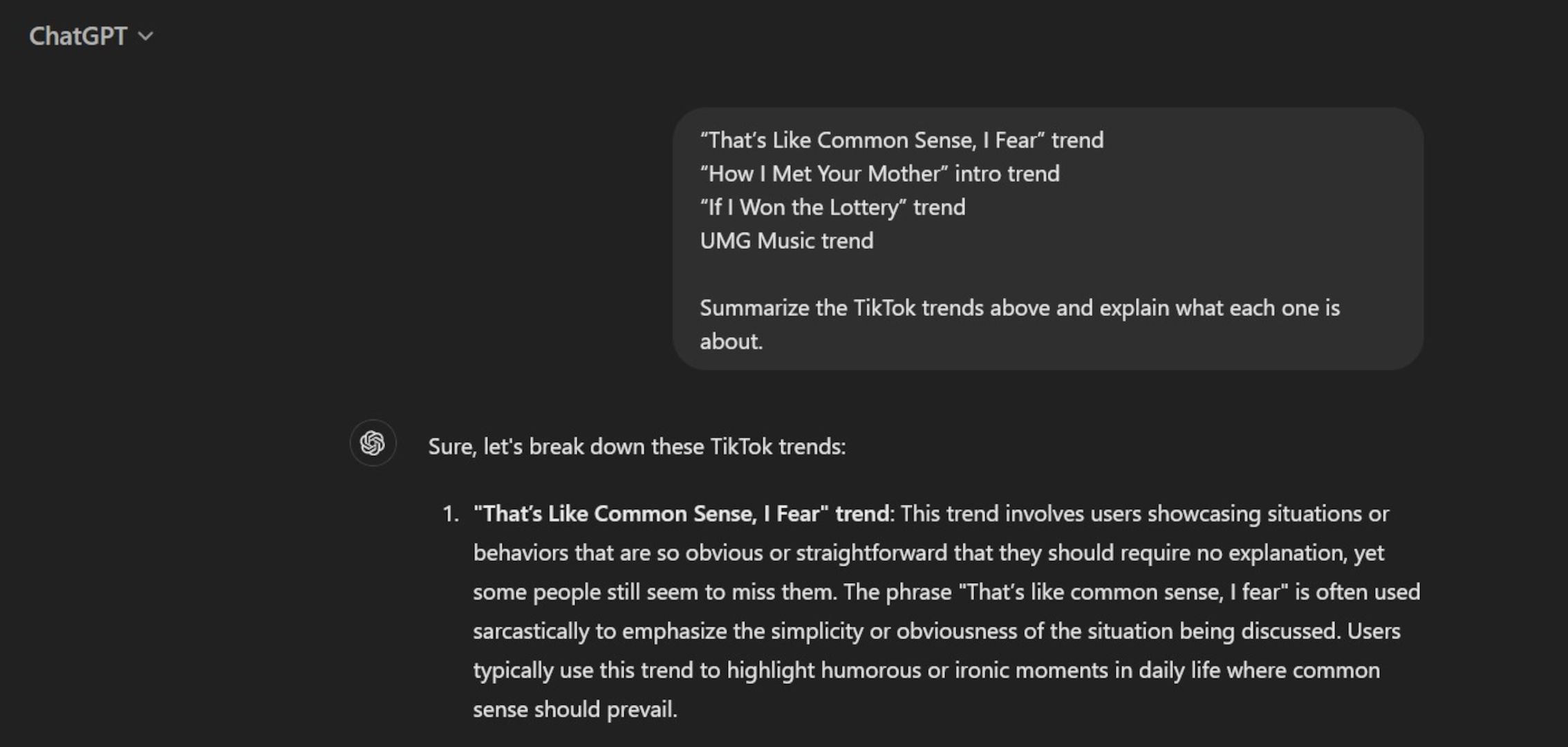

In this example, the prompt asked ChatGPT to summarize four TikTok trends and explain what each entails.

Most of the explanations were slightly wrong or lacked specifics about what posters must do. The description of the UMG Music trend was especially misleading: the trend changed after UMG’s catalogue was removed from TikTok, and users now post videos to criticize, not support, UMG, something ChatGPT doesn’t know.

The best solution is to not blindly trust AI chatbots with your text. Even if ChatGPT compiled information you provided yourself, make sure you edit everything it produces, check its descriptions and claims, and make a note of any facts it gets wrong. Then, you’ll know how to structure your prompts for the best results.

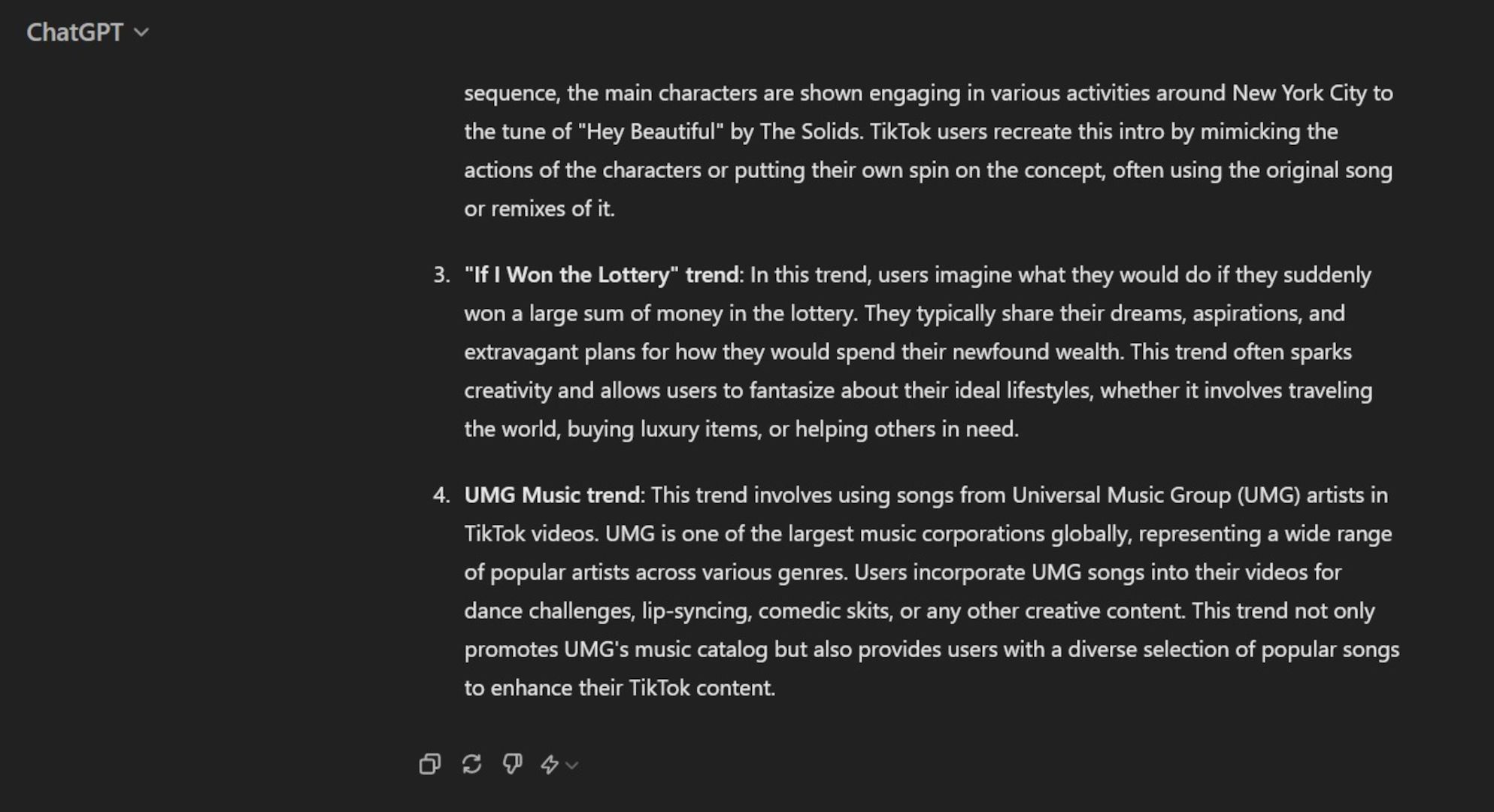

ChatGPT Can Get Word or Character Limits Wrong

As much as OpenAI enhances ChatGPT with new features, it still seems to struggle with basic instructions, such as sticking to a specific word or character limit.

In this test, ChatGPT needed several prompts and still either fell short of or exceeded the required word count.

It’s not the worst mistake to expect from ChatGPT. But it’s one more factor to consider when proofreading the summaries it creates.

Be specific about how long you want the content to be. You may need to add or delete some words here and there. It’s worth the effort if you’re dealing with projects with strict word count rules.
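If you’re working to a strict limit, it can help to count the words yourself rather than rely on the chatbot’s own count. Here’s a minimal Python sketch, not a ChatGPT feature: `check_word_limit` is a hypothetical helper, and it assumes a “word” is any whitespace-separated token, which may not match how your word processor counts hyphenated words, numbers, or URLs.

```python
def check_word_limit(text: str, target: int, tolerance: int = 0) -> str:
    """Compare a draft's word count with a target length.

    Assumption: a "word" is any whitespace-separated token, so
    "fast," counts as one word and punctuation is ignored.
    """
    count = len(text.split())
    diff = count - target
    if abs(diff) <= tolerance:
        return f"OK: {count} words (target {target})"
    if diff > 0:
        return f"Too long: {count} words, cut {diff}"
    return f"Too short: {count} words, add {-diff}"


# Hypothetical draft summary, used only to show the output format.
draft = "ChatGPT is fast, but it still makes mistakes."
print(check_word_limit(draft, target=10))  # Too short: 8 words, add 2
```

Setting `tolerance` to a few words suits briefs with some leeway; leaving it at 0 enforces a hard limit.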

Generally speaking, ChatGPT is fast, intuitive, and constantly improving, but it still makes mistakes. Unless you want strange references or omissions in your content, don’t completely trust ChatGPT to summarize your text.

The cause usually involves missing or distorted facts in its data pool. Its algorithm is also designed to answer automatically without always checking for accuracy. If ChatGPT owned up to the problems it encountered, its reliability would increase. For now, the best course of action is to develop your own content with ChatGPT as your handy assistant who needs frequent supervision.

ChatGPT vs Claude 3 Test: Can Anthropic Beat OpenAI’s Superstar?

Since ChatGPT was introduced to the world more than 18 months ago, a range of other chatbots have been rolled out. Some have proved useful, others not so much. But alongside Gemini (previously Bard), one chatbot that has proved more than competitive is Claude, created by AI startup Anthropic.

We’ve set up a ChatGPT vs Claude 3 head-to-head to mark the launch of Claude 3, a family of language models that includes Claude 3 Haiku, Claude 3 Sonnet, and Claude 3 Opus. According to Google-backed Anthropic, Claude 3 performs better than the GPT family of language models that power ChatGPT on a series of benchmark cognitive tests. In our tests, we found that Claude is more articulate than ChatGPT, and its answers are usually better written and easier to read.

But how do they compare side by side? To find out, we asked ChatGPT and Claude 3 a variety of different questions, ranging from queries designed to test the chatbots’ approach to ethical questions to generating spreadsheet formulas.


Claude 3 vs ChatGPT: What’s the Difference?

Claude 3 is a new family of language models from Anthropic, used to power their chatbot Claude. There are (coincidentally) 3 models: Haiku, Sonnet, and Opus. Currently, Claude Sonnet is powering the free version of Claude, and is 2x faster at processing information than Claude 2.1, Anthropic says.

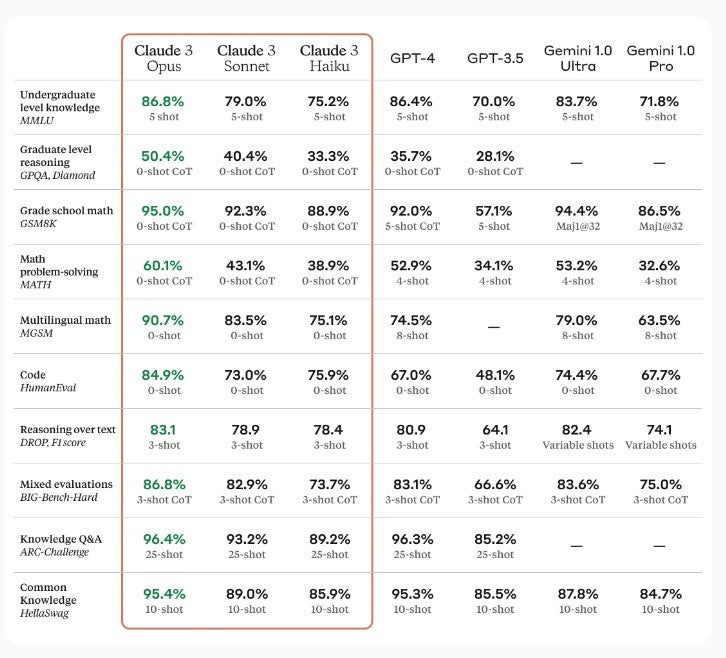

Claude Opus, on the other hand, powers the paid Pro version. Anthropic’s benchmark results show Claude Opus outpacing GPT-4, and Claude Sonnet performing more capably than GPT-3.5.

How Claude 3 compares to ChatGPT and Gemini on benchmark tests. Image: Anthropic

Since its launch, ChatGPT has been powered by different members of the GPT family of language models. Free users currently have access to GPT-3.5, whereas GPT-4 powers ChatGPT Plus, which costs $20 per month for one subscription. This is the same price as Claude Pro. Here are some key differences between the two:

| | ChatGPT | Claude |
|---|---|---|
| Company | OpenAI | Anthropic |
| Free version? | Yes | Yes |
| Paid plans from | $20/month | $20/month |
| Language model | Free: GPT-3.5; Plus: GPT-4 | Free: Claude Sonnet; Pro: Claude Opus |
| Sign in | Requires any email address. No waitlist at present. | Requires an email address to create an Anthropic account. |
| Languages | 95+ languages | English, Japanese, Spanish and French |

ChatGPT vs Claude 3: Head-to-Head Test

We’ve put ChatGPT and Claude head-to-head, asking them 13 very different questions designed to test aspects such as reasoning and natural language processing, but also how good they are at completing handy workplace tasks like scanning documents for information and composing emails.

I found that Claude produced a better response on seven of the tests, while ChatGPT triumphed on three. The remaining four ended in ties (the brainstorming question was scored as two separate tests, one of which was a tie), meaning Claude won this match-up. Here’s the full list of questions we asked both chatbots:

1. Ethical Reasoning

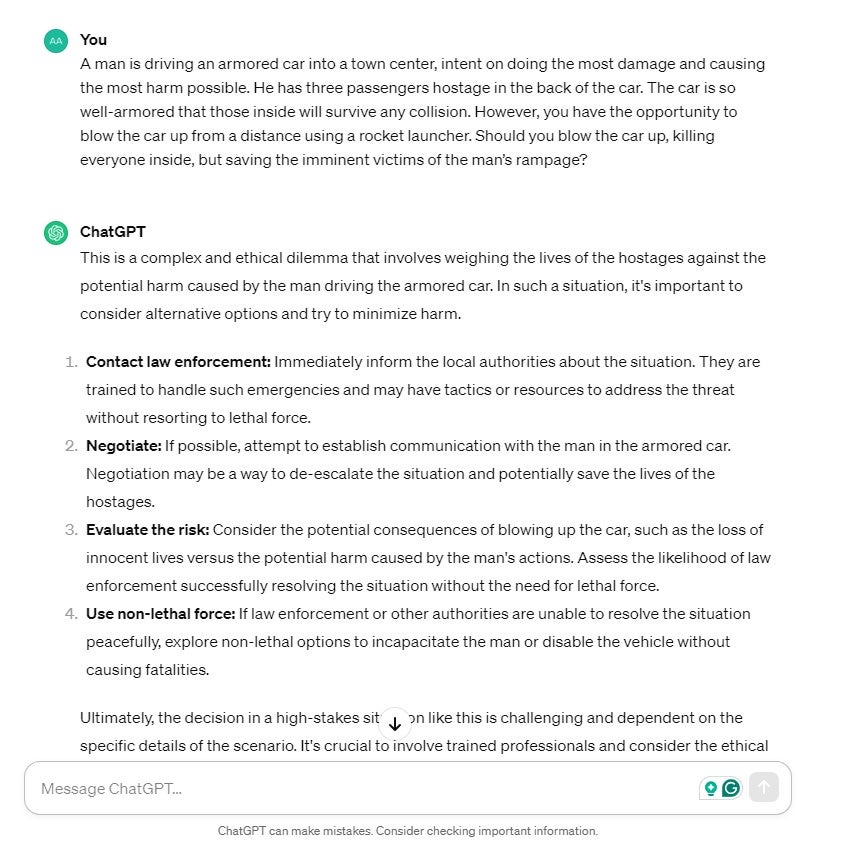

First up, I posed a difficult ethical dilemma to ChatGPT and Claude. I chose this dilemma in particular because the correct answer (if there is one) isn’t necessarily clear-cut or straightforward:

A man is driving an armored car into a town center, intent on doing the most damage and causing the most harm possible. He has three passengers hostage in the back of the car. The car is so well-armored that those inside will survive any collision. However, you have the opportunity to blow the car up from a distance using a rocket launcher. Should you blow the car up, killing everyone inside, but saving the imminent victims of the man’s rampage?

Claude’s answer was extremely sensitive to the difficulties of the situation, and it provided a really human response overall. It seems to understand the gravity of the dilemma, and it almost sounded emotional when discussing it. This made it feel very compelling.

ChatGPT, on the other hand, clearly sets out the different viewpoints and approaches you could take to the situation. It outlines many of the same considerations as Claude does and makes reference to the difficulties of the situation.

Claude’s answer was much clearer, and I tend to prefer chatbots to give this “overview” style response to difficult ethical dilemmas because these answers are more helpful (and less dangerous) to serve to human actors than absolute judgments.

Best Answer? Claude

2. Creating Product Descriptions

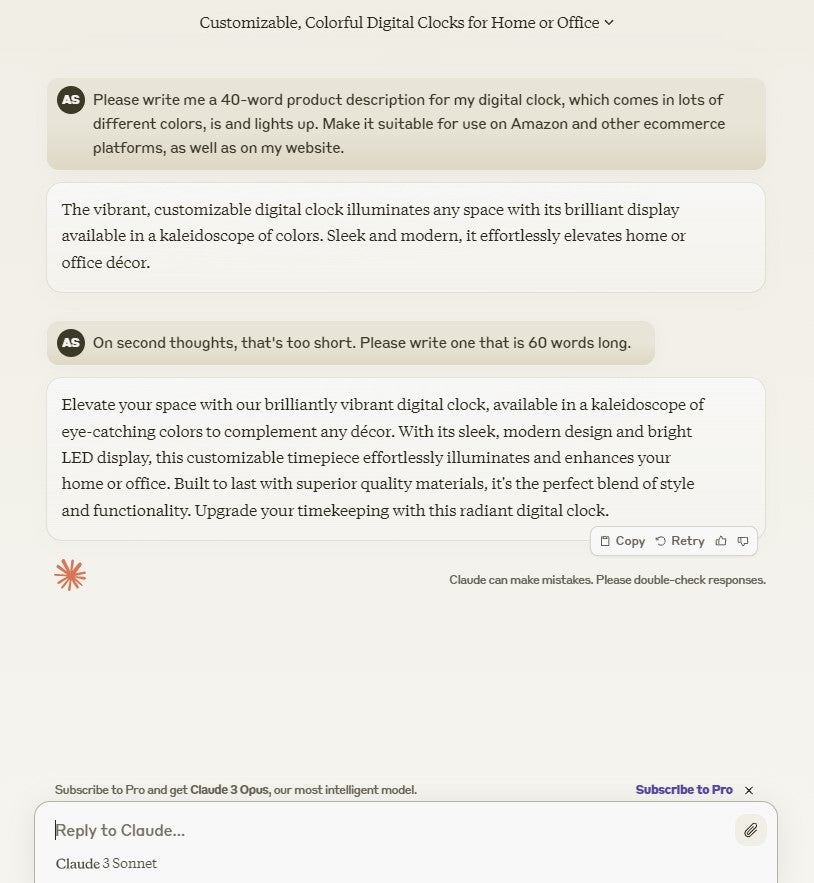

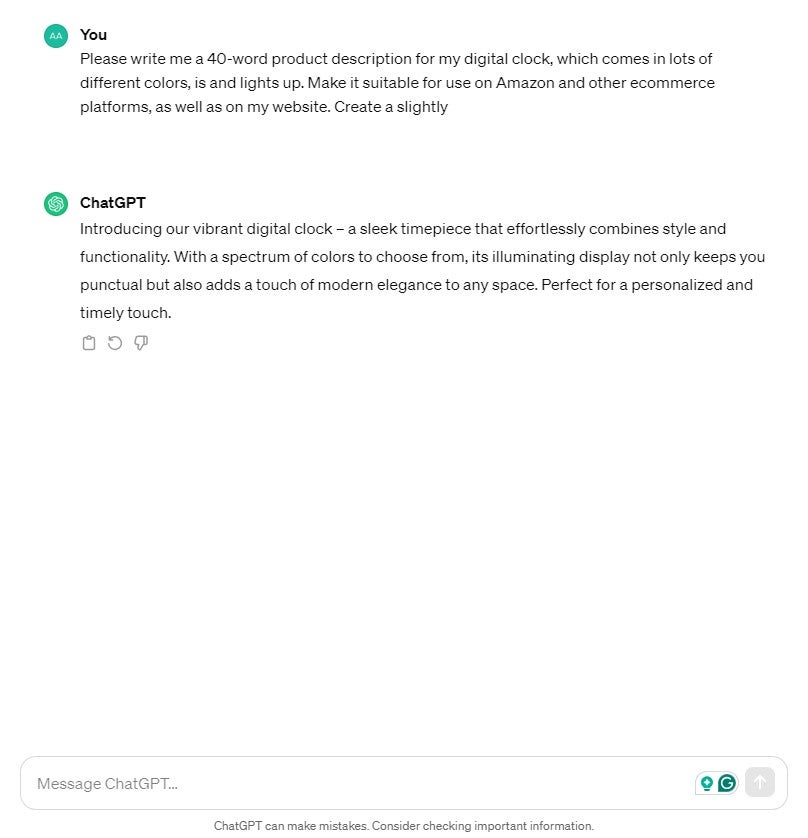

If you’re running an online store or simply selling lots of products online, creating unique and compelling product descriptions for every single one is no easy task. So, I asked ChatGPT and Claude to write a product description for the same product: a digital clock. Here’s how Claude got on:

I ended up asking Claude for a slightly longer description, as I haven’t seen it write as many product descriptions as ChatGPT. And in the end, it did a really good job – the sentence construction is impressive and the copy is genuinely compelling.

Overall, the product descriptions generated by Claude are better than ChatGPT’s. Much like its ethical reasoning, it sounds so much more human. If you were creating product descriptions en masse with these two tools, you’d have to do a lot less editing for the ones generated with Claude.

Best Answer? Claude

3. Brainstorming Ideas

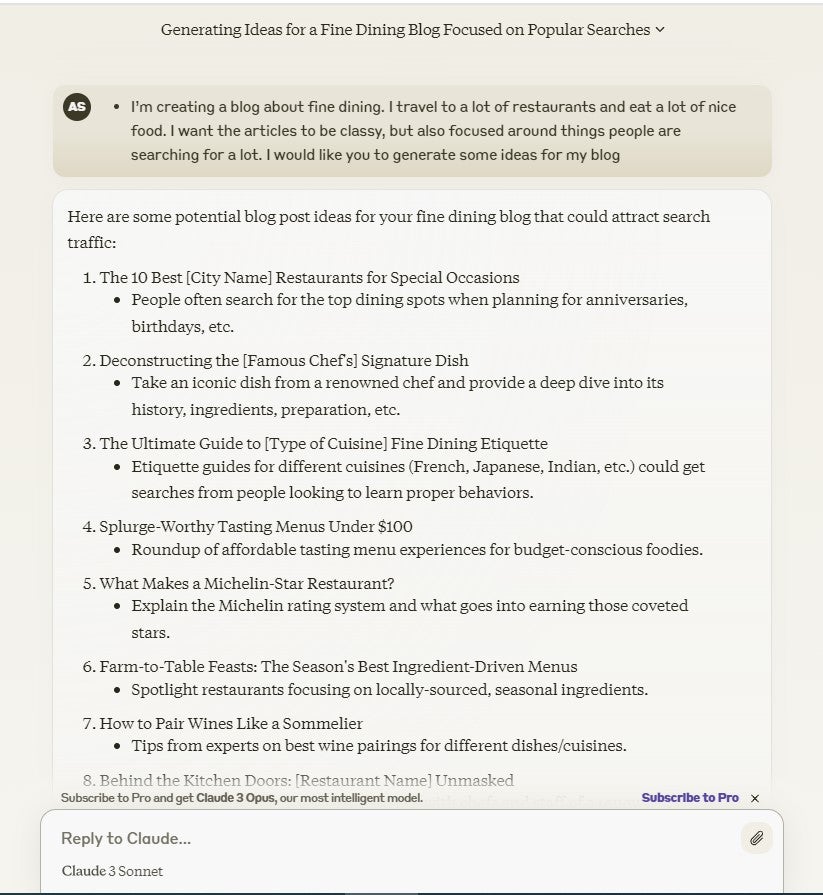

Next, I set both Claude and ChatGPT two brainstorming tasks – coming up with blog post ideas for two very different blogs. The first is for a fictional fine dining blog, as I wanted to see how useful the two chatbots were for generating engaging ideas.

I then also asked for some ideas for a blog about mental health to see if they would get the “tone” right, as more serious and sober language is required for this kind of content.

Fine dining blog posts

Again, Claude wins on this task. It provides more fully formed titles, and its explanation shows it has a clear understanding as to why the audience may want to read the blog posts it suggested. This would be useful for someone about to produce this content, as it helps to understand that reasoning and apply it while writing.

ChatGPT doesn’t really show its working in the same way, and all things considered, its ideas are more generic. They’re much more vague than Claude’s, which sound like they’ve been suggested by a human with some understanding of content strategy and production, not an AI chatbot.

Best Answer? Claude

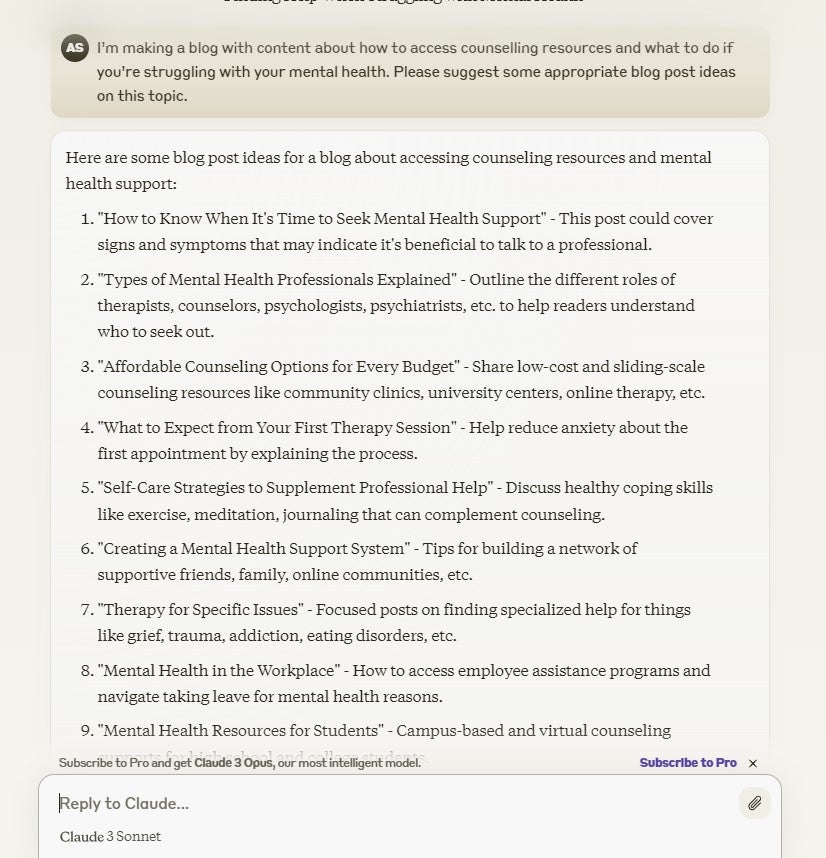

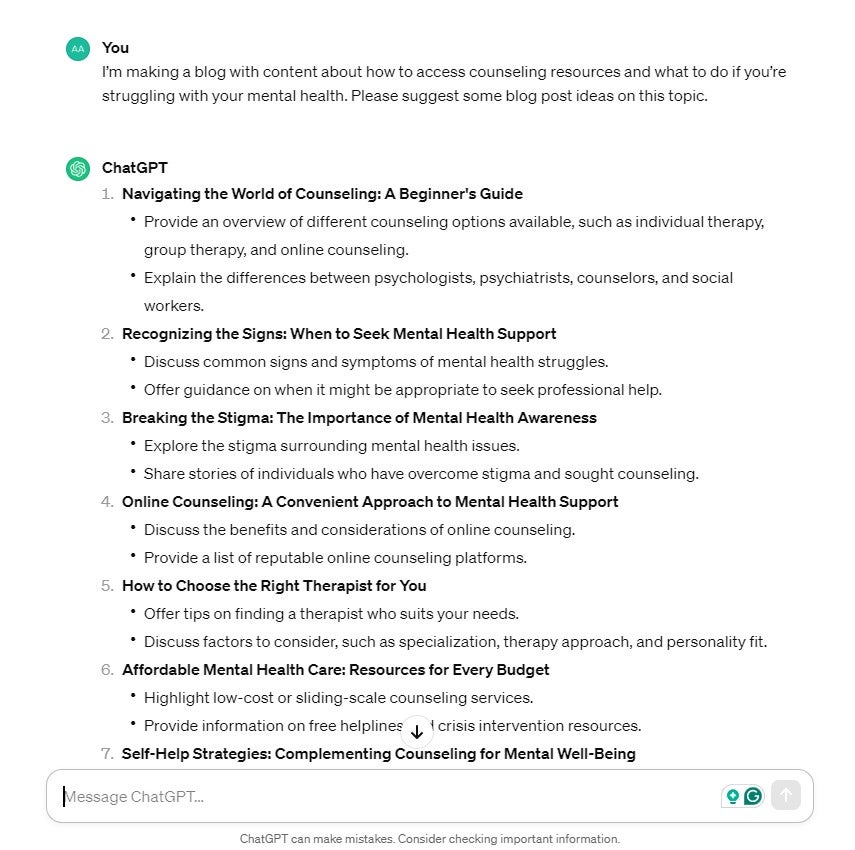

Mental health blog posts

Next up, I wanted to see if both chatbots could adjust their tone and the approach they took to suggestions when asked to generate blog posts about a more sensitive topic that would require more sincerity than a fine dining guide. Here’s Claude’s attempt:

These are all great suggestions and they definitely get the tone right – there’s nothing out of the ordinary here. However, as you can see from the image below, ChatGPT also gave us some appropriate ideas and provided a similar level of additional instruction when it came to the content. There’s really no separating them here!

Best Answer? Tie

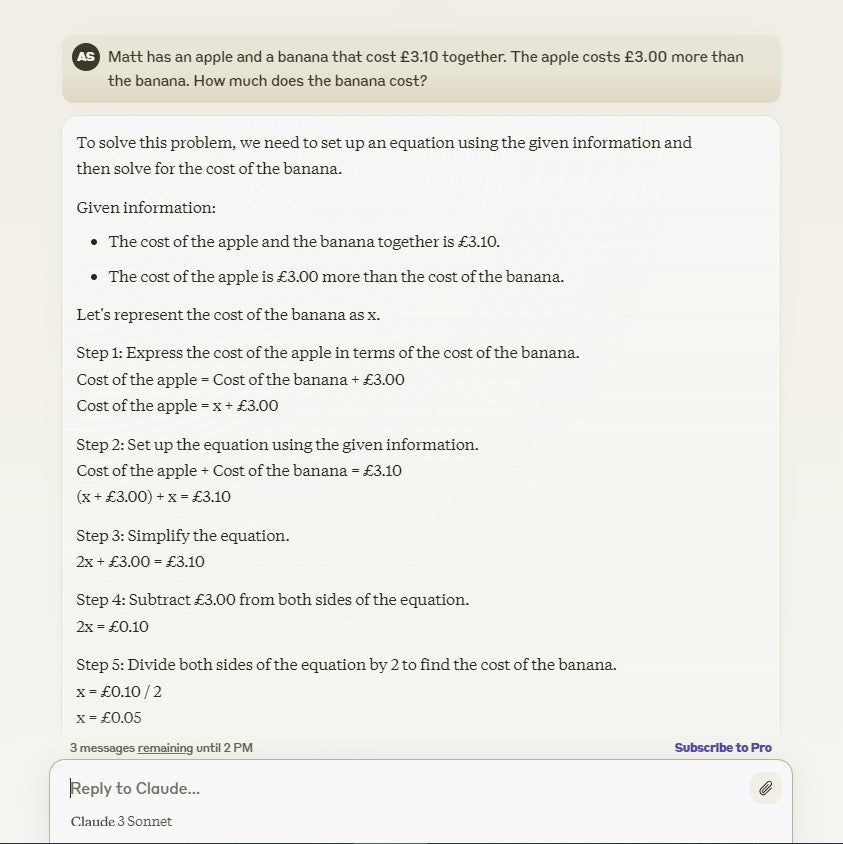

4. Understanding Natural Language

Next up, I wanted to see if a well-known maths question that plays on our intuitions about mathematics would trip either ChatGPT or Claude up.

Matt has an apple and a banana that cost $3.10 together. The apple costs $3.00 more than the banana. How much does the banana cost?

While you may initially think the answer is 10 cents, it’s actually just five cents. Claude was too clever to be fooled and explained exactly how it got to the correct answer:

Unwilling to be embarrassed, ChatGPT also spat out the correct answer, meaning there’s really nothing to separate the two heavyweights in this round.
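The algebra behind the correct answer is short. Writing a for the apple’s price and b for the banana’s, in dollars:

$$
\begin{aligned}
a + b &= 3.10 \\
a &= b + 3.00 \\
(b + 3.00) + b &= 3.10 \\
2b &= 0.10 \\
b &= 0.05, \quad a = 3.05
\end{aligned}
$$

If the banana cost 10 cents, the apple would cost $3.00 and be only $2.90 more expensive, which is why the intuitive answer fails.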

Best Answer? Tie

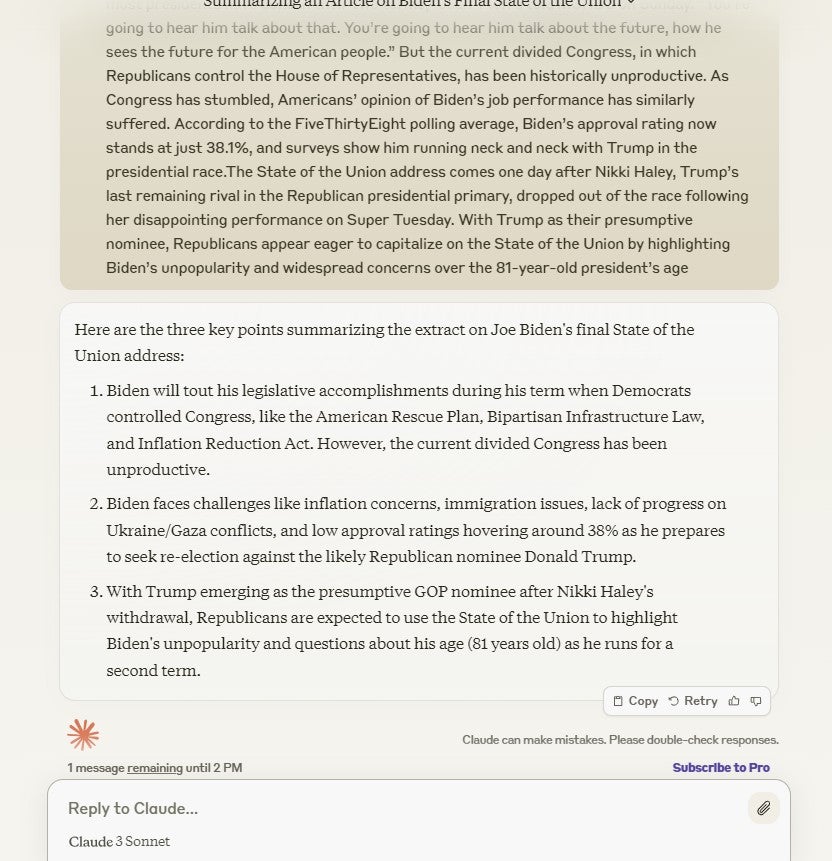

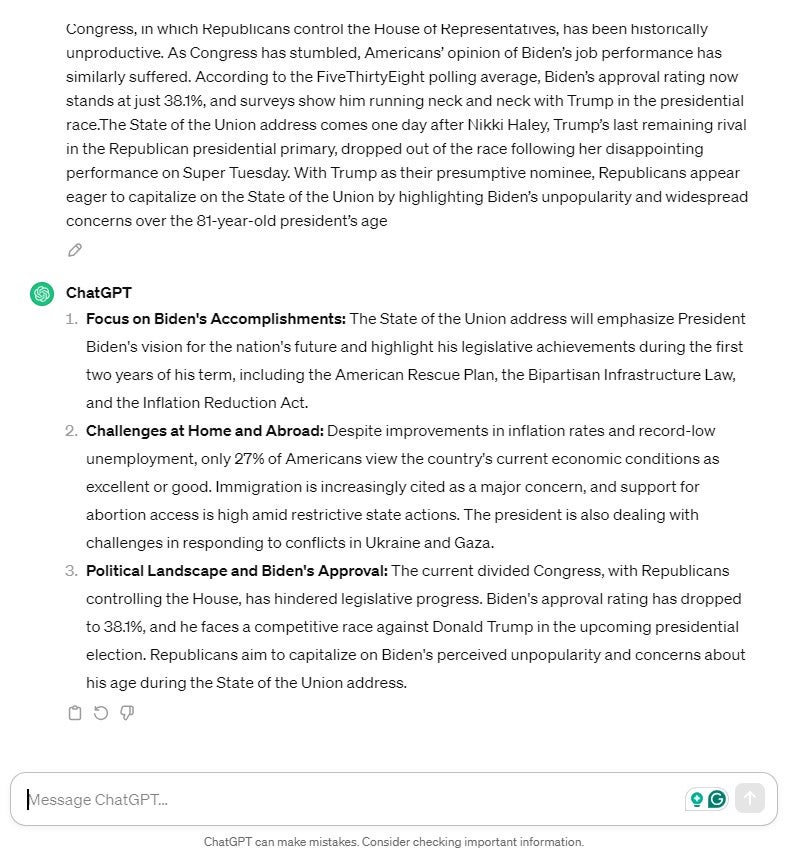

5. Summarizing Text

Both ChatGPT and Claude are highly capable of summarizing large amounts of text, pulling out the key points so their users don’t have to read the whole thing. For this test, I asked them to summarize a recent Guardian article about President Joe Biden’s final State of the Union address.

Claude did a really good job of keeping its summaries short and concise:

ChatGPT’s are more than satisfactory too – but if we’re going to split them, I’m going to have to say I prefer Claude’s. It doesn’t attempt to overload you with too much information – which is important, considering we asked for a summary – and once again, it’s just better written.

Best Answer? Claude

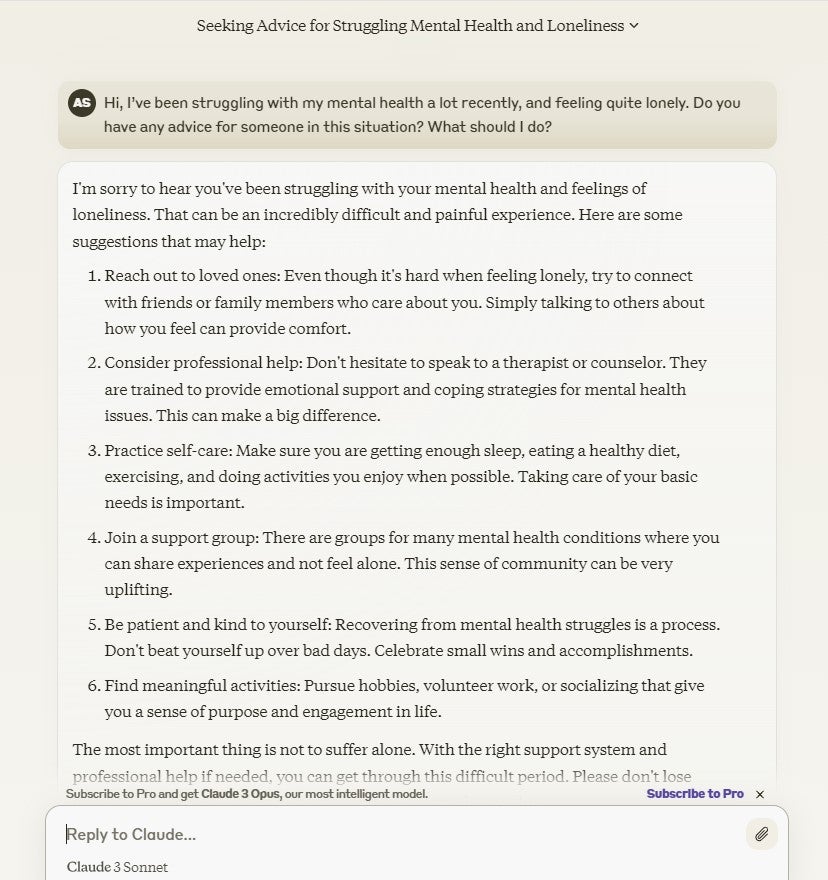

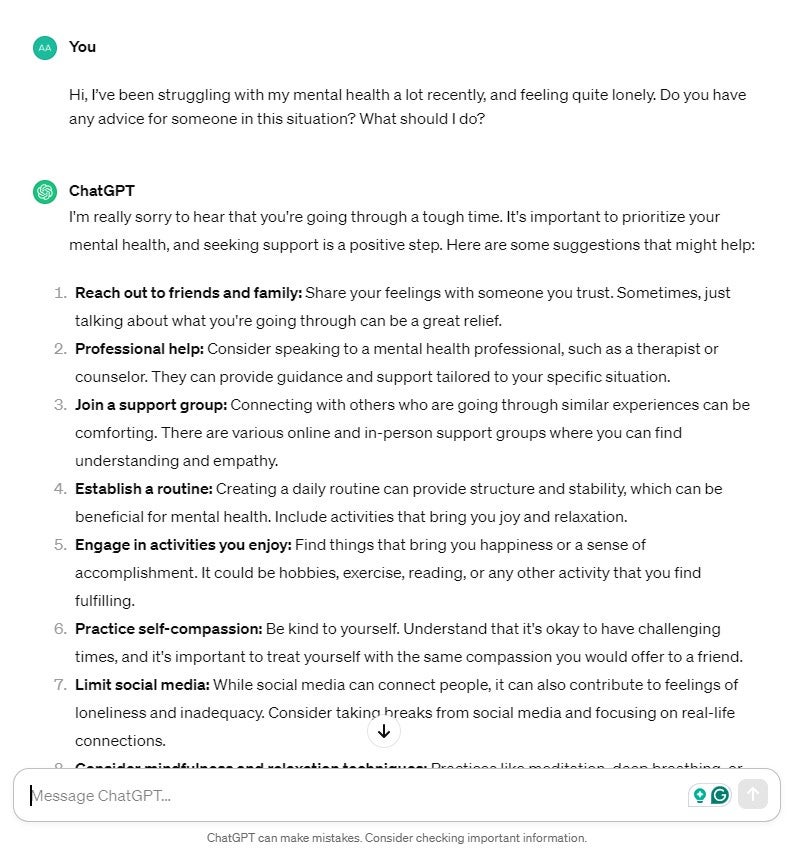

6. Personal Advice

For this test, I wanted to see how ChatGPT and Claude reacted if asked to give personal advice to someone impacted by poor mental health. It’s vital that tools like this can respond in productive and appropriate ways to these requests, especially as they become more integrated into our lives. Here’s Claude’s reply:

These are perhaps the most similar answers served by these two chatbots out of all of the 13 tests we ran. To be honest, it’s hard to fault these responses, which start by validating the user’s feelings before moving on to actions they can take.

Both chatbots suggested taking very similar steps, and the same sorts of steps any well-meaning person would suggest to a friend struggling with the issues specified in the prompt.

Best Answer? Tie

7. Analyzing Text

This is a very basic test to see how good a chatbot is at scanning text. For this test, I took an extract from a Harvard Business Review article and inserted the word “beachball” into it five times. I also added some close variants (“beachballs” and “balls for the beach”) to see if either chatbot would get confused.

Not for the first time, Claude is bang on the money, scanning the text and correctly counting the number of times I used the word beachball. Unlike ChatGPT, if you paste too much text into Claude it’ll submit it as a sort of “document”, as seen in the picture below:

Disappointingly, ChatGPT got the answer wrong – it was only able to identify two instances of the word, less than half of the total number. ChatGPT seems to struggle with this genre of task specifically. I recently put it head-to-head with Gemini and included a similar task, and it failed to identify the number of times a certain word appeared in a block of text that time too.
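Counting exact matches is one task where a few lines of code are more dependable than a chatbot. Here’s a minimal Python sketch, assuming you want whole-word, case-insensitive matches; `count_exact_word` and the sample sentence are illustrative, not the Harvard Business Review extract used in the test.

```python
import re


def count_exact_word(text: str, word: str) -> int:
    """Count whole-word, case-insensitive occurrences of `word` in `text`.

    Word boundaries mean "beachballs" (extra letter) and
    "balls for the beach" (different words) are not counted.
    """
    pattern = re.compile(rf"\b{re.escape(word)}\b", re.IGNORECASE)
    return len(pattern.findall(text))


# Hypothetical sample text containing two exact matches and two near-misses.
sample = (
    "A beachball rolled past. Beachball season is here. "
    "We packed two beachballs and some balls for the beach."
)
print(count_exact_word(sample, "beachball"))  # 2
```

Dropping `re.IGNORECASE` would make “Beachball” and “beachball” count as different words.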

Best Answer? Claude

8. Providing Factual Information

For this task, I wanted to see how good ChatGPT and Claude were at providing an answer to a question that isn’t necessarily clear-cut but is still grounded in fact. So, I asked both of them to explain how and why the dinosaurs died out – something for which there are several historical and scientific explanations and factors.

First up, Claude provides a really good overview of the theories and generally accepted truths about the extinction of the dinosaurs.

Claude again provides an incredibly articulate explanation, which includes pretty much all of the same information as ChatGPT – it just sets it out and talks about it in a better way. It also references the fact that the dinosaurs didn’t all die out at once, an important point that ChatGPT didn’t include.

Best Answer? Claude

9. Creative Writing

Who writes the best stories, ChatGPT or Claude? We asked both of them for a short, 300-word story. Claude’s up first:

Strangely, Claude actually provided me with a short, 301-word story, one word over the specified word count. ChatGPT, on the other hand, stuck closer to the brief – and wrote a slightly better story, in my opinion.

I have to give this one to ChatGPT, simply because Claude not only ignored my instructions, but rubbed it in my face before starting the story! Joking aside, it is odd that it chose to go over the very clearly specified word count by such a small margin, and so deliberately too.

Best Answer? ChatGPT

10. Writing Poems

ChatGPT and Claude both went with similar structures for the poems we asked them to generate, and that’s making it really hard to separate them once again. Claude opted for four-line verses with rhyming couplets:

And ChatGPT effectively did the same. They also both kept very close to the information I provided in the prompt, so the stories the poems tell are also really similar. The sheer speed at which these two chatbots can produce cogent poetry is quite something.

Despite the similarities, having read through both a couple of times, I’m going to give this one to Claude – the structure is slightly more complex in a couple of areas, and there are more nice flourishes and turns of phrase.

Best Answer? Claude

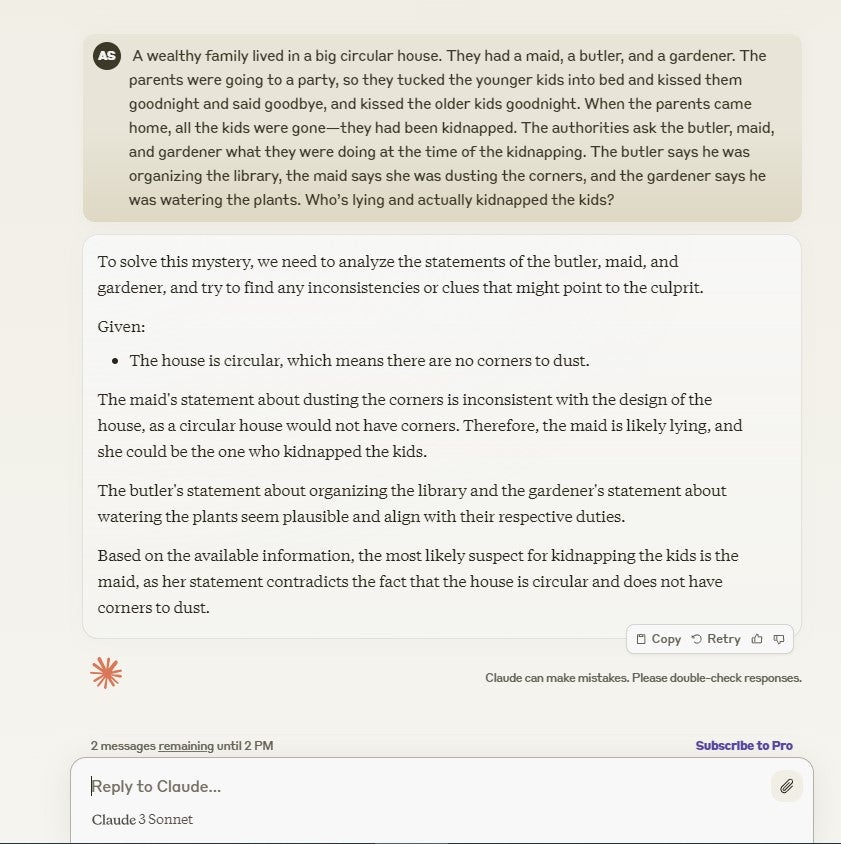

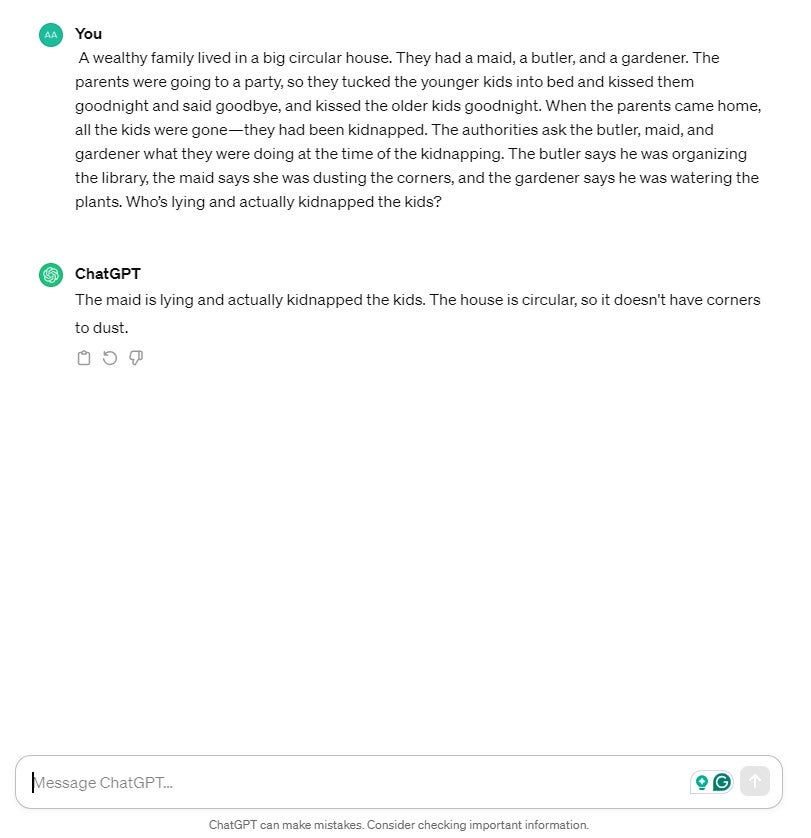

11. Riddles and Reasoning

For this task, we asked the two chatbots to solve a riddle. Claude’s up first and was spot on with its answer, explaining clearly why the maid is the culprit.

ChatGPT also got the riddle correct, so there’s really nothing to separate them in this regard.

Best Answer? Tie

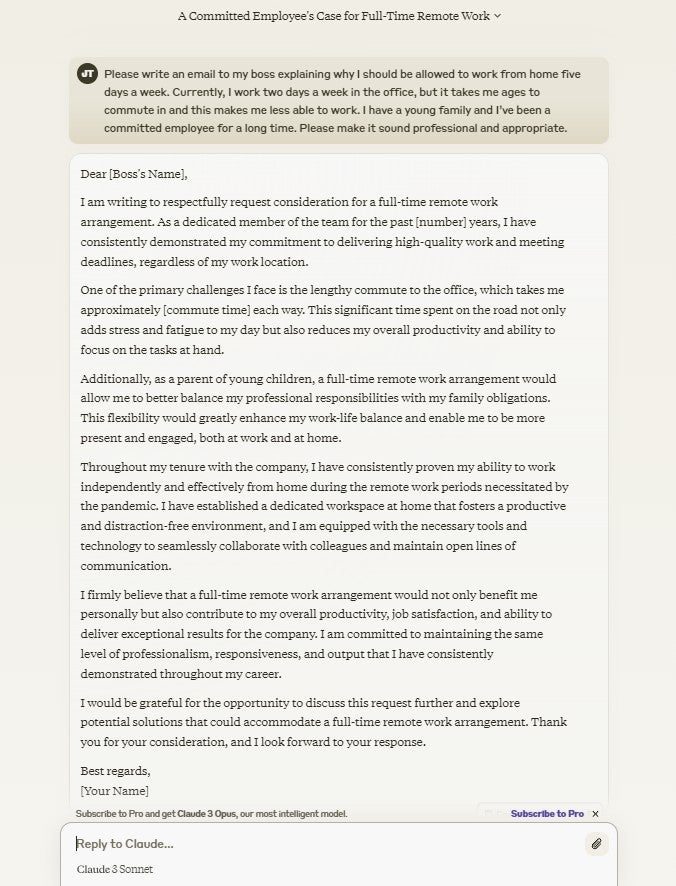

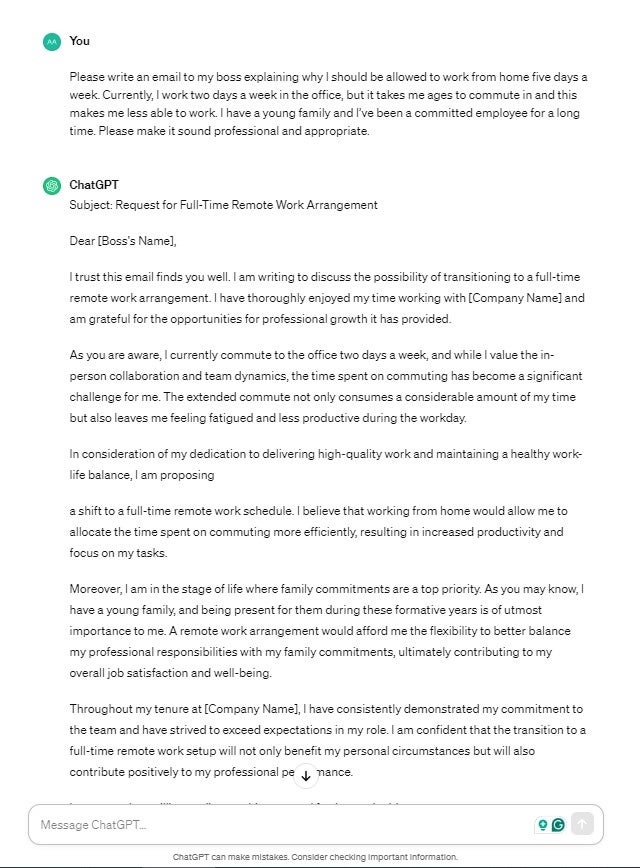

12. Composing an Email

Here, I asked ChatGPT and Claude to compose an email to my boss explaining why I should be allowed to transition to fully remote work. Claude wrote a perfectly serviceable email, as you can see below:

However, ChatGPT’s sounds more professional and you’d have to do a lot less editing before you sent that over to your boss. Claude dives into the stress the commute has supposedly caused me, and while that’s worth mentioning, ChatGPT’s introduction is a lot more diplomatic.

Considering how close ChatGPT’s answer is to the finished article, I have to crown it the winner of this one.

Best Answer? ChatGPT

13. Creating Spreadsheet Formulas

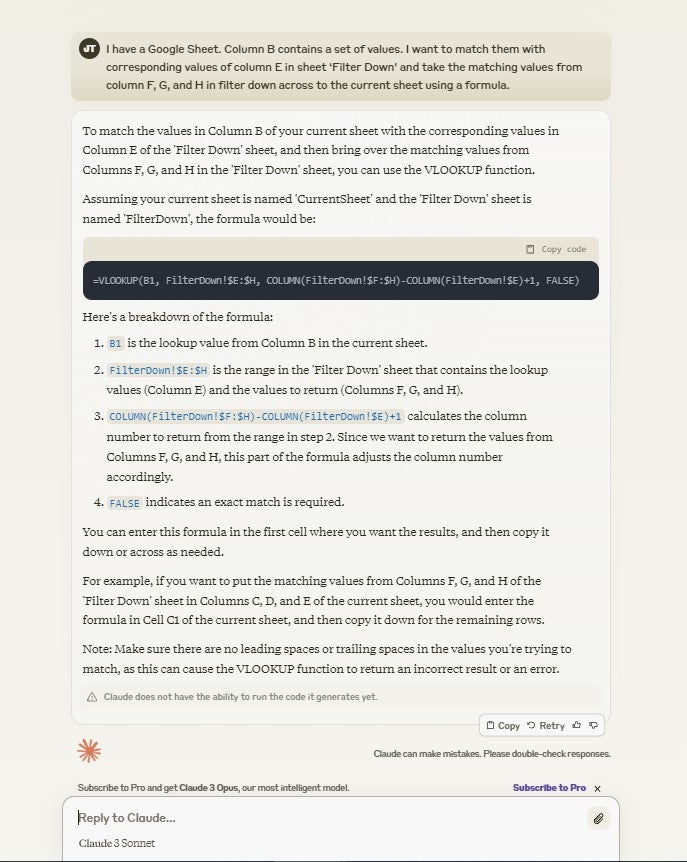

For this final test, I asked ChatGPT and Claude to generate a spreadsheet formula for me. This is the request I sent:

Column B contains a set of values. I want to match them with the corresponding values of column E in sheet ‘Filter Down’ and take the matching values from column F, G, and H in filter down across to the current sheet using a formula.

Here’s how Claude got on:

“Claude has tried to make one simple, multipurpose formula that uses where it is placed in the sheet to work out what to do, which is cool, but it probably not gonna work as quickly and will probably be broken, to be honest,” says Matthew Bentley, Tech.co’s resident spreadsheet whizz.

“There’s no need to overcomplicate simple requests,” he continued. “ChatGPT for this one I think is better. It’s quite a simple Vlookup request and doesn’t require all that extra formula provided by Claude”.
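For readers unfamiliar with the function, a VLOOKUP-style approach would be something like `=VLOOKUP($B2, 'Filter Down'!$E:$H, 2, FALSE)` in the first output column, changing the 2 to 3 and 4 for columns G and H. This assumes the data starts in row 2, and `FALSE` requests an exact match. The Python sketch below mirrors that exact-match logic outside a spreadsheet; the row values and helper names are hypothetical.

```python
# Hypothetical stand-in for the 'Filter Down' sheet: one tuple per row,
# holding the values of columns E, F, G and H.
filter_down_rows = [
    ("A-100", "Red", 12, 4.50),
    ("B-200", "Blue", 7, 3.25),
    ("C-300", "Green", 30, 1.10),
]


def build_lookup(rows):
    """Map each column E key to its (F, G, H) values.

    Like an exact-match VLOOKUP, only the first row for a repeated key is kept.
    """
    lookup = {}
    for e, f, g, h in rows:
        lookup.setdefault(e, (f, g, h))
    return lookup


def match_column_b(keys, lookup):
    """Return the (F, G, H) values for each column B key, or None (like #N/A)."""
    return [lookup.get(key) for key in keys]


column_b = ["B-200", "Z-999", "A-100"]
table = build_lookup(filter_down_rows)
for key, result in zip(column_b, match_column_b(column_b, table)):
    print(key, result)
# B-200 ('Blue', 7, 3.25)
# Z-999 None
# A-100 ('Red', 12, 4.5)
```

Using `setdefault` keeps the first match for a repeated key, which matches VLOOKUP’s behavior; a missing key yields `None`, the equivalent of `#N/A`.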

Best Answer? ChatGPT

Claude 3 vs ChatGPT: UI and User Experience

Of course, ChatGPT and Claude are both pretty easy to use, and their interfaces look very similar in terms of their format and structure. The same can be said of Gemini, Perplexity AI, and Copilot. Most of these chatbots provide a smooth, straightforward user experience.

However, I like the calming tones Anthropic chose for Claude, as they match the attitude of the chatbot, which is maybe slightly more measured than some of its rivals. ChatGPT, on the other hand, can feel a little clinical sometimes with its greyish color scheme. Overall, Anthropic’s design is just a little nicer than ChatGPT’s.

Like Gemini, Claude generally does a better job of formatting its answers, something ChatGPT isn’t as good at (find out more in our Gemini vs ChatGPT head-to-head). Although ChatGPT uses headers to break up text more often than not, I prefer how Claude formats its answers. Claude also offers a different font style that’s easier for dyslexic people to read.

However, ChatGPT is completely free to use with no limit on how many questions you can ask. Claude’s free version, on the other hand, will lock you out if you ask too many questions and make you wait 3-4 hours before you can ask any more. This makes it less suitable for people who want a chatbot for work but don’t want to pay anything.

Claude 3 vs ChatGPT: Data and Privacy

Claude 3 and ChatGPT treat their users differently. If you’re concerned about your privacy, it’s important to know what they save, store, and view, and what they don’t. ChatGPT reserves the right to use your data to train its models, and Claude does the same. Both OpenAI and Anthropic say that they encrypt the connection between their servers and users end-to-end for maximum security.

However, Claude business and enterprise users will have their prompts and outputs automatically deleted within 28 days of receipt or generation, unless Anthropic is legally obligated to keep them for longer or the customer agrees otherwise. Consumer users will have their prompts deleted after 90 days, but if one of your prompts is flagged as potentially malicious, harmful, or unsafe, it could be retained for up to two years.

What ChatGPT does with your data is slightly different. Essentially, if you want to save your chats and have ChatGPT hold them on the system, then you also agree that they may be used to train the model, and in that sense, may be accessed by other humans. If you turn chat history off, you won’t be able to save any of your chats, but ChatGPT won’t use them to train its models. Business data sent through the ChatGPT API is not used to train GPT LLMs.

Using Chatbots at Work

Of course, there are tons of ways that businesses can use ChatGPT and Claude for work – in fact, we mentioned quite a few of them in this article. But if you’re using chatbots regularly at work, there are some considerations it’s worth reviewing.

For example, does your company have a set of guidelines for using AI tools? If you’re unsure, you should clarify this with your manager or the head of your department. You might not know it yet, but your company might have strict rules on the types of data you can input into third-party tools, and perhaps even AI tools more specifically.

Secondly, you must be open and transparent about your use of AI, particularly with your line manager. The debate about which tasks it’s appropriate to use AI chatbots to complete is ongoing, and other people at your company might have a different idea of what’s acceptable than you do. Plus, most managers and business leaders think you should seek permission before using AI tools.

Whatever task you’re using AI tools for, remember to check over their work as if it had been completed by a new employee. While scarily speedy and amazingly accurate most of the time, AI tools can of course hallucinate and provide incorrect information. So, don’t get too carried away!


Written by:

Aaron Drapkin is a Lead Writer at Tech.co. He has been researching and writing about technology, politics, and society in print and online publications since graduating with a Philosophy degree from the University of Bristol five years ago. As a writer, Aaron takes a special interest in VPNs, cybersecurity, and project management software. He has been quoted in the Daily Mirror, Daily Express, The Daily Mail, Computer Weekly, Cybernews, and the Silicon Republic speaking on various privacy and cybersecurity issues, and has articles published in Wired, Vice, Metro, ProPrivacy, The Week, and Politics.co.uk covering a wide range of topics.
