Russians Complain About Their Overpriced, Useless Drone Jammers – UAS VISION

FPV kamikaze drones are a serious threat to Russian armour. A small racing quadcopter armed with an RPG warhead can destroy a main battle tank with one well-aimed hit, and lesser-armoured vehicles are even more vulnerable. Ukraine has hundreds of thousands of drones, and the only protection is jammers, which in the case of Russian soldiers means betting your life on a highly corrupt procurement system, as one recent example shows.

Tank Protection Racket

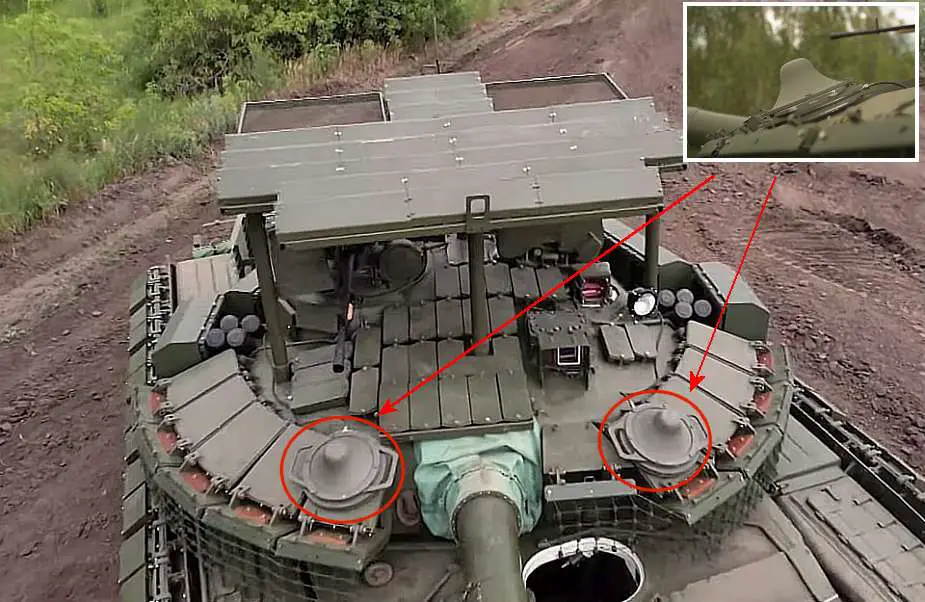

The Breakwater (“Volnorez” / “волнорез” in Russian) electronic protection system was unveiled at the ARMY2023 show in Moscow last year. The size of a dinner plate and weighing 28 pounds, Breakwater attaches to the side of a tank or other vehicle with magnets, providing instant protection. Such devices are sometimes called ‘mushrooms’ or ‘tank boobs.’

The Breakwater creates an invisible 360-degree bubble of protection claimed to extend out to 600 – 1,000 metres, making it far more effective than ‘trench jammers’ which only work out to 50 metres and may not stop an FPV diving towards a target. It can be switched to jam any of twenty different channels commonly used to control drones. But each Breakwater only jams one band, so several may be needed.

According to one Russian military blog:

“After being exposed to interference, most drones either veer off course, crashing into the ground, or remain stationary, marking time until they regain signal or run out of energy.”

Generating this protection takes some power, around 70 watts, of which 30 watts is broadcast as radio waves. To provide continuous protection Breakwater has to be installed on a vehicle with an auxiliary power unit; older models like the T-72 do not have one, so the system only works when the engine is on. The protection is blocked by metal, so tanks may have two or more Breakwaters to eliminate any dead zones.

Such jammers are widely sold online in Russia, and bought by charitable groups who send supplies to troops at the front.

You can buy a Breakwater online for 350,000 Rubles, or about $3,800. But you might want to hear about one disgruntled buyer’s experience first.

Zero Stars, Do Not Buy

“Romanov Lite” is a Russian military blogger with over 100,000 followers on his Telegram channel who carries out frequent fundraising drives for medical supplies and protective gear for soldiers on the front line. He was not happy with Breakwater.

“Soldiers who received these products are recommended to test them in the field, the results will not please you,”

he advised in a Telegram post, before giving more details of the problems he had found after taking one apart.

The first of these was the standard of construction: the device had clearly been assembled hastily, with little or no quality control.

“Absolutely poor build quality. The impression is that the Chinese are soldering all this somewhere in the garage. Therefore, the mean time between failures is the same as in children’s transistors,”

wrote Romanov Lite.

Then there was, perhaps unsurprisingly, the evidence that the device was grossly overpriced in relation to its component parts.

“With a selling price of 350 thousand Rubles ($3800), its real price does not exceed 50 – 60 thousand ($540-$650).”
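The markup implied by the quote can be worked out directly. The short sketch below uses only the two Ruble figures Romanov Lite gives; the function and variable names are illustrative, and the component cost is his estimate, not a verified bill of materials.

```python
# Figures as quoted by Romanov Lite; the component cost is his estimate.
SELLING_PRICE_RUB = 350_000
COMPONENT_COST_RUB = (50_000, 60_000)  # (low estimate, high estimate)


def markup_range(price, cost_low, cost_high):
    """Return (lowest, highest) ratio of selling price to component cost."""
    if cost_low <= 0 or cost_high < cost_low:
        raise ValueError("costs must be positive and ordered low to high")
    # The highest cost estimate gives the lowest markup, and vice versa.
    return price / cost_high, price / cost_low


low, high = markup_range(SELLING_PRICE_RUB, *COMPONENT_COST_RUB)
print(f"Markup: {low:.1f}x to {high:.1f}x")  # Markup: 5.8x to 7.0x
```

On those numbers, the selling price is roughly six to seven times the estimated cost of the parts.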

These should not have come as a shock. Overcharging for poorly-assembled devices made of cheap commercial electronics is pretty much standard in the Russian defence industry. But this jammer had some more fundamental design flaws too.

“The antenna creates a completely open “funnel” above the Breakwater, which Ukrainians spoke about,” says Romanov Lite.

The issue seems to be that the antenna does not, as stated, give full spherical coverage, but rather a toroidal or donut-shaped pattern with a gap directly above the device. If the opponent is aware of this blind spot, and it seems the Ukrainians are, they can use it to attack from a suitable angle.

There is a worse problem though.

“[In] a violation of all the laws of physics, the transistor module is located in a sealed container ABOVE the cooling radiator, where there is simply nowhere for heat to dissipate and by default it is doomed to burn out, which it does regularly,”

says Romanov Lite.

As noted above, Breakwater consumes 70 watts and converts 30 watts into radio waves; the other 40 watts are dissipated as heat. Managing excess heat is a common issue with electronics, which is why many desktops and laptops have cooling fans, and why smartphones in insulating cases may overheat.
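The power budget described above is simple arithmetic, and a short sketch makes the proportions explicit. It uses only the two wattage figures reported in the article; the names are illustrative, and it assumes that everything not radiated ends up as heat inside the unit, as the article states.

```python
# Figures as reported in the article; nothing here is measured.
INPUT_POWER_W = 70.0      # total electrical power drawn
RADIATED_POWER_W = 30.0   # power broadcast as radio waves


def power_budget(input_w, radiated_w):
    """Return (waste_heat_w, efficiency) for a transmitter.

    Assumes all input power that is not radiated becomes heat.
    """
    if not 0 <= radiated_w <= input_w:
        raise ValueError("radiated power must be between 0 and input power")
    waste_heat_w = input_w - radiated_w
    efficiency = radiated_w / input_w
    return waste_heat_w, efficiency


heat_w, efficiency = power_budget(INPUT_POWER_W, RADIATED_POWER_W)
print(f"Waste heat: {heat_w:.0f} W, efficiency: {efficiency:.0%}")
# Waste heat: 40 W, efficiency: 43%
```

In other words, on the reported figures more than half of the power drawn has to leave the housing as heat.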

The Breakwater’s radiator, which is supposed to disperse waste heat into the environment, is located in the heart of the device, so it just recirculates it instead. Even in a Ukrainian winter, the heat is enough to burn out the unit. Bad news for the tank crew, but potentially good news for the makers who can sell another unit, assuming the crew survive long enough to get one.

Follow the Money

These views were echoed by DanielR, a physicist who analyses Russian military hardware on Twitter/X.

“When I started looking at these photos I was initially very confused as nothing made sense,” DanielR told Forbes. “This is because the quality was much worse than I had expected.”

DanielR was looking at pictures posted by “Serhii Flash,” a Ukrainian electronic warfare expert who had dismantled a Breakwater and put his own scathing review online.

“Overall, the quality is more like an electronic toy or home appliance,”

says DanielR, who doubts that the flimsy device held together with sealant and nylon screws would survive for long on a tank.

In his critique, DanielR notes that the DC-DC power converter is a $10 consumer model that is inadequate for the job, and suggests that this is the part which is most likely to fail.

“It seems that someone is making a lot of money,” says DanielR.

Romanov Lite has promised to visit the factory where Breakwaters are made. But if this company disappears, another just like it will likely take its place. There is a market desperate for drone jammers, and in the Russian defence business, that means money for crooks.

Source: Forbes

Russia Unveils Portable Tank-Mounted Drone Jammer to Counter Ukrainian Attacks

In the ever-evolving landscape of modern warfare, the use of drones has become increasingly prevalent, posing significant threats to armored vehicles on the battlefield. Responding to this emerging challenge, Russia has unveiled its latest countermeasure, the REB "Волнорез" (Volnorez - Breakwater) electronic warfare jammers. This state-of-the-art system was first showcased and mounted on a T-80BVM Main Battle Tank (MBT) during the Russian Army Expo 2023 defense exhibition, capturing the attention of defense analysts worldwide.


The Russian-made Volnorez portable drone jammer system can be easily mounted on a tank turret to counter drone attacks. (Picture source: Facebook Garupan History)

The Volnorez boasts impressive frequency coverage, spanning from 900 MHz to 3,000 MHz. This wide spectrum ensures that it can effectively jam a variety of drones operating within this range. With the ability to disrupt drone signals from over a kilometer away, the Volnorez stands as a formidable deterrent against aerial threats. Once jammed, most drones either veer off course, crashing into the ground, or remain stationary, loitering in place until they either regain signal or deplete their power reserves. This capability ensures that vehicles equipped with the Volnorez, as well as those in its vicinity, gain a significant layer of protection against drone attacks, marking a game-changer in defensive capabilities, especially in conflict zones like Ukraine.

One of the standout features of the Volnorez is the versatility of its jammer antennas. These are magnetically attached, allowing for flexible placement on almost any part of the vehicle. Furthermore, the jammer signals are omnidirectional, ensuring 360° of protection regardless of the antenna's position on the vehicle. While it's believed that the Volnorez is primarily fitted on vehicles equipped with an auxiliary power unit (APU) to ensure uninterrupted operation, early tests of the system were conducted on a T-72B3 tank, which did not have an APU. This suggests potential versatility in its deployment across different vehicle platforms.

The introduction of the Volnorez jamming system underscores the importance of electronic warfare in modern combat scenarios. As the situation in Ukraine continues to evolve, the effectiveness of this new system will undoubtedly be closely watched by military experts and strategists around the globe.

Defense News September 2023