Summary

Farmers think it's cool technology, but struggle to make practical use of imagery.

This paper describes a study using uncrewed aerial systems (UAS) to collect high-resolution multispectral imagery of coffee agroecosystems in Puerto Rico. The authors created land cover classification maps of the farms using a pixel-based supervised classification approach. The average overall classification accuracy was 53.9%, which was expected given the high diversity of the landscapes.

To understand the utility of the maps for farm management, the authors interviewed the farmers, showing them the imagery and classification maps. While the farmers were excited about the maps and found them to be a source of pride, they were unsure how to practically apply them to management of their farms. Many farmers had difficulty orienting themselves in the maps and noted that the maps lacked representation of the full biodiversity and crops present.

The authors conclude that while the classification accuracies could be improved with refined methodologies, involving the farmers revealed important insights. Farmers appreciated the simplified maps for an introduction, but wanted to see more of their farm diversity represented. The authors argue for more iterations of the classifications that incorporate farmer feedback and local knowledge. Overall, the study highlights the potential of UAS remote sensing for highly diverse agroecosystems, but emphasizes the importance of farmer engagement and co-development of mapping products to maximize their relevance and utility for farm management. Further work is needed to bridge the technology with farmers' needs and perceptions.

Geographies | Free Full-Text | Farmer Perceptions of Land Cover Classification of UAS Imagery of Coffee Agroecosystems in Puerto Rico

by

Gwendolyn Klenke 1,*, Shannon Brines 1,*, Nayethzi Hernandez 1, Kevin Li 1, Riley Glancy 1, Jose Cabrera 2, Blake H. Neal 2, Kevin A. Adkins 2, Ronny Schroeder 3 and Ivette Perfecto 1,*

1 School for Environment and Sustainability, University of Michigan, Ann Arbor, MI 48109, USA

2 Department of Aeronautical Science, Embry-Riddle Aeronautical University, Daytona Beach, FL 32114, USA

3 Department of Applied Aviation Sciences, Embry-Riddle Aeronautical University, Prescott, AZ 86301, USA

*Authors to whom correspondence should be addressed.

Submission received: 1 March 2024 / Revised: 5 May 2024 / Accepted: 14 May 2024 / Published: 16 May 2024

Abstract

Highly diverse agroecosystems are increasingly of interest as the realization of farms’ invaluable ecosystem services grows. Simultaneously, there has been an increased use of uncrewed aerial systems (UASs) in remote sensing, as drones offer a finer spatial resolution and faster revisit rate than traditional satellites. With the combined utility of UASs and the attention on agroecosystems, there is an opportunity to assess UAS practicality in highly biodiverse settings. In this study, we utilized UASs to collect fine-resolution 10-band multispectral imagery of coffee agroecosystems in Puerto Rico. We created land cover maps through a pixel-based supervised classification of each farm and assembled accuracy assessments for each classification. The average overall accuracy (53.9%), though relatively low, was expected for such a diverse landscape with fine-resolution data. To bolster our understanding of the classifications, we interviewed farmers to understand their thoughts on how these maps may be best used to support their land management. After sharing imagery and land cover classifications with farmers, we found that while the prints were often a point of pride or curiosity for farmers, integrating the maps into farm management was perceived as impractical. These findings highlight that while researchers and government agencies can increasingly apply remote sensing to estimate land cover classes and ecosystem services in diverse agroecosystems, further work is needed to make these products relevant to diversified smallholder farmers.

1. Introduction

Unlike the highly input-dependent monocultures that make up a large portion of the food production system [1,2], diversified agroecosystems have the capacity to maintain ecosystem services, biodiversity, and farmer livelihoods, representing a more sustainable alternative to monocultures [3,4,5]. Coffee agroecosystems are ecologically, economically, and politically significant to the neotropics [6]. Ecologically, coffee is significant because of the species richness it has the potential to promote. While coffee is grown along a gradient ranging from unshaded monocultures to shaded polycultures and agroforestry systems, many coffee farms in the neotropics promote biodiversity by planting coffee in the shade of overstory vegetation. This overstory vegetation and other cultivated plants intercropped with coffee can provide habitat for wild flora and fauna and regulate ecosystem functions [7,8,9]. Economically, a significant portion of the world’s coffee production takes place in Latin America [10,11,12]. Because of the significant economic impact that coffee exports have on the neotropics, government policy has frequently encouraged high-intensity production at the expense of more ecologically sound agroecosystems [13].

The advent of uncrewed aircraft (UAs), or drones, means that remote sensing imagery can be captured with a much finer spatial resolution, on the order of tens of centimeters [14], compared to satellites like Sentinel-2A MSI and Landsat 8 OLI, which have resolutions of 10–20 m and 30 m per pixel, respectively [15]. In addition to the increased spatial resolution, drones have quicker revisit times and can be employed with greater ease and maneuverability given appropriate conditions. The flexibility and increased spatial resolution of drones mean that UAs have the potential to create vastly more accurate land cover classifications. These advances in drone capabilities have the potential to better support diversified farming systems by providing a platform to monitor key agroecological features, such as biodiversity, soil health, or pests [16].

While the use of finer-resolution uncrewed aerial vehicle (UAV) data may aid in improving classification of more diverse farms, researchers and other outside actors making these classifications should be aware of how their perceptions potentially impact classes of interest [17]. In order to derive practical tools and analyses from classifications, farmers should be included in the mapping and classification of their land. This becomes especially important in diversified systems, as more nuances can exist in what does and does not constitute a “crop”. Working in partnership with farmers highlights how emphases on accuracy in certain land cover classes may differ between researchers and farmers, and how this can lead to alternative sources of bias. For example, Laso et al. (2023) note that the inclusion of “silvopastures” in their classification led to the potential underreporting of another class. However, in Laso et al. (2023), the inclusion of “silvopastures” was more relevant to farmers [17].

In this project, we sought to understand how fine-resolution imagery enabled by advancing UAV technology may bolster management of diversified smallholder farms. We classified multispectral imagery taken by UAV over nine farms in the central western region of Puerto Rico and presented imagery and classified maps of given farms to farmers. After sharing imagery and maps with farmers, we interviewed the farmers to understand how data collected by UAVs can be used in ways that align with farmers’ expectations and needs. We synthesized the technical methodologies of remotely sensing diverse farms at a high resolution and the results of engaging farmers in the initial classification of their own land. In conducting this work, we acknowledge that use of UAVs has the potential to reinforce historical, asymmetric power dynamics between researchers and land stewards [18]. By inviting land managers to review our initial classifications in interviews, we hope to take steps towards empowering communities as the experts in their own lands and de-emphasize the power dynamics between researchers and land managers.

2. Materials and Methods

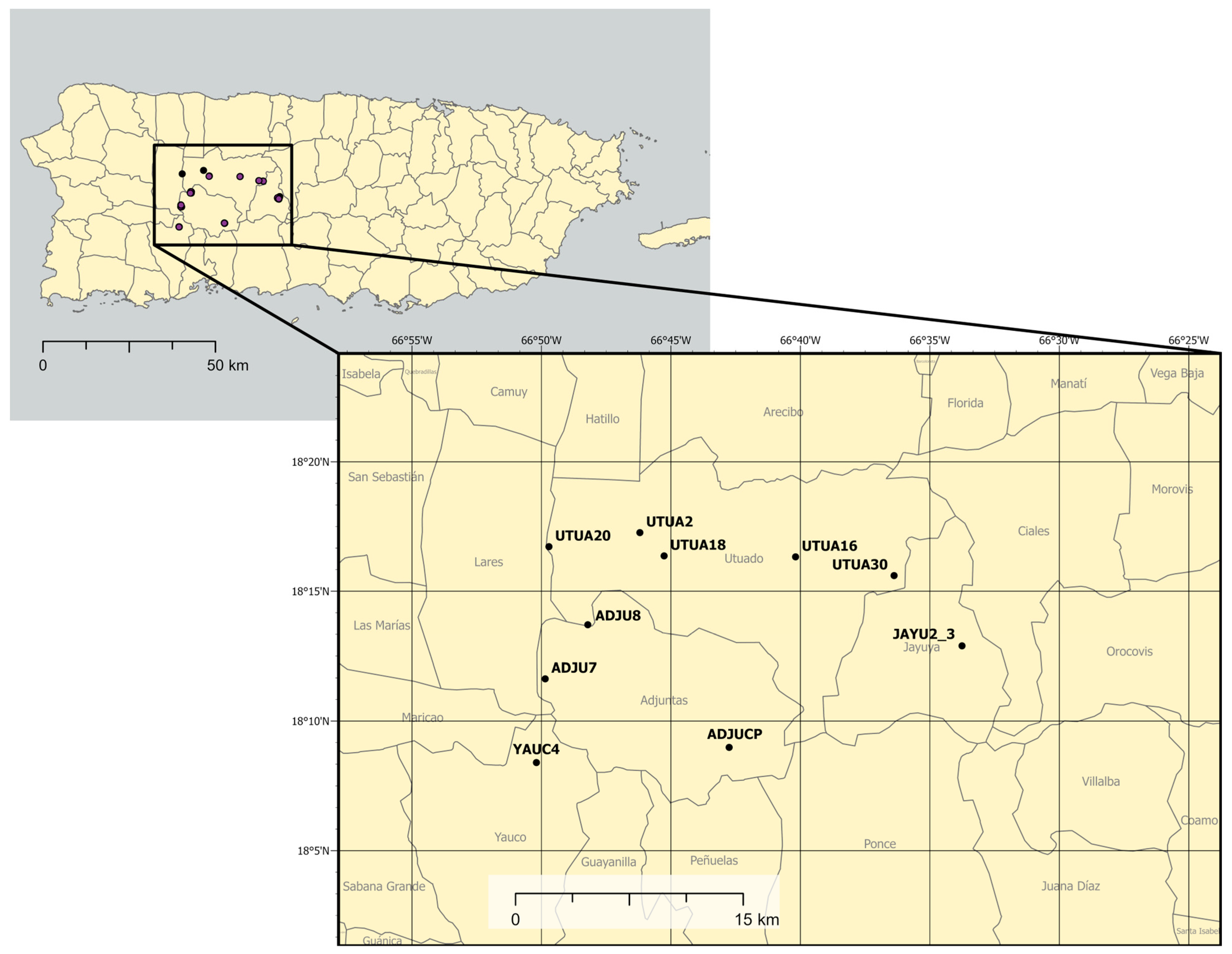

Our study took place in the coffee-growing mountainous areas of central-western Puerto Rico. More specifically, farms were surveyed in Utuado, Adjuntas, Jayuya, and Yauco (see Figure 1). Farms in these regions experience between 177 and 229 cm of annual rainfall [19] and are classified as submontane and lower montane wet forests [20]. Soils present in the coffee-growing region include ultisols, inceptisols, and oxisols [21]. Farms surveyed were a part of long-term coffee agroecosystem research in the region and spanned across a gradient of coffee production intensification [8]. Coffee cultivation periods significantly vary in this region, but coffee is generally harvested in autumn. Other commonly found crops in these diverse agroecosystems include citrus trees, bananas, and plantains. The farms surveyed had an average slope of 15.4 degrees. Farms ranged from 0.8 to 56.7 hectares in size. More information can be found in Table 1.

Figure 1. Study sites within the central-western coffee growing region of Puerto Rico. Municipalities layer from UN Office for the Coordination of Humanitarian Affairs. The figure is projected to “StatePlane Puerto Rico Virgin Isl FIPS 5200 (Meters)”, a version of the Lambert conformal conic projection, and has a datum of NAD 1983.

Table 1. Information on farm size, aspect, slope, and classification based on Moguel and Toledo’s (1999) coffee growing gradient.

The uncrewed aircraft (UA) flights used in this study were conducted in 2022 to collect 10-band multispectral imagery. Before 2021, numerous preliminary data-gathering missions occurred with the use of fixed-wing and multirotor UAs. Ground data collection, which includes the GPS and plant characteristic data, occurred in 2021, 2022, and 2023. Interviews with farmers were conducted in May of 2023 and were subject to review and exemption approval by the Institutional Review Board (IRB) of the University of Michigan.

2.1. Ground Data Collection

Ground data collection was conducted in field campaigns in 2021, 2022, and 2023 to create control points to train and test land cover classification accuracy. We used the ESRI Collector or ESRI Field Maps smartphone app to capture data from a linked external GNSS receiver. In earlier campaigns, the Trimble R1 was used, and in later campaigns, a Bad Elf Flex was incorporated and used as a secondary GNSS receiver. Both of these external GNSS receivers were placed on a 2-m tall survey pole to help maintain an adequate satellite connection. Both external receivers increased GPS accuracy (as compared to the integrated GPS in the smartphones used to capture data), but steep topography meant that strong connections to satellites were not always achieved, resulting in decreased GPS accuracy. The Trimble R1 receiver typically achieves submeter accuracy [22], whereas the Bad Elf Flex achieves 30–60 cm accuracy on average [23]. Because of the steep topography, accuracies below 1 m were typically accepted. On very few occasions, accuracies of around 1.5 m were accepted if a given surveyor had waited 5 min with no improvement in accuracy.
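The acceptance rule for GNSS fixes described above can be written as a small decision function. The following is only an illustrative sketch: the function and constant names are hypothetical, and treating "around 1.5 m" as a hard cutoff is an assumption rather than a documented field protocol.

```python
# Thresholds taken from the text. Treating "around 1.5 m" as a hard
# cutoff is an assumption made for illustration.
PREFERRED_ACCURACY_M = 1.0   # fixes below this were typically accepted
FALLBACK_ACCURACY_M = 1.5    # accepted only on rare occasions
MAX_WAIT_MIN = 5.0           # wait time before falling back


def accept_fix(accuracy_m, minutes_waited, still_improving):
    """Return True if a GNSS fix would be accepted under the sketched rule."""
    if accuracy_m < PREFERRED_ACCURACY_M:
        return True
    # Fallback: the surveyor has waited long enough and accuracy has stalled.
    waited_long_enough = minutes_waited >= MAX_WAIT_MIN and not still_improving
    return waited_long_enough and accuracy_m <= FALLBACK_ACCURACY_M


print(accept_fix(0.6, 0.0, True))    # submeter fix
print(accept_fix(1.4, 5.0, False))   # stalled fix after a 5 min wait
print(accept_fix(1.4, 2.0, False))   # same accuracy, but not yet waited 5 min
```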

At a given crop or plant of interest, the survey pole with attached external GPS was placed as close to the base of the plant as possible. Using a smartphone and either ESRI’s Field Maps or Collector, a GPS point was recorded. The data capture software recorded various types of information for each GPS point. If the plant of interest was coffee, information on the coffee leaf rust (CLR) and leaf miner level was recorded. Other information collected included the plant type, specific plant species if relevant, farm code, percent of plant covered by vines, notes about the surrounding canopy, date and time of point collection, and a photo of the plant or surroundings if desired.

2.2. Remote Sensing Flights with Uncrewed Aircraft

The uncrewed aircraft (UA) flights used in this study were conducted in mid-May of 2022 to collect 10-band multispectral imagery. UA work and subsequent method documentation were in accordance with the Federal Aviation Administration’s (FAA) 14 CFR Part 107 regulations. Highly variable topography within the coffee-growing region of Puerto Rico required significant mission and flight planning in order to collect quality multispectral and LiDAR data. Mission planning was completed prior to arrival in Puerto Rico, and included tasks such as identifying appropriate equipment and sensors for the specific terrain and creating standardized procedures. Google Earth Pro was first utilized to identify farm boundaries and areas within farms that may be of special interest, in addition to being used to identify potential divisions for farms that were too large to be imaged with a single drone flight.

A DJI Inspire 2 multirotor UA was outfitted with a multispectral imaging sensor. Multispectral imaging for relevant field campaigns was performed using a MicaSense RedEdge-MX Dual Camera Imaging System, which included 10 synchronized bands that spectrally overlapped with Sentinel-2A MSI and Landsat 8 OLI imagery (detailed in Table 2).

Table 2. Spectral band information for the MicaSense RedEdge-MX Dual Camera Imaging System as compared to Sentinel-2A MSI and Landsat 8 OLI.

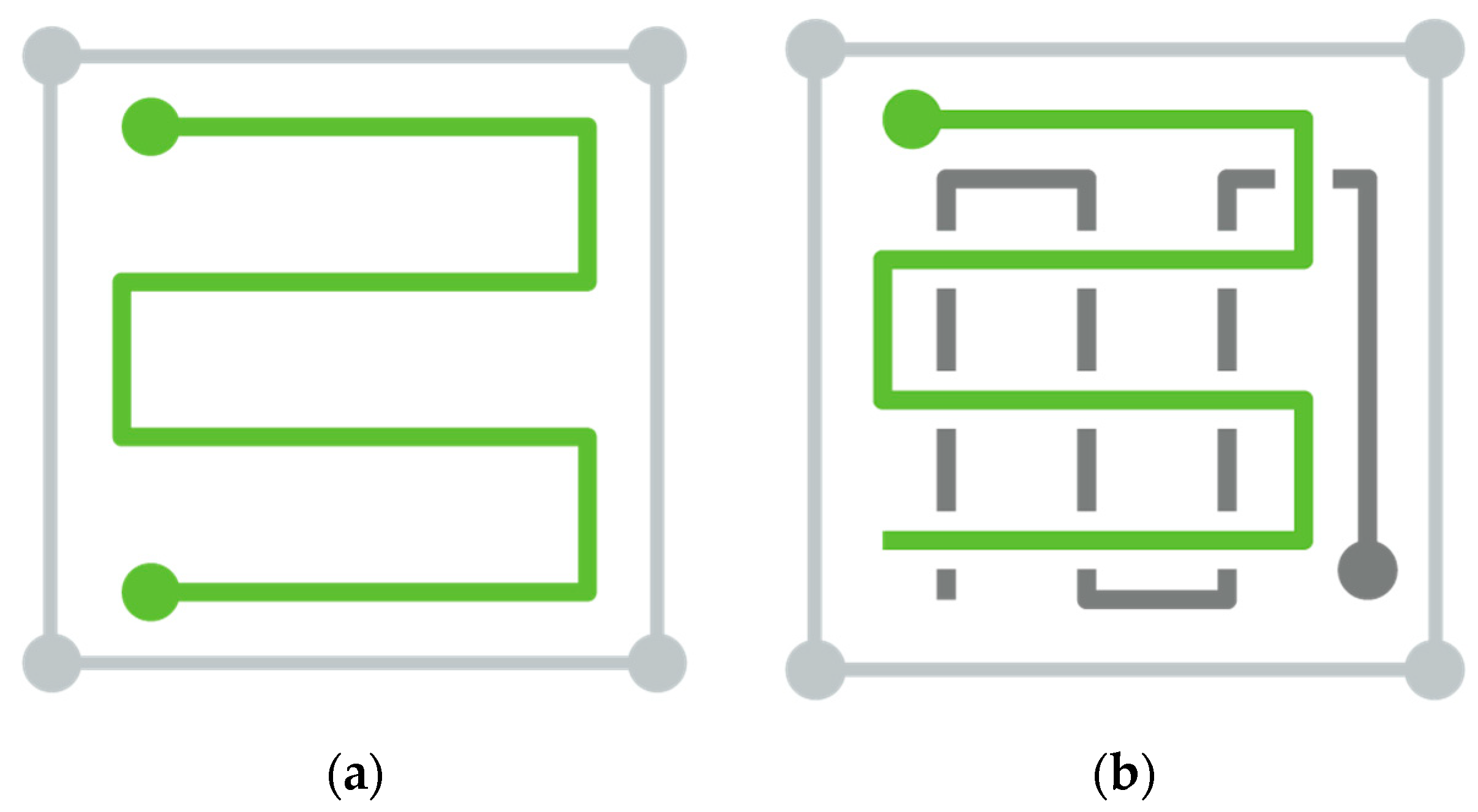

On site, a waypoint-defined flight plan was created in DJI Ground Station Pro on a mobile tablet. The size of the farm, data needs, and underlying surface were considered in determining whether a single or double grid (cross-hatch) flight pattern was flown (Figure 2). Generally, larger farms were flown over as a single grid, as a double grid requires more flight time and therefore more battery life.

Figure 2. (a) Depiction of a single-grid flight pattern; (b) depiction of a double-grid flight pattern.

Prior to farm classifications, basic image processing was performed in Agisoft Metashape in order to create a georeferenced orthomosaic [24]. The default processing utilized the GPS data generated by the UAS and MicaSense dual camera data capturing process, with no additional manual ground control point input. Reflectance calibration was performed, but no reflectance normalization was performed across flights or farms.

2.3. Image Processing and Classification

The 2022 images were pre-processed and classified for interviews with farmers in 2023. In order to run comprehensive, farm-level classifications, it was determined that for farms that had multiple multispectral images (UA flights), the various images should be mosaicked to create one image per farm. Mosaics were created in ERDAS IMAGINE using the MosaicPro tool, with an “overlay” overlap function specified, default “optimal seamline” generation option chosen, and color corrections set to “histogram matching”.
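To give a sense of what a "histogram matching" color correction does, the sketch below remaps the values of one band so that their distribution follows that of a reference band, using simple quantile mapping. It is a generic illustration in plain Python, not the ERDAS IMAGINE MosaicPro implementation, and the function name and sample values are hypothetical.

```python
from bisect import bisect_left


def match_histogram(source, reference):
    """Map each source value to the reference value at the same empirical rank."""
    src_sorted = sorted(source)
    ref_sorted = sorted(reference)
    n_src, n_ref = len(src_sorted), len(ref_sorted)
    matched = []
    for value in source:
        # Rank of this value within the source band (lowest rank for ties).
        rank = bisect_left(src_sorted, value)
        # Scale the rank onto the reference band's index range.
        idx = round(rank * (n_ref - 1) / (n_src - 1)) if n_src > 1 else 0
        matched.append(ref_sorted[idx])
    return matched


# Hypothetical pixel values from the same band in two overlapping flights.
darker_flight = [10, 40, 20, 30]
brighter_flight = [100, 110, 120, 130]
print(match_histogram(darker_flight, brighter_flight))
```

After matching, the darker flight takes on the value range of the brighter one while preserving the relative ordering of its pixels, which is why the technique can reduce visible seams but cannot by itself correct reflectance differences.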

Pixel-based supervised classifications were run in ArcGIS Pro 3.1, sourced from Esri Inc., Redlands, CA, USA. After loading in the mosaicked farm image, the ground control points (GCPs) from three field campaigns were also layered on top. A classification schema was created to encompass the dominant crops and land cover types across the farms, based on previous visits. This schema included the following 10 classes: coffee, citrus, banana, palms, low herbaceous vegetation/grass, bare earth, pavement, buildings, water, and overstory vegetation. For each class, training site polygons were drawn using GCPs as a reference. For instance, if creating a training site for coffee, a polygon was drawn around whichever coffee plant(s) a GCP identified as coffee. For farms that may be larger, significant areas of land would have no GCPs. In order to create representative training sites across the entirety of a farm, polygons were drawn in areas without GCPs that were visually confirmed to match plants with associated GCPs. After creating ample training sites for each class within each farm, a support vector machine (SVM) classifier was run on the entirety of the farm. We expected that many farms may have a limited number of potential training and testing sites. Knowing this, we selected SVM because the classifier makes no assumptions about the data distribution [25] and is less susceptible to an imbalance in training samples [26].

After preliminary classifications were completed, interviews occurred, and analysis was finalized after the interviews. Accuracy assessments were run using testing sites created with the same process as the training sites. For each farm, roughly the same number of testing sites and training sites (0–15 sites depending on the farm and class) were created for a given class. As much as possible, testing sites did not overlap with previously created training sites, with a few exceptions. For instance, farms with water bodies typically only had one small pond, which meant there was little to no separation between training and testing sites for that class for that farm. Testing sites were used as reference data for the accuracy assessments, which were then run. We tested additional iterations of classifications utilizing principal component analyses (PCAs) to determine if accuracy was increased by the addition of more data (Table 3). While the inclusion of PCAs in classifications did not ultimately improve classifications, the PCAs confirmed that 10 bands of imagery explained more than 98% of the variance.

Table 3. Improved classification iterations applied on 2022 farm imagery.

2.4. Farmer Interviews

In May 2023, we conducted semi-structured interviews with farmers, land managers, and owners, with references made to the multispectral imagery and the classifications. For this purpose, we made posters of each farm’s multispectral imagery and classifications in ArcGIS Pro 3.1. These posters were then printed on 32″ × 40″ matte paper. These interviews were conducted with the intention of better understanding land use history, farmers’ spatial relationships with their farms, and how remote sensing or land cover classifications may improve the management or understanding of such complex agroecosystems. Interviews were conducted onsite at farms, or at homes on farm property with teams of 2–3 researchers. Interviewees were asked if they consented to both the interview itself, as well as being recorded during the interview using an audio recorder. See Appendix A for more information on the interview script.

Our interviews assumed that we would be referencing the printed orthomosaics and classifications, but many interviews also included walking areas of the farm with farmers as they pointed out specific crops or landmarks. Interview length varied greatly, with some interviews under an hour and others over two and a half hours. This length variation is primarily because interviews were farmer-guided, with respondents addressing topics they felt relevant. After a series of questions that were intended to orient researchers to the specifics of a given farm, the multispectral image was shown to the farmers. This was intended to show the farmers what the UA had collected, as well as compile any preliminary thoughts the farmers had on the UA itself. In earlier interviews, tracing paper was laid on top of the multispectral image, and farmers were encouraged to annotate any areas they felt important or of general interest. Annotating tracing paper was later removed as part of the interview process, as farmers were often more comfortable speaking generally about the land. After viewing the multispectral image, the classification image was brought out, and farmers were asked questions about the utility of the classification in their management. Viewing the classification map was largely considered to be the conclusion of the interview, and farmers were asked if they had any questions for the researchers. Both the multispectral imagery and the classification maps were left with interviewees at the conclusion of the discussions.

After the interviews were completed, they were uploaded into transcription software and transcribed in Spanish. Researchers then translated the transcriptions from Spanish to English, making corrections to the transcriptions where the software failed to capture any regional language differences or language not otherwise captured. A content analysis was run on the interviews, which included coding each interview transcript individually, as well as synthesizing notes from interviews that were not recorded. In order to conduct an effective content analysis, each theme was clearly defined by researchers. Examples or quotes from interviews were highlighted and sorted into relevant themes. Each example was again reviewed by researchers to ensure that a given example fit into the theme it was assigned to. Each theme was linked to a more generalized research finding from the interviews, and the relevance of each theme to the project at large was defined. Results were then summarized and put into a content matrix.

3. Results

3.1. Ground Data and Image Capturing

The results of drone flights for 2022 were largely successful. Ten farms were surveyed with multispectral imagery. Table 4 details the number of flights flown per farm, as well as the grid pattern flown at each farm. Farms that required multiple flights were flown in succession, with brief pauses between flights to accommodate a battery change for the drone. Of these 10 farms, all but two (ADJUCP and ADJU7) were classified. ADJUCP was not classified as we were unsure if an interview would occur with land managers, and ADJU7 was not classified as large amounts of water were highly reflective and changed the color balance of the farm mosaic. In addition, Table 4 details the ground control points (GCPs) collected by farm.

Table 4. The number of flights flown and ground control points collected by farm.

3.2. Classifications and Accuracy Assessments

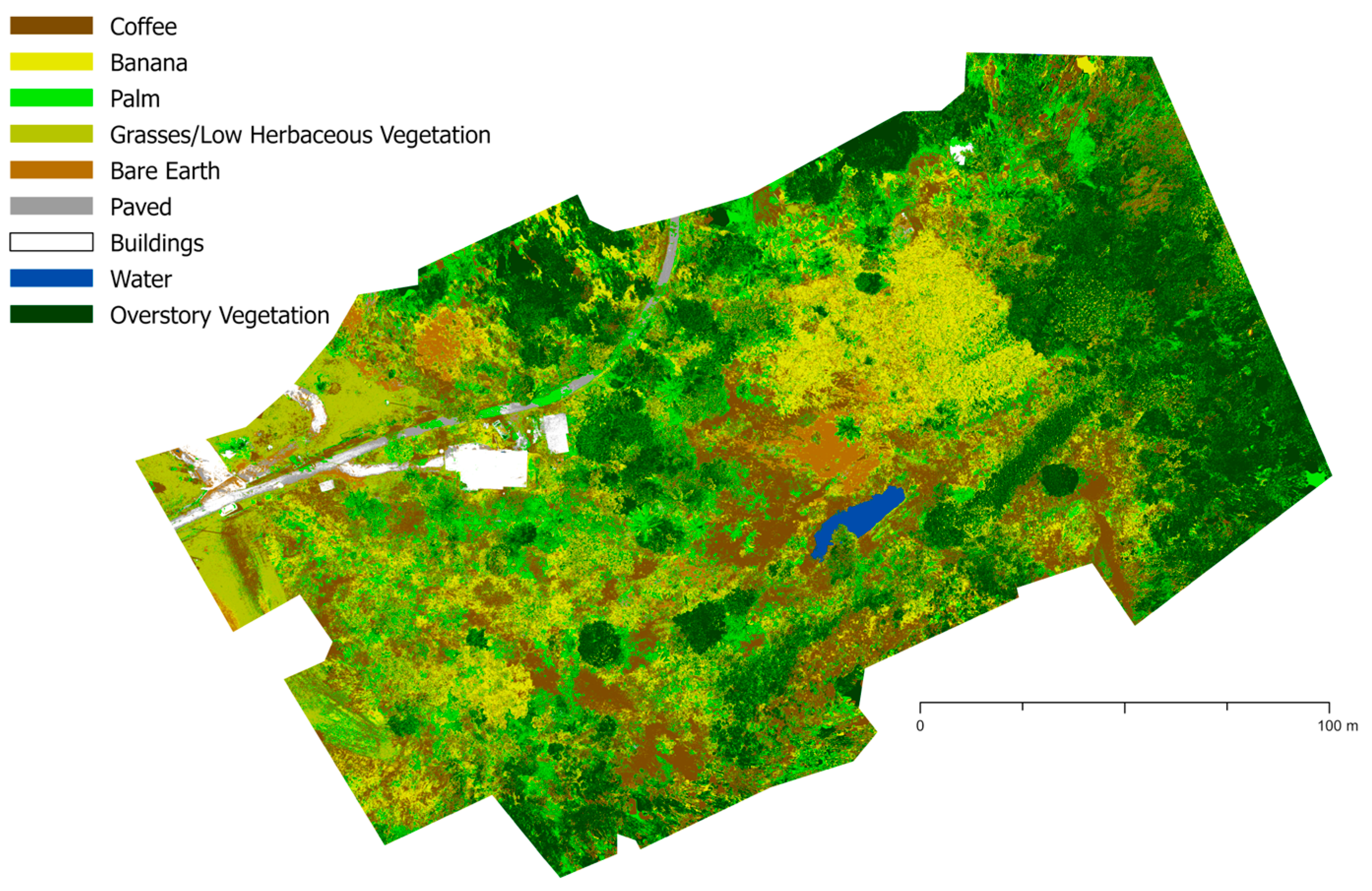

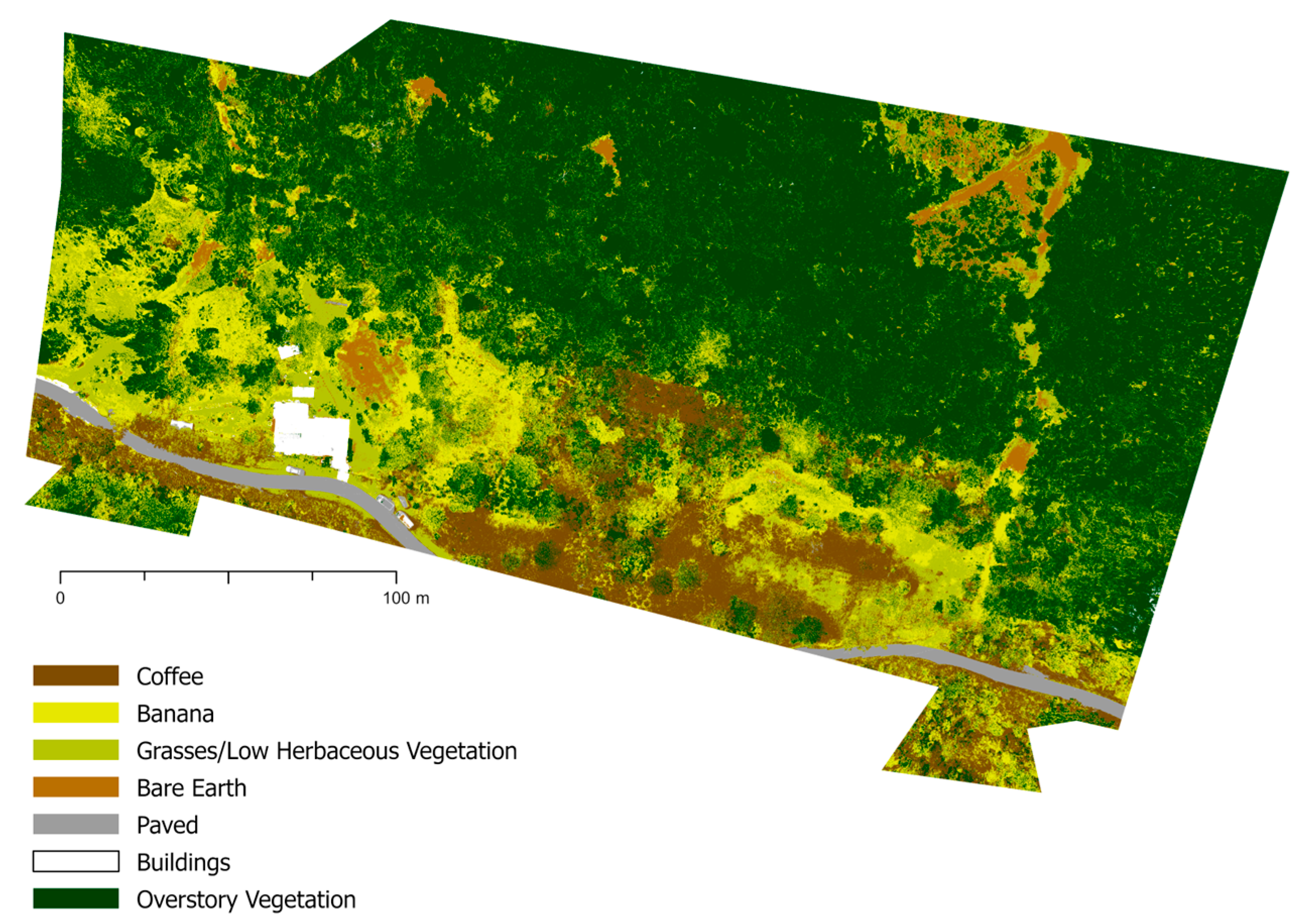

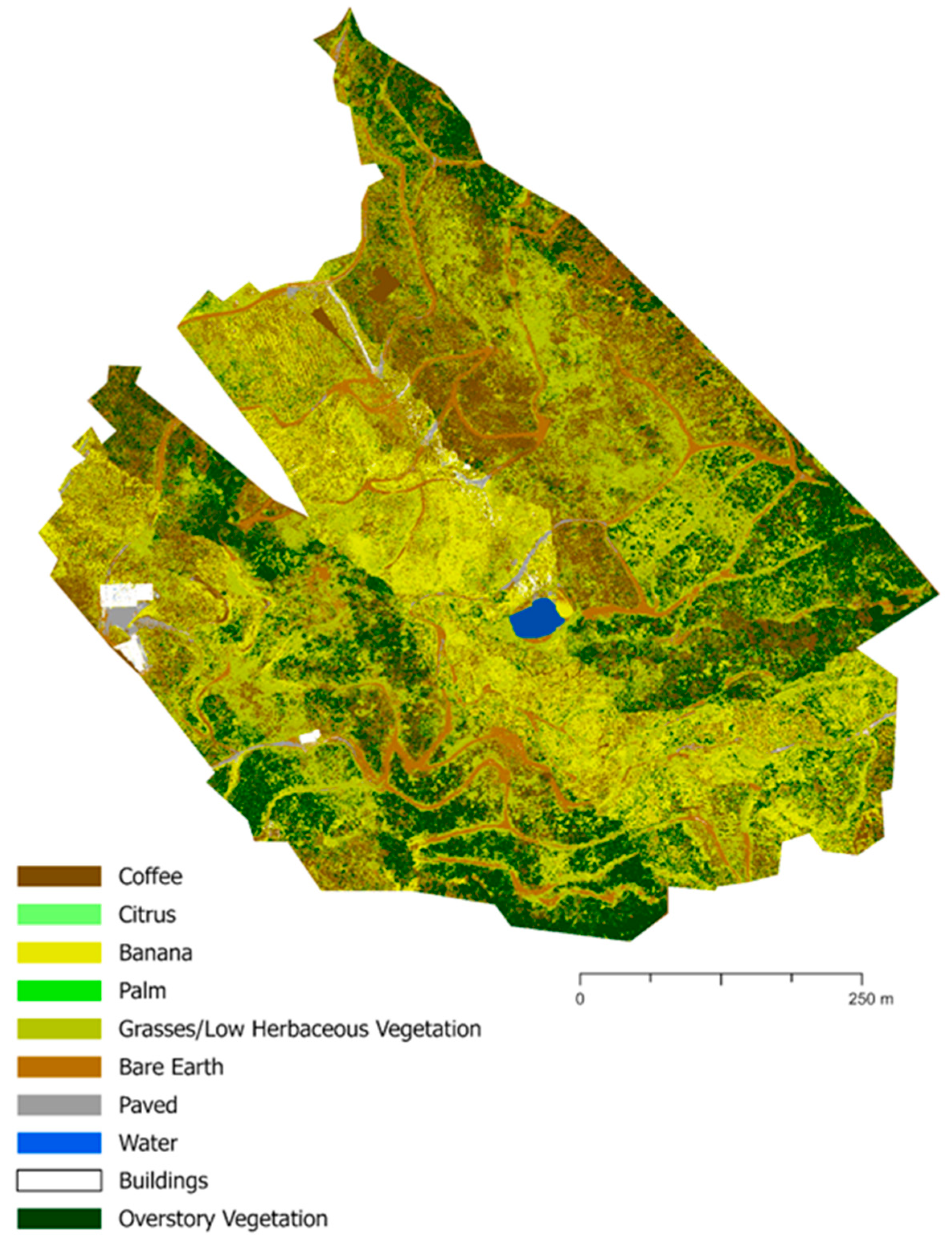

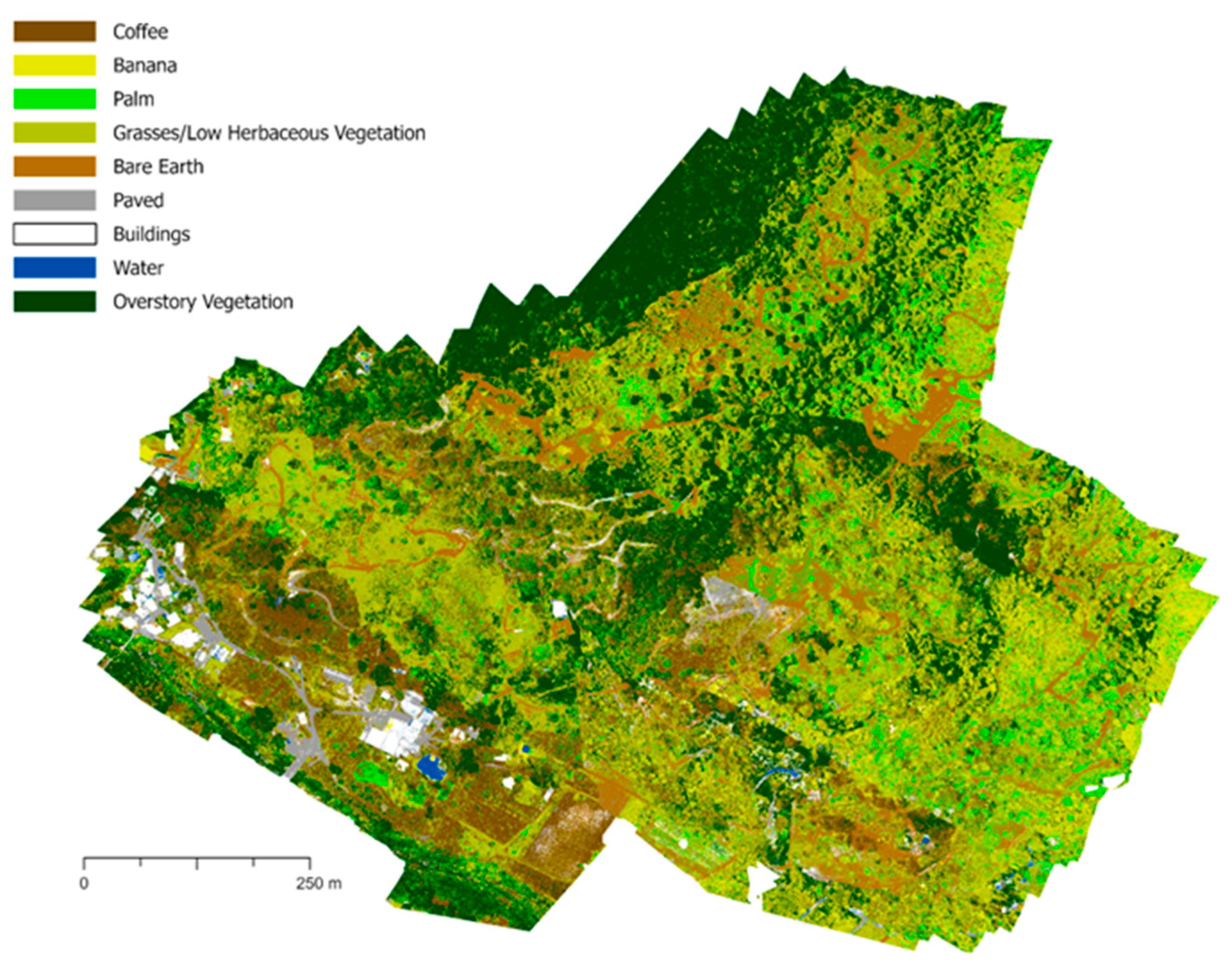

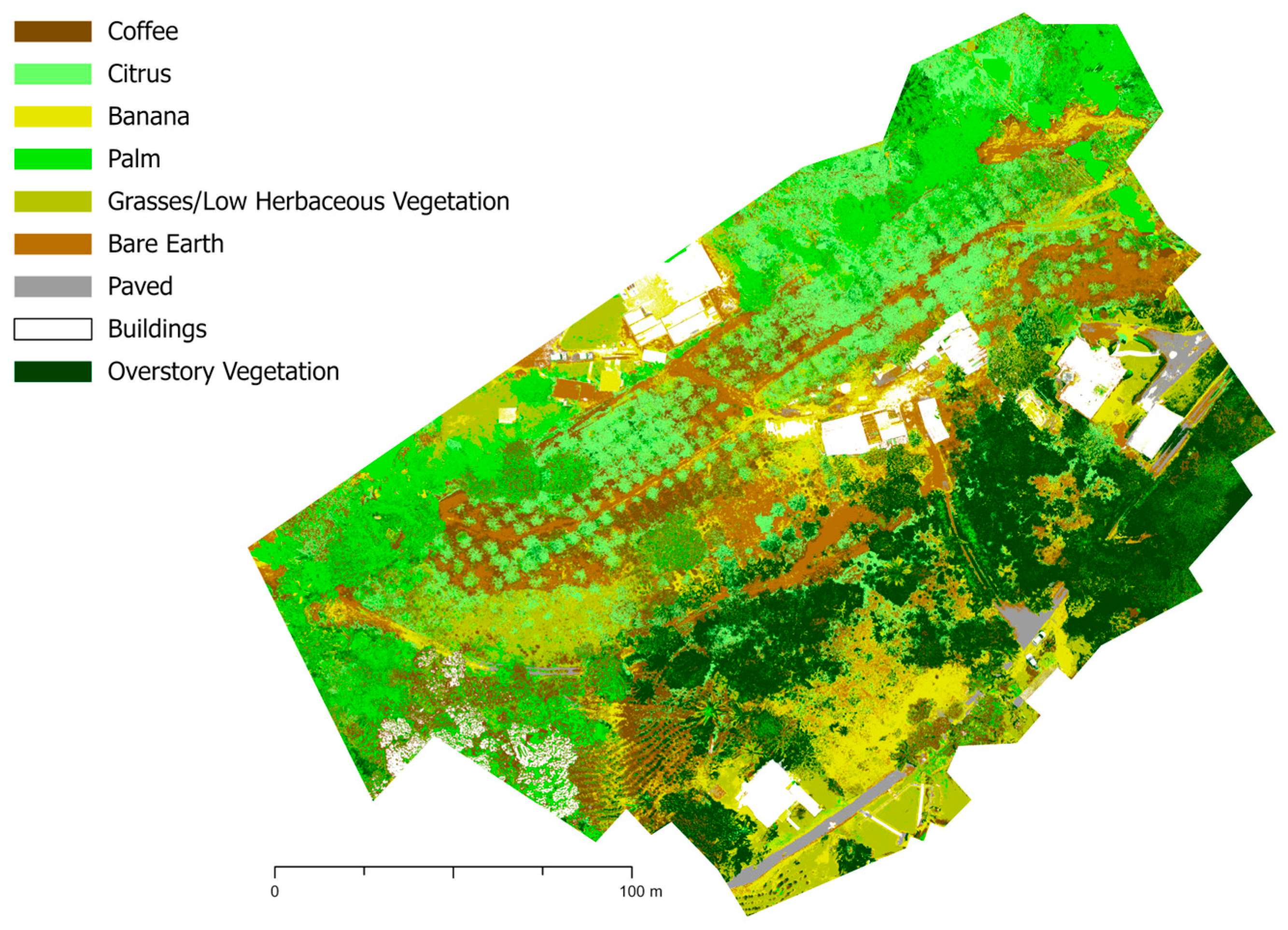

An example classification result is shown in Figure 3. Classification figures for all other farms can be found in Appendix B, Figures A1–A7. Training and testing sites are detailed in Tables A1–A4 in Appendix C. Both training and testing sites are quantified in two forms: polygons and pixels. Polygons designate the number of sites drawn, and pixels refer to the total number of pixels across all polygons.

Figure 3. Example land cover classification of farm UTUA2 using 2022 multispectral imagery. All maps shown are projected in the coordinate system “StatePlane Puerto Rico Virgin Isl FIPS 5200 (Meters)” datum of NAD 1983.

The initial landcover classifications were all assessed for accuracy. Table 5 details both the overall accuracy of the classification, as well as the Cohen’s Kappa statistic. The Cohen’s Kappa statistic incorporates errors of commission and omission and is regarded as more nuanced than that of overall accuracy [27]. Kappa is reported on a scale of −1 to +1, with values closer to +1 indicating a stronger classifier. A classifier is considered strong if it has a high accuracy while considering the expected accuracy of a random classifier [28].
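For readers less familiar with these two measures, the sketch below computes overall accuracy and Cohen's Kappa from a confusion matrix using the standard definition, kappa = (p_o − p_e) / (1 − p_e), where p_o is the observed agreement and p_e is the agreement expected by chance. The three-class matrix is hypothetical and is not taken from any farm in this study; the function names are likewise illustrative.

```python
def overall_accuracy(matrix):
    """Share of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


def cohens_kappa(matrix):
    """Cohen's Kappa: (p_o - p_e) / (1 - p_e)."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    p_o = overall_accuracy(matrix)
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    # Chance agreement: product of row and column marginals, summed over classes.
    p_e = sum(r * c for r, c in zip(row_totals, col_totals)) / total ** 2
    return (p_o - p_e) / (1 - p_e)


# Hypothetical matrix: rows = classified label, columns = reference label.
example = [
    [30, 5, 5],
    [10, 20, 10],
    [5, 5, 10],
]
print(round(overall_accuracy(example), 3))  # 0.6
print(round(cohens_kappa(example), 3))      # 0.385
```

In this made-up case, 60% of samples are correct, but because 35% agreement would be expected by chance given the class totals, Kappa is noticeably lower than overall accuracy.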

Table 5. Accuracy of farm classification using 2022 imagery. The table details the overall accuracy of each farm along with Cohen’s Kappa statistic.

The average overall accuracy across all farms was 53.9%, and the average Kappa statistic across all farms was 0.409. Farm YAUC4 had the highest overall accuracy, as well as the highest Kappa statistic. The farm with the lowest accuracy and Kappa statistic for classification was UTUA16. Individual accuracy assessments, including users’ and producers’ error, can be found in the Supplementary Material, Tables S1–S9.
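User's and producer's accuracies are per-class measures read from the same confusion matrix: user's accuracy is the share of pixels assigned to a class that truly belong to it (its complement is the error of commission), and producer's accuracy is the share of reference pixels of a class that were correctly labeled (its complement is the error of omission). The sketch below uses a hypothetical matrix and class labels, assuming rows hold classified labels and columns hold reference labels.

```python
def per_class_accuracy(matrix, labels):
    """Return user's and producer's accuracy for each class.

    Assumes rows = classified label and columns = reference label.
    """
    n = len(matrix)
    results = {}
    for k in range(n):
        row_total = sum(matrix[k])                       # pixels classified as k
        col_total = sum(matrix[i][k] for i in range(n))  # reference pixels of k
        results[labels[k]] = {
            "users": matrix[k][k] / row_total if row_total else None,
            "producers": matrix[k][k] / col_total if col_total else None,
        }
    return results


# Hypothetical counts, not taken from the supplementary tables.
labels = ["coffee", "banana", "overstory"]
matrix = [
    [30, 5, 5],
    [10, 20, 10],
    [5, 5, 10],
]
for name, scores in per_class_accuracy(matrix, labels).items():
    print(name, scores)
```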

Our secondary classification results were similar to those of the initial classification, but ultimately did not improve classification accuracy consistently. Because of this, they were omitted from the paper, but more information on their results can be found in Appendix D, Table A5. Individual accuracy assessments for secondary classifications can be found in the Supplementary Material, Tables S10–S19.

3.3. Farmer Interview Content Analysis

We conducted a total of nine interviews, six of which were recorded on an audio recorder, following the interviewee’s consent. Using the recorded interviews and notes from the interviewees who did not consent to be recorded, we created a content matrix (Table 6) to summarize shared themes across interviews. The themes highlighted included utility, novelty, orientation, biodiversity, clarity, and land management. Farmers found the maps interesting and exciting but were unsure if they were applicable to the land management of their farms. Many farmers struggled to orient themselves, especially when landmarks the farmers were familiar with were not overtly visible in the map. Many farmers noted a lack of biodiversity or crops present in the map. Lastly, while viewing maps, many farmers noted current or future management decisions they considered. These were included in the content matrix, as they may inform future iterations or methodologies of classifications.

Table 6. Content matrix summarizing interview findings.

4. Discussion

We learned from our interviews with farmers how our maps could be improved in terms of accuracy and relevance. While diversified coffee agroecosystems have a myriad of potential land cover classes, we initially believed that fewer classification categories would support the legibility of the maps to farmers who might be unfamiliar with this format. However, many farmers noted that biodiversity and plants that they deemed important were absent from our maps. These exchanges underscore the importance of contextualizing the development of a classification workflow with local knowledge, as it can help identify critical problems that justify extra effort to provide a more relevant deliverable for farmers.

We obtained an average kappa value across all farms of 0.409, meaning that the classifiers, generally, were fair in comparison to a random classifier [29,30]. Many of the farms had a slight disagreement between overall accuracy and the kappa index; for instance, YAUC4 had an accuracy of 74% (or 0.74) and a kappa statistic of 0.51. In the case of all classification iterations in this paper, the overstory vegetation class often had more training and testing sites made of larger polygons, and therefore more pixels. While the higher amount of pixels of overstory vegetation may have skewed overall accuracy, the kappa statistic takes into account the relative impact of each class, meaning that it is not skewed by a single well-represented class [27,28,31]. It is worth noting, in this paper and otherwise, that while overall accuracy and the kappa statistic are common ways to evaluate land cover classifications in the remote sensing field, more recent literature [32,33] has highlighted that confusion matrices are not entirely reliable and need to be analyzed with some understanding that the accuracies reported are not absolute.
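The gap between overall accuracy and Kappa can be made concrete by rearranging the standard Kappa formula, kappa = (p_o − p_e) / (1 − p_e), to solve for the chance agreement p_e. The sketch below applies this to the YAUC4 values quoted above; because those values are rounded, the result is only approximate, and the function name is hypothetical.

```python
def implied_chance_agreement(overall_accuracy, kappa):
    """Solve kappa = (p_o - p_e) / (1 - p_e) for the chance agreement p_e."""
    if kappa >= 1.0:
        raise ValueError("kappa must be below 1 to solve for chance agreement")
    return (overall_accuracy - kappa) / (1.0 - kappa)


# YAUC4 values as quoted in the text (rounded, so the result is approximate).
p_e = implied_chance_agreement(0.74, 0.51)
print(round(p_e, 2))  # 0.47
```

A chance agreement of roughly 0.47 is what one might expect when a single class accounts for a large share of the reference pixels, which would be consistent with the dominance of the overstory vegetation class noted above.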

Somewhat expectedly, many of the vegetation classes (i.e., coffee, citrus, banana, palm, and overstory vegetation) were misclassified as other vegetation classes. Because these classes are spectrally similar, and because the initial classifications utilized all 10 bands, including those that had little separation between classes within the same band, it could be anticipated that there would be some confusion amongst these classes. Figure 4 illustrates the spectral similarities across vegetation training classes. Another area of confusion was between the pavement and building classes. Across many of the farms, buildings and pavements were misclassified as one another, but were less often misclassified as bare earth and vegetation.

Figure 4. The spectral profile of vegetation classes for farm UTUA2.

There exists a myriad of reasons why the land cover classifications of this paper may be considered “inaccurate”, many of which have been alluded to earlier in this discussion. One such reason may be the inability of researchers to distinguish land cover types in multispectral imagery. For instance, on many farms, coffee may be grown under the canopy cover of other vegetation. If all coffee ground control points were obscured by larger overstory vegetation, researchers would be unable to accurately draw training and testing sites. In addition, some classes present on farms, while relevant, lacked sufficient training and testing points due to their rarity. As an example, we trained the classifier to identify citrus in UTUA20, but found that the small number of citrus trees present meant testing sites were either generated on the same trees training was performed on, or testing was unable to be completed.

Our classification results could be improved with additional steps that were not available to us at the time but may benefit future studies. We were unable to conduct radiometric normalization prior to the image mosaicking process, which may have improved consistency across flights and farms [34]. While histogram matching was conducted during the mosaicking process, the resulting mosaics still had visible radiometric differences. For example, radiometric normalization could have reduced the bright spots present in one flight over ADJU7, which likely led to spectral imbalances that prevented us from successfully classifying the imagery of this farm. In addition, if radiometric normalization occurred earlier in the process, it may have been feasible to train the classifier on only one farm and then apply it across farms. This would reduce the work to create many training sites across farms in order to compensate for the radiometric discrepancies. Additionally, classifications may be improved by using ground control points in orthomosaic creation. During the processing of imagery in Agisoft Metashape, only the internal UA GNSS system was used to georeference raw images. By including ground control points collected with a more precise external GPS receiver in the image processing methodology, multispectral imagery may have been better aligned with ground control points collected for building training sites. More broadly speaking, the inclusion of more GCPs in creating training and testing sites may also improve classification accuracy. However, for some research, the time and labor needed to complete more ground truthing may not be justified by an increased overall accuracy.

Analyzing the interview recordings and notes allowed for a more nuanced understanding of the remote sensing work conducted in this study. It became very apparent during interviews that farmers and land managers were extremely excited to view, talk about, and keep the map printouts. Many remarked that the images of their farms were beautiful and were excited to display the printouts for others to see but were unsure of how the maps or products derived from the maps could be implemented in regular management. One farmer noted that they planned to hang imagery in a cafe for visitors to see, but when questioned about the utility of the map in their work, they indicated that they would instead be more interested in utilizing the drone to evenly distribute pesticides.

While the beauty and excitement of images and landcover classification maps are often overlooked as an aspect of utility in the remote sensing field, we understood this to be an extremely important subtheme, as it became more evident that farmers and researchers could build further rapport by addressing the beauty of the images and the farms that land managers work so hard to maintain. Connection building in the context of this paper is extremely relevant, as land cover classifications are regarded as an iterative process [17]. By fostering better connections between researchers and farmers, we can more intimately understand the ways in which our work fits into farmers’ management and make adjustments to maps accordingly. In many of our interviews, interviewees often pointed out a lack of diversity or missing landmarks. Without having conversations with land managers, researchers are limited to making changes that may not be useful to farmers and instead only serve to increase classification accuracies for schemas that were flawed themselves.

Farmers who told us that the maps lacked relevant information also had more difficulty orienting themselves during interviews. One farmer remarked that he had often regarded his land as a square parcel, so seeing it in the roughly rectangular footprint of the captured imagery disoriented him. He also noted that he might have been able to orient himself in spite of this perception, but only if landmarks he passed daily had been included and labeled. When farmers cannot orient themselves to the imagery, implementing the maps in management becomes even less feasible.

While many farmers pointed out that crop and vegetation diversity was absent from the land cover classification map, we felt that sharing a simpler map first actually enhanced the feedback we received and farmers’ own understanding of the maps. Because the map shared was simpler, farmers noted specific areas where they were interested in seeing more detail, where they were practicing a given land management technique, or where they had a few personally relevant crops. In addition, we believe that the lack of detail allowed for quicker orientation and a clearer understanding of the maps. This was extremely important, as we understood that land managers had never seen their land displayed in this manner and needed some time to relate the imagery to land they were intimately familiar with.

Including interviews as part of this project greatly enhanced the findings of this paper and would enhance any future work in similar settings. Colloredo-Mansfeld et al. found similar results in their work, noting that participatory drone mapping allowed researchers to ascertain broader and more relevant information about land management [35]. In addition, Colloredo-Mansfeld et al. found that producing land cover classification maps allowed them to understand sensitive areas of farms (e.g., where young plants were growing) and establish rapport between researchers and farmers. Following Colloredo-Mansfeld et al. [35], it is clear that our project would benefit from more knowledge sharing between researchers and farmers. One farmer noted during our interview that while she was extremely excited about participating in research, she was disappointed that she previously had no proof of the drones being on the property to share with a friend. By leaving her with the printout of the map and a description of the work we had done, the farmer may be more likely to continue working with researchers. In return, we received valuable feedback on the crops and vegetation relevant to her on her property. Similar land cover classification projects would benefit from additional iterations incorporating such feedback and knowledge-sharing.

The detailed nature of the high-resolution imagery was seemingly part of the interest that farmers had in interacting with the printouts. While the pixel-based supervised land cover classifications had fair accuracy, switching to an object-based classification would likely increase the overall average accuracy, as it is documented that object-based classifications perform better, especially at finer resolutions [36]. However, the fine-resolution data presented in this paper came at a cost of increased processing power and time requirements for each step of image processing and classification. Object-based classifications may require even more computational power, especially at the segmentation step [37].

Classification maps may also be enhanced with the addition of elevation or surface data, like LiDAR data that are collected together with the multispectral imagery, and could be the subject of collaborative data fusion projects. Farmers interviewed also often noted that they oriented themselves using peaks and valleys present on farms, something not reflected in the printout of the multispectral imagery or land cover classification maps. However, including data of this type may mandate a more dynamic format in which to present maps to farmers. While digital elevation and surface models are something many in remote sensing are familiar with, viewing elevation data on a 2D plane may still present some challenges for those who have not seen such maps before. This potentially could be remedied by creating a 3D model of the surface or elevation data and viewing it together with farmers on a computer.

5. Conclusions

This study was conducted to better understand pixel-based supervised classifications of diverse ecosystems, and the utility these classifications have for researchers and land managers. We applied this exploration to coffee agroecosystems in Puerto Rico, contributing to the growing literature on understanding the role of UAS-collected fine-resolution imagery. We found that while our land cover classifications were only moderately accurate, increased accuracy could be achieved by utilizing different methodologies and better ground truths. In addition, we concluded that while farmers were unsure about using the maps as a farm management tool, they were still excited about the technology being applied to their land and grateful to have a better understanding of the research being conducted. We also found that sharing our maps with farmers, even with their flaws, generated better communication between researchers and farmers and created the opportunity to “be attentive to the ‘social position of the new map and how it engages institutions’” [17,38], rather than reinforcing historical power dynamics.

However, there still exist many opportunities to expand and improve this research. Improving remote sensing methodologies includes further exploring object-based classifications in the context of Puerto Rican coffee agroecosystems, and improving farmer collaboration could include viewing more map iterations after classifications have been adjusted to incorporate farmer feedback. Both remote sensing and interview methodologies would be improved by visiting farmers and their land more often. We argue that this work encourages further exploration of fine-resolution remote sensing in coffee agroecosystems, as well as further work alongside land managers to create classification schemes and products better suited to their management needs.

Supplementary Materials

Author Contributions

Conceptualization, G.K. and S.B.; methodology, G.K., S.B. and K.A.A.; software, G.K., S.B., K.A.A. and R.S.; validation, G.K., N.H., K.L. and R.G.; formal analysis, G.K., S.B., N.H. and I.P.; investigation, G.K., N.H., K.L., R.G., J.C., B.H.N. and K.A.A.; resources, S.B., K.A.A., R.S. and I.P.; data curation, G.K. and S.B.; writing—original draft preparation, G.K.; writing—review and editing, all authors; visualization, G.K.; supervision, S.B.; project administration, S.B., K.A.A. and I.P.; funding acquisition, S.B., K.A.A. and I.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the USDA National Institute for Food and Agriculture (USDA NIFA), grant number 2019-67022-29929.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Acknowledgments

We would like to acknowledge the farmers who gave their time and effort to be interviewed. We would also like to acknowledge Jonah Pollens-Dempsey for his role in troubleshooting and editing.

Conflicts of Interest

The authors declare no conflicts of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A

English Version of Interview

Note: The interviews will be conducted in Spanish, but we have included the English version for IRB purposes.

“Hello, my name is Nayethzi Hernandez and this is my colleague Gwen Klenke. We’re both graduate students at the University of Michigan. And this project is in collaboration with Ivette Perfecto, who you know. Thank you for taking the time to participate in this study. As Warren let you know, our team is looking into diverse Puerto Rican coffee farms and agroecology systems. As someone who is so knowledgeable, I really appreciate your time. Through interviews, we’re just looking for generalizable information, and none of this will be identifiable. If that’s still okay with you it’ll take us roughly 1 h. Before we begin I want to confirm that it’s okay that I record our conversation. If anything comes up during the interview, please just let me know.

Excellent! Let’s begin talking a bit about your land.

Question group 1: Land history and farm management

Can you tell me a bit about how you started growing coffee?

When it comes to your farm, what are your goals with your crops?

Could you tell me a little bit about how you decided to put which crops where?

What type of knowledge or techniques influence how you manage the farm?

Could you tell me about some of the environmental changes that you’ve experienced while farming this land?

What are some goals you have for your farm?

Question group 2: Show farmers the map

*Translate what Gwen says about how the maps are made*

When you first look over the map what are some of your thoughts?

Question group 3: Map review

After looking over the map, what are areas of the map that are of interest to you?

Are there any changes you would like to consider when looking over this map?

If this technology was available to you would it be helpful for farm management?

If it’s helpful to you, how often would you want an updated map?

Closing:

Thank you so much for your insights! We really appreciate your time. We invite you to keep the map if you’d like it. Before we finish, is there anything you’d like to ask or say to us regarding the map or the interview? I will provide you with my contact information if you have any questions for me about this study, or anything else”.

Appendix B

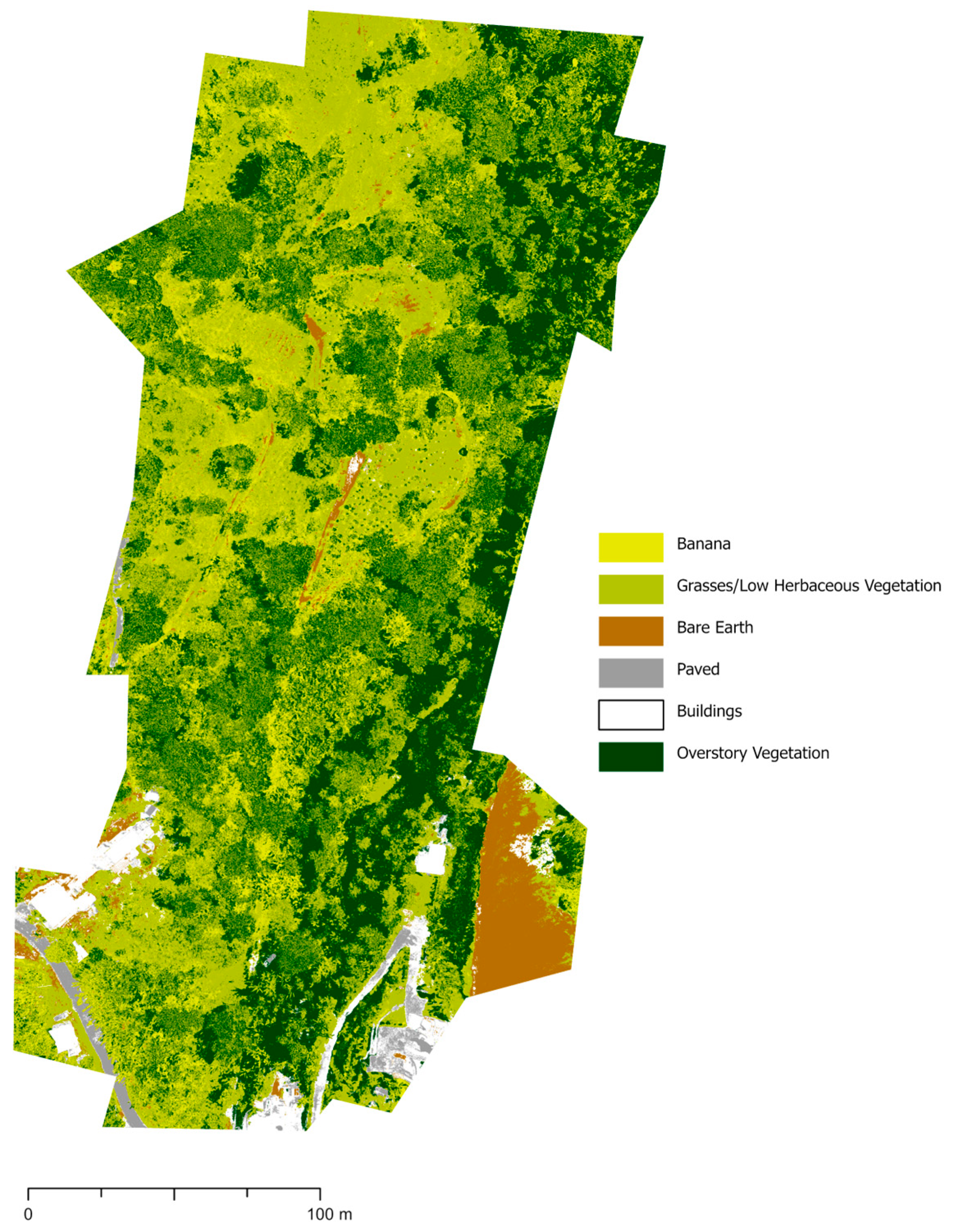

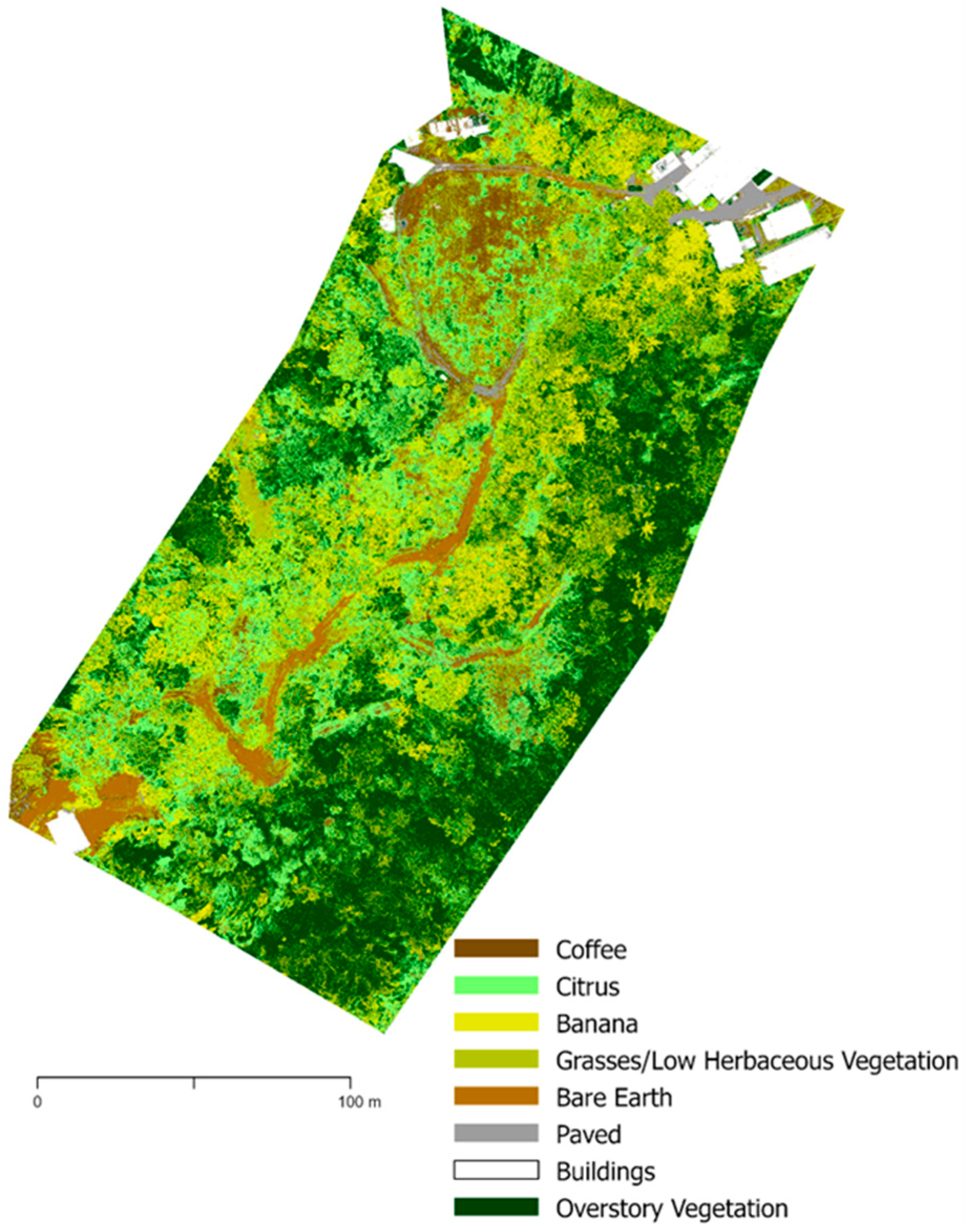

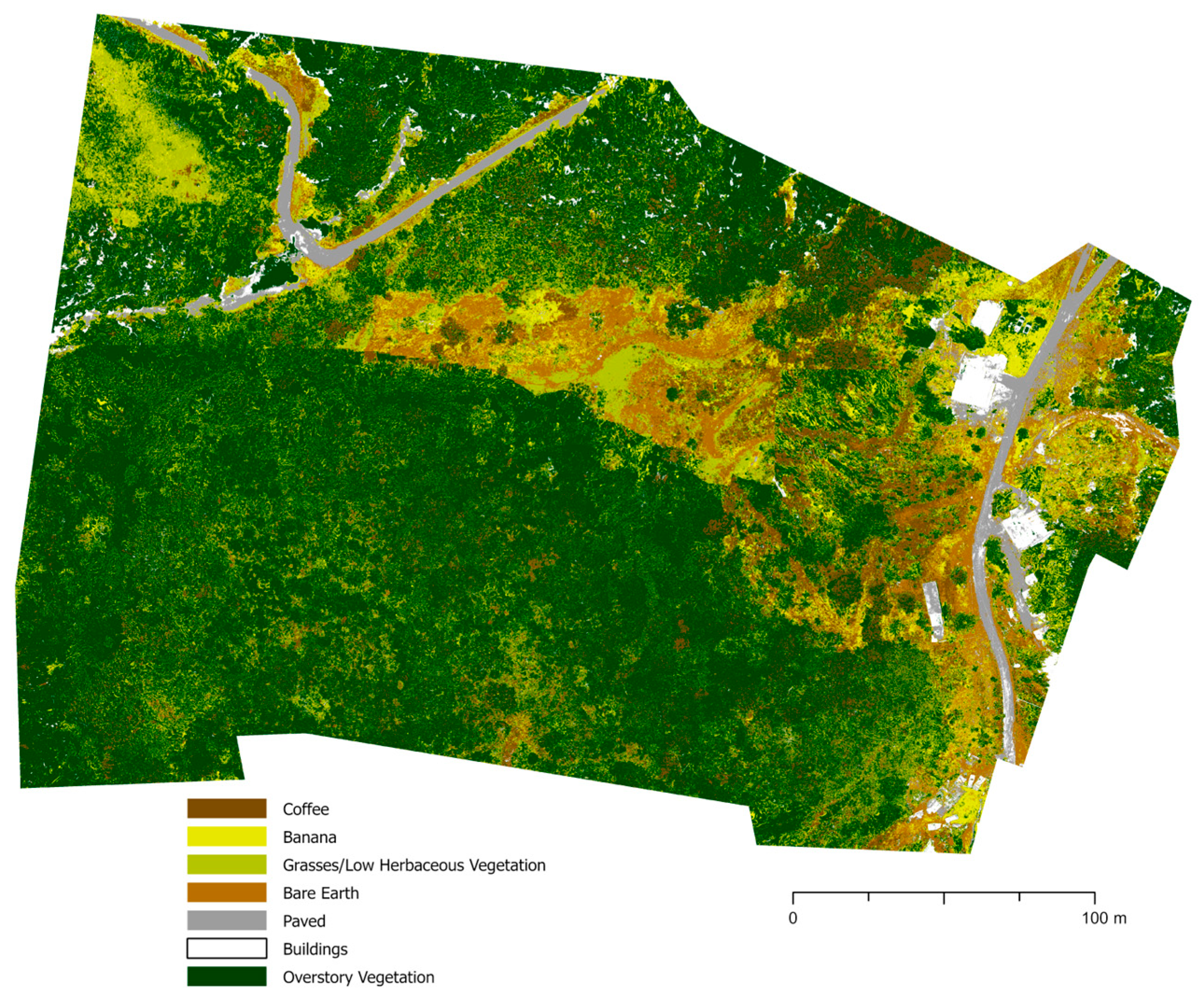

Figure A1. Land cover classification of farm UTUA16 using 2022 multispectral imagery.

Figure A2. Land cover classification of farm UTUA18 using 2022 multispectral imagery.

Figure A3. Land cover classification of farm UTUA20 using 2022 multispectral imagery.

Figure A4. Land cover classification of farm UTUA30 using 2022 multispectral imagery.

Figure A5. Land cover classification of farm YAUC4 using 2022 multispectral imagery.

Figure A6. Land cover classification of farm ADJU8 using 2022 multispectral imagery.

Figure A7. Land cover classification of farm JAYU2_3 using 2022 multispectral imagery.

Appendix C

Table A1. Number of training sites for each class in each farm. Each site is a polygon drawn around one representative site.

| Farm | Coffee | Citrus | Banana | Palm | Grasses/Low Herb | Bare Earth | Paved | Buildings | Water | Overstory Veg | Total (Sites/Polygons) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| UTUA2 | 16 | 9 | 3 | 3 | 6 | 6 | 2 | 4 | 0 | 1 | 50 |
| UTUA16 | 3 | 0 | 2 | 5 | 1 | 1 | 1 | 2 | 1 | 2 | 18 |
| UTUA18 | 0 | 0 | 4 | 0 | 3 | 3 | 2 | 2 | 0 | 3 | 17 |
| UTUA18_obj | 6 | 0 | 2 | 0 | 2 | 3 | 3 | 3 | 0 | 2 | 21 |
| UTUA20 | 4 | 7 | 4 | 0 | 2 | 4 | 2 | 3 | 0 | 2 | 28 |
| UTUA30 | 10 | 0 | 8 | 0 | 2 | 4 | 4 | 4 | 0 | 4 | 36 |
| YAUC4 | 9 | 0 | 6 | 0 | 8 | 5 | 4 | 3 | 0 | 3 | 38 |
| ADJU8 | 14 | 0 | 14 | 0 | 10 | 10 | 3 | 6 | 2 | 7 | 66 |
| JAYU2_3 | 22 | 0 | 14 | 11 | 10 | 12 | 7 | 10 | 2 | 11 | 99 |

Table A2. Number of pixels in training sites per class in each farm classification.

| Farm | Coffee | Citrus | Banana | Palm | Grasses/Low Herb | Bare Earth | Paved | Buildings | Water | Overstory Veg | Total | Total Image Pixels |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UTUA2 | 15,420 | 89,118 | 17,989 | 148,768 | 60,581 | 47,051 | 20,753 | 185,462 | 0 | 130,191 | 715,333 | 46,438,992 |
| UTUA16 | 22,218 | 0 | 219,318 | 164,487 | 46,675 | 13,170 | 4615 | 85,679 | 26,321 | 448,878 | 1,031,361 | 40,464,036 |
| UTUA18 | 0 | 0 | 29,439 | 0 | 21,740 | 113,898 | 8102 | 54,437 | 0 | 246,623 | 474,239 | 45,370,368 |
| UTUA18_obj | 19,621 | 0 | 14,374 | 0 | 52,909 | 161,456 | 18,481 | 60,649 | 0 | 427,093 | 754,583 | 45,370,368 |
| UTUA20 | 4467 | 24,900 | 218,041 | 0 | 28,145 | 20,922 | 13,867 | 176,316 | 0 | 346,139 | 832,797 | 37,109,000 |
| UTUA30 | 9420 | 0 | 45,587 | 0 | 37,646 | 18,861 | 23,754 | 55,815 | 0 | 1,339,405 | 1,530,488 | 34,595,745 |
| YAUC4 | 13,393 | 0 | 74,659 | 0 | 63,188 | 49,843 | 107,538 | 142,527 | 0 | 983,011 | 1,434,159 | 49,498,494 |
| ADJU8 | 36,804 | 0 | 10,892 | 0 | 96,113 | 48,835 | 23,161 | 99,930 | 45,674 | 424,707 | 786,116 | 175,322,016 |
| JAYU2_3 | 60,510 | 0 | 42,551 | 47,663 | 361,668 | 163,092 | 57,446 | 142,129 | 10,865 | 635,097 | 1,521,021 | 184,144,180 |

Table A3. Number of testing sites per class for each farm. Each site is a polygon drawn around one representative site.

| Farm | Coffee | Citrus | Banana | Palm | Grasses/Low Herb | Bare Earth | Paved | Buildings | Water | Overstory Veg | Totals (Sites/Polygons) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| UTUA2 | 9 | 4 | 4 | 3 | 5 | 5 | 3 | 3 | 0 | 2 | 38 |
| UTUA16 | 0 | 0 | 3 | 3 | 2 | 3 | 2 | 2 | 1 | 2 | 18 |
| UTUA18 | 7 | 1 | 3 | 0 | 4 | 9 | 5 | 5 | 0 | 4 | 38 |
| UTUA18_obj | 7 | 1 | 3 | 0 | 4 | 9 | 5 | 5 | 0 | 4 | 38 |
| UTUA20 | 6 | 0 | 4 | 1 | 3 | 9 | 5 | 5 | 0 | 3 | 36 |
| UTUA30 | 11 | 0 | 4 | 2 | 3 | 3 | 4 | 3 | 0 | 2 | 32 |
| YAUC4 | 11 | 0 | 5 | 3 | 6 | 7 | 3 | 2 | 0 | 3 | 40 |
| ADJU8 | 16 | 0 | 13 | 0 | 10 | 10 | 3 | 3 | 2 | 4 | 61 |
| JAYU2_3 | 22 | 0 | 15 | 4 | 12 | 12 | 7 | 4 | 1 | 5 | 82 |

Table A4. Number of pixels in testing sites per class for each farm.

| Farm | Coffee | Citrus | Banana | Palm | Grasses/Low Herb | Bare Earth | Paved | Buildings | Water | Overstory Veg | Totals | Total Image Pixels |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| UTUA2 | 3810 | 17,091 | 18,297 | 80,214 | 54,190 | 10,729 | 38,281 | 125,668 | 0 | 203,356 | 551,636 | 46,438,992 |
| UTUA16 | 0 | 0 | 7781 | 61,145 | 41,312 | 9343 | 9845 | 11,010 | 37,650 | 233,632 | 411,718 | 40,464,036 |
| UTUA18 | 2493 | 4121 | 59,215 | 0 | 28,739 | 22,514 | 32,468 | 80,595 | 0 | 260,498 | 490,643 | 45,370,368 |
| UTUA18_obj | 2493 | 4121 | 59,215 | 0 | 28,739 | 22,514 | 32,468 | 80,595 | 0 | 260,498 | 490,643 | 45,370,368 |
| UTUA20 | 2793 | 0 | 21,690 | 24,840 | 9667 | 23,540 | 6096 | 99,500 | 0 | 239,493 | 427,619 | 37,109,000 |
| UTUA30 | 2483 | 0 | 2546 | 38,088 | 35,111 | 22,465 | 22,665 | 31,082 | 0 | 137,590 | 292,030 | 34,595,745 |
| YAUC4 | 7746 | 0 | 34,838 | 79,386 | 10,584 | 52,144 | 24,292 | 5484 | 0 | 367,339 | 581,813 | 49,498,494 |
| ADJU8 | 18,869 | 0 | 43,640 | 0 | 49,656 | 69,418 | 14,798 | 51,844 | 56,798 | 306,815 | 611,838 | 175,322,016 |
| JAYU2_3 | 14,095 | 0 | 41,412 | 28,680 | 51,561 | 35,116 | 33,236 | 337,495 | 16,366 | 296,676 | 854,637 | 184,144,180 |

Appendix D

Table A5. Accuracy of secondary classifications. The table details the overall accuracy of each farm along with Cohen’s Kappa statistic.

| Iteration | Farm | Overall Accuracy (%) | Kappa |
|---|---|---|---|
| B | UTUA2 | 51.3 | 0.399 |
| B | UTUA16 | 51.6 | 0.389 |
| B | UTUA18 | 55.3 | 0.425 |
| B | UTUA20 | 52.7 | 0.395 |
| C | UTUA2 | 45.4 | 0.361 |
| C | UTUA16 | 51.2 | 0.376 |
| C | UTUA18 | 46.9 | 0.324 |
| D | UTUA20 | 50.9 | 0.372 |
| E | UTUA2 | 47.3 | 0.380 |
| F | UTUA2 | 45.6 | 0.358 |

Secondary classifications were completed using several alternate band combinations, but overall, the new layer stacks did not lead to an increase in accuracy under these methods. With the exception of three classifications (Iteration B of farm UTUA16, Iteration B of farm UTUA20, and Iteration C of farm UTUA16), overall accuracies of secondary classifications were lower than the initial classification, although the differences in all cases were only marginal. When considering Iteration A accuracies alongside Iterations B–F, the average overall accuracies could not be directly compared because not all farms initially classified were used in the secondary classifications. However, when comparing Iteration A to each of Iterations B, C, and D, and filtering to only the relevant farms, the accuracy for Iteration A maintained a higher overall average than the respective secondary classifications. The lowered accuracies of secondary classifications were somewhat anticipated. While it has been documented that ancillary data work well to enhance object-based classifications [37], the effects are not as strong for pixel-based classifications because pixel-based classifications lack “objects” that ancillary data can contextualize [37].
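
The filtered comparison described above can be reproduced from the overall accuracies reported in Table 5 (Iteration A) and Table A5 (Iterations B to D). The short sketch below is only an arithmetic check on those published values; the variable and function names are illustrative, and the dictionaries simply restate the tables.

```python
# Overall accuracies (%) restated from Table 5 (Iteration A)
# and Table A5 (Iterations B, C, and D).
iteration_a = {"UTUA2": 57.0, "UTUA16": 49.4, "UTUA18": 58.4, "UTUA20": 52.4}
secondary = {
    "B": {"UTUA2": 51.3, "UTUA16": 51.6, "UTUA18": 55.3, "UTUA20": 52.7},
    "C": {"UTUA2": 45.4, "UTUA16": 51.2, "UTUA18": 46.9},
    "D": {"UTUA20": 50.9},
}

def mean(values):
    """Arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)

comparison = {}
for name, accuracies in secondary.items():
    # Restrict Iteration A to the farms used in this secondary iteration.
    a_mean = mean(iteration_a[farm] for farm in accuracies)
    s_mean = mean(accuracies.values())
    comparison[name] = (round(a_mean, 1), round(s_mean, 1))
    print(f"Iteration {name}: A = {a_mean:.1f}%, {name} = {s_mean:.1f}%")
```

On the matching farms, Iteration A averages 54.3% against 52.7% for Iteration B, 54.9% against 47.8% for Iteration C, and 52.4% against 50.9% for Iteration D, consistent with the statement that Iteration A kept the higher average in each case.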

References

1. Foley, J.A.; Ramankutty, N.; Brauman, K.A.; Cassidy, E.S.; Gerber, J.S.; Johnston, M.; Mueller, N.D.; O’Connell, C.; Ray, D.K.; West, P.C.; et al. Solutions for a Cultivated Planet. Nature 2011, 478, 337–342.

2. Altieri, M. Green Deserts: Monocultures and Their Impacts on Biodiversity. In Red Sugar, Green Deserts: Latin American Report on Monocultures and Violations of the Human Rights to Adequate Food and Housing, to Water, to Land and to Territory; FIAN International/FIAN Sweden: Stockholm, Sweden, 2009; pp. 67–76. ISBN 978-91-977188-3-7.

3. Iverson, A.L.; Gonthier, D.J.; Pak, D.; Ennis, K.K.; Burnham, R.J.; Perfecto, I.; Ramos Rodriguez, M.; Vandermeer, J.H. A Multifunctional Approach for Achieving Simultaneous Biodiversity Conservation and Farmer Livelihood in Coffee Agroecosystems. Biol. Conserv. 2019, 238, 108179.

4. Mayorga, I.; Vargas de Mendonça, J.L.; Hajian-Forooshani, Z.; Lugo-Perez, J.; Perfecto, I. Tradeoffs and Synergies among Ecosystem Services, Biodiversity Conservation, and Food Production in Coffee Agroforestry. Front. For. Glob. Chang. 2022, 5, 690164.

5. Saj, S.; Torquebiau, E.; Hainzelin, E.; Pages, J.; Maraux, F. The Way Forward: An Agroecological Perspective for Climate-Smart Agriculture. Agric. Ecosyst. Environ. 2017, 250, 20–24.

6. Perfecto, I.; Armbrecht, I. The Coffee Agroecosystem in the Neotropics: Combining Ecological and Economic Goals. In Tropical Agroecosystems; CRC Press: Boca Raton, FL, USA, 2003; pp. 159–194.

7. Jha, S.; Bacon, C.M.; Philpott, S.M.; Ernesto Méndez, V.; Läderach, P.; Rice, R.A. Shade Coffee: Update on a Disappearing Refuge for Biodiversity. BioScience 2014, 64, 416–428.

8. Moguel, P.; Toledo, V.M. Biodiversity Conservation in Traditional Coffee Systems of Mexico. Conserv. Biol. 1999, 13, 11–21.

9. Perfecto, I.; Rice, R.A.; Greenberg, R.; Van der Voort, M.E. Shade Coffee: A Disappearing Refuge for Biodiversity: Shade Coffee Plantations Can Contain as Much Biodiversity as Forest Habitats. BioScience 1996, 46, 598–608.

10. ITC (International Trade Center). Coffee Exporter’s Guide, 3rd ed.; ITC: Geneva, Switzerland, 2011; p. 247.

11. Rice, R.A. A Place Unbecoming: The Coffee Farm of Northern Latin America. Geogr. Rev. 1999, 89, 554–579.

12. Harvey, C.A.; Pritts, A.A.; Zwetsloot, M.J.; Jansen, K.; Pulleman, M.M.; Armbrecht, I.; Avelino, J.; Barrera, J.F.; Bunn, C.; García, J.H.; et al. Transformation of Coffee-Growing Landscapes across Latin America. A Review. Agron. Sustain. Dev. 2021, 41, 62.

13. Borkhataria, R.; Collazo, J.A.; Groom, M.J.; Jordan-Garcia, A. Shade-Grown Coffee in Puerto Rico: Opportunities to Preserve Biodiversity While Reinvigorating a Struggling Agricultural Commodity. Agric. Ecosyst. Environ. 2012, 149, 164–170.

14. Jay, S.; Baret, F.; Dutartre, D.; Malatesta, G.; Héno, S.; Comar, A.; Weiss, M.; Maupas, F. Exploiting the Centimeter Resolution of UAV Multispectral Imagery to Improve Remote-Sensing Estimates of Canopy Structure and Biochemistry in Sugar Beet Crops. Remote Sens. Environ. 2019, 231, 110898.

15. Cerasoli, S.; Campagnolo, M.; Faria, J.; Nogueira, C.; da Caldeira, M.C. On Estimating the Gross Primary Productivity of Mediterranean Grasslands under Different Fertilization Regimes Using Vegetation Indices and Hyperspectral Reflectance. Biogeosciences 2018, 15, 5455–5471.

16. Librán-Embid, F.; Klaus, F.; Tscharntke, T.; Grass, I. Unmanned Aerial Vehicles for Biodiversity-Friendly Agricultural Landscapes—A Systematic Review. Sci. Total Environ. 2020, 732, 139204.

17. Laso, F.J.; Arce-Nazario, J.A. Mapping Narratives of Agricultural Land-Use Practices in the Galapagos. In Island Ecosystems: Challenges to Sustainability; Walsh, S.J., Mena, C.F., Stewart, J.R., Muñoz Pérez, J.P., Eds.; Social and Ecological Interactions in the Galapagos Islands; Springer International Publishing: Cham, Switzerland, 2023; pp. 225–243. ISBN 978-3-031-28089-4.

18. Bersaglio, B.; Enns, C.; Goldman, M.; Lunstrum, L.; Millner, N. Grounding Drones in Political Ecology: Understanding the Complexities and Power Relations of Drone Use in Conservation. Glob. Soc. Chall. J. 2023, 2, 47–67.

19. National Weather Service. PR and USVI Normals. Available online: https://www.weather.gov/sju/climo_pr_usvi_normals (accessed on 22 June 2023).

20. Helmer, E.H.; Ramos, O.; del M. López, T.; Quiñónez, M.; Diaz, W. Mapping the Forest Type and Land Cover of Puerto Rico, a Component of the Caribbean Biodiversity Hotspot. Caribb. J. Sci. 2002, 38, 165–183.

21. Alvarez-Torres, B. How Do the Various Soil Types in Puerto Rico Support Different Crops? Available online: https://sustainable-secure-food-blog.com/2020/07/22/how-do-the-various-soil-types-in-puerto-rico-support-different-crops/ (accessed on 22 June 2023).

22. Trimble R1 GNSS Receiver—Geomaticslandsurveying. Available online: https://geomaticslandsurveying.com/product/trimble-r1/ (accessed on 15 May 2024).

23. Bad Elf. Bad Elf Flex. Available online: https://bad-elf.com/pages/flex (accessed on 14 June 2023).

24. MicaSense RedEdge MX Processing Workflow (Including Reflectance Calibration) in Agisoft Metashape Professional. Available online: https://agisoft.freshdesk.com/support/solutions/articles/31000148780-micasense-rededge-mx-processing-workflow-including-reflectance-calibration-in-agisoft-metashape-pro (accessed on 18 July 2023).

25. Mountrakis, G.; Im, J.; Ogole, C. Support Vector Machines in Remote Sensing: A Review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

26. Train Support Vector Machine Classifier (Spatial Analyst)—ArcGIS Pro | Documentation. Available online: https://pro.arcgis.com/en/pro-app/latest/tool-reference/spatial-analyst/train-support-vector-machine-classifier.htm (accessed on 4 July 2023).

27. Congalton, R.G. A Review of Assessing the Accuracy of Classifications of Remotely Sensed Data. Remote Sens. Environ. 1991, 37, 35–46.

28. Rosenfield, G.H.; Fitzpatrick-Lins, K. A Coefficient of Agreement as a Measure of Thematic Classification Accuracy. Photogramm. Eng. 1986, 52, 223–227.

29. Fleiss, J.L.; Levin, B.; Paik, M.C. Statistical Methods for Rates and Proportions, 3rd ed.; Wiley Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 2003; ISBN 978-0-471-52629-2.

30. Landis, J.R.; Koch, G.G. An Application of Hierarchical Kappa-Type Statistics in the Assessment of Majority Agreement among Multiple Observers. Biometrics 1977, 33, 363–374.

31. Manel, S.; Williams, H.C.; Ormerod, S.J. Evaluating Presence–Absence Models in Ecology: The Need to Account for Prevalence. J. Appl. Ecol. 2001, 38, 921–931.

32. Foody, G.M. Status of Land Cover Classification Accuracy Assessment. Remote Sens. Environ. 2002, 80, 185–201.

33. Olofsson, P.; Foody, G.M.; Herold, M.; Stehman, S.V.; Woodcock, C.E.; Wulder, M.A. Good Practices for Estimating Area and Assessing Accuracy of Land Change. Remote Sens. Environ. 2014, 148, 42–57.

34. Tuia, D.; Marcos, D.; Camps-Valls, G. Multi-Temporal and Multi-Source Remote Sensing Image Classification by Nonlinear Relative Normalization. ISPRS J. Photogramm. Remote Sens. 2016, 120, 1–12.

35. Colloredo-Mansfeld, M.; Laso, F.J.; Arce-Nazario, J. Drone-Based Participatory Mapping: Examining Local Agricultural Knowledge in the Galapagos. Drones 2020, 4, 62.

36. Baker, B.A.; Warner, T.A.; Conley, J.F.; McNeil, B.E. Does Spatial Resolution Matter? A Multi-Scale Comparison of Object-Based and Pixel-Based Methods for Detecting Change Associated with Gas Well Drilling Operations. Int. J. Remote Sens. 2013, 34, 1633–1651.

37. Whiteside, T.G.; Boggs, G.S.; Maier, S.W. Comparing Object-Based and Pixel-Based Classifications for Mapping Savannas. Int. J. Appl. Earth Obs. Geoinf. 2011, 13, 884–893.

38. Kim, A.M. Critical Cartography 2.0: From “Participatory Mapping” to Authored Visualizations of Power and People. Landsc. Urban Plan. 2015, 142, 215–225.

Figure 1. Study sites within the central-western coffee growing region of Puerto Rico. Municipalities layer from UN Office for the Coordination of Humanitarian Affairs. The figure is projected to “StatePlane Puerto Rico Virgin Isl FIPS 5200 (Meters)”, a version of the Lambert conformal conic projection, and has a datum of NAD 1983.

Figure 2. (a) Depiction of a single-grid flight pattern; (b) depiction of a double-grid flight pattern.

Figure 3. Example land cover classification of farm UTUA2 using 2022 multispectral imagery. All maps shown are projected in the coordinate system “StatePlane Puerto Rico Virgin Isl FIPS 5200 (Meters)” datum of NAD 1983.

Table 1. Information on farm size, aspect, slope, and classification based on Moguel and Toledo’s (1999) coffee growing gradient.

| Farm | Size (ha) | Aspect | Median Slope (°) | Classification |
|---|---|---|---|---|
| UTUA2 | 1.64 | West-facing | 7 | Commercial polyculture |
| UTUA16 | 0.96 | South-facing | 12 | Traditional polyculture |
| UTUA18 | 2.13 | East-facing | 16 | Traditional polyculture |
| UTUA20 | 1.63 | South-facing | 18 | Commercial polyculture |
| UTUA30 | 0.82 | West-facing | 25 | Traditional polyculture |
| YAUC4 | 2.47 | North-facing | 12 | Traditional polyculture |
| ADJUCP | 3.45 | North-facing | 12 | Commercial polyculture |
| ADJU8 | 41.97 | East-facing | 16 | Shaded monoculture |
| JAYU2_3 | 56.05 | South-facing | 17 | Shaded monoculture |

Table 2. Spectral band information for the MicaSense RedEdge-MX Dual Camera Imaging System as compared to Sentinel-2A MSI and Landsat 8 OLI.

| Sentinel-2A MSI Spectral Region | Sentinel-2A MSI Wavelength Range (nm) | Landsat 8 OLI Spectral Region | Landsat 8 OLI Wavelength Range (nm) | MicaSense RedEdge-MX Dual Spectral Region | MicaSense RedEdge-MX Dual Wavelength Range (nm) |
|---|---|---|---|---|---|
| Blue | 458–523 | Blue | 435–451 | Blue | 430–458 |
| Green peak | 543–578 | Blue | 452–512 | Blue | 459–491 |
| Red | 650–680 | Green | 533–590 | Green | 524–538 |
| Red edge | 698–713 | Red | 636–673 | Green | 546.5–573.5 |
| Red edge | 733–748 | NIR | 851–879 | Red | 642–658 |
| Red edge | 773–793 | SWIR1 | 1566–1651 | Red | 661–675 |
| NIR | 785–899 | SWIR2 | 2107–2294 | Red Edge | 700–719 |
| NIR narrow | 855–875 | | | Red Edge | 711–723 |
| SWIR | 1565–1655 | | | Red Edge | 731–749 |
| SWIR | 2100–2280 | | | NIR | 814.5–870.5 |

Table 3. Improved classification iterations applied on 2022 farm imagery.

| Iteration Name | Multispectral Bands | Principal Components | Other Layers | Farms Layer Stack Was Performed on |
|---|---|---|---|---|
| Iteration A | 1–10 | - | - | UTUA2, UTUA16, UTUA18, UTUA20, UTUA30, YAUC4, ADJU8, JAYU2 |
| Iteration B | - | 1–10 | - | UTUA2, UTUA16, UTUA18, UTUA20 |
| Iteration C | 5–7 | 1–3 | - | UTUA2, UTUA16, UTUA18 |
| Iteration D | 5–8 | 1–3 | - | UTUA20 |
| Iteration E | 5–10 | 1, 2 | - | UTUA2 |
| Iteration F | 5–7 | 1–3 | NDVI | UTUA2 |
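
To make the layer stacks in Table 3 more concrete, the sketch below assembles a stack resembling Iteration F (bands 5–7, principal components 1–3, and NDVI) from a synthetic 10-band image. It is an illustration only and not the authors' workflow: the image values are random, the helper names are hypothetical, and the positions of the red and near-infrared bands within the stack are assumptions, since the band numbering is not given here.

```python
import numpy as np

def ndvi(red, nir):
    """Normalized difference vegetation index; 0 where red + nir == 0."""
    red = np.asarray(red, dtype=float)
    nir = np.asarray(nir, dtype=float)
    total = nir + red
    return np.divide(nir - red, total, out=np.zeros_like(total), where=total != 0)

def principal_components(stack, n_components):
    """Project a (bands, rows, cols) stack onto its first principal components."""
    bands, rows, cols = stack.shape
    pixels = stack.reshape(bands, -1).T            # (pixels, bands)
    centered = pixels - pixels.mean(axis=0)
    # Rows of vt are the principal axes, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    scores = centered @ vt[:n_components].T        # (pixels, n_components)
    return scores.T.reshape(n_components, rows, cols)

# Synthetic 10-band image with made-up reflectance values.
rng = np.random.default_rng(1)
image = rng.uniform(0.01, 0.6, size=(10, 40, 40))
RED, NIR = 5, 9  # assumed zero-based positions of a red and the NIR band

pcs = principal_components(image, n_components=3)
layer_stack = np.concatenate(
    [
        image[4:7],                                # bands 5-7 (one-based)
        pcs,                                       # principal components 1-3
        ndvi(image[RED], image[NIR])[np.newaxis],  # NDVI as a single layer
    ],
    axis=0,
)
```

The resulting array has seven layers, which could then be passed to a classifier in place of the original ten bands.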

Table 4. The number of flights flown and ground control points collected by farm.

| Farms | Number of Flights | Grid Pattern | Ground Control Points | Auxiliary Ground Control Points |
|---|---|---|---|---|
| UTUA2 | 1 | Double-grid | 112 | 142 |
| UTUA16 | 1 | Double-grid | 20 | 52 |
| UTUA18 | 1 | Double-grid | 32 | 62 |
| UTUA20 | 1 | Double-grid | 41 | 80 |
| UTUA30 | 2 | Double-grid | 24 | 51 |
| YAUC4 | 1 | Double-grid | 28 | 51 |
| ADJU8 | 7 | Single-grid | 44 | 64 |
| JAYU2_3 | 8 | Single-grid | 63 | 170 |
| Total GCPs | | | 364 | 672 |

Table 5. Accuracy of farm classification using 2022 imagery. The table details the overall accuracy of each farm along with Cohen’s Kappa statistic.

| Farm | Overall Accuracy (%) | Kappa (κ) |
|---|---|---|
| UTUA2 | 57.0 | 0.463 |
| UTUA16 | 49.4 | 0.369 |
| UTUA18 | 58.4 | 0.447 |
| UTUA18_obj | 36.8 | 0.221 |
| UTUA20 | 52.4 | 0.388 |
| UTUA30 | 51.3 | 0.391 |
| YAUC4 | 74.0 | 0.509 |
| ADJU8 | 53.5 | 0.463 |
| JAYU2_3 | 52.6 | 0.430 |
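
For readers less familiar with the two statistics reported in Table 5, the sketch below computes overall accuracy and Cohen's Kappa from a confusion matrix. The three-class matrix is invented for illustration and is not data from this study, and the function name is hypothetical.

```python
def overall_accuracy_and_kappa(matrix):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    Rows are reference (ground truth) classes, columns are mapped classes.
    Assumes chance agreement is below 1, so the denominator is non-zero.
    """
    n = sum(sum(row) for row in matrix)
    observed = sum(matrix[i][i] for i in range(len(matrix))) / n
    row_totals = [sum(row) for row in matrix]
    col_totals = [sum(col) for col in zip(*matrix)]
    # Agreement expected by chance, from the row and column marginals.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / (n * n)
    kappa = (observed - expected) / (1 - expected)
    return observed, kappa

# Hypothetical pixel counts for three classes (not data from this study).
confusion = [
    [50, 10, 5],
    [8, 40, 12],
    [2, 10, 63],
]
accuracy, kappa = overall_accuracy_and_kappa(confusion)
print(f"Overall accuracy: {accuracy:.1%}, kappa: {kappa:.3f}")
```

In this made-up example, 153 of 200 pixels fall on the diagonal, giving an overall accuracy of 76.5%, while Kappa is lower (about 0.645) because it discounts the agreement expected by chance.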

Table 6. Content matrix summarizing interview findings.

| Themes | Quote/Example | Research Finding | Subthemes | Relevance to Land Cover Classification Map and Methodology |

|---|---|---|---|---|

| Utility | “What is the purpose of us seeing this?” | Many farmers were unsure how the classification maps could fit into the farm management but were excited about the maps and being able to keep them. | Beauty | Landcover maps are created with the intention of better understanding the makeup of a given area to enhance land management. However, there were no clear farmer-generated ideas on the implementation of the maps in their own management, nor any motivation to implement the ones suggested by researchers. |

| Novelty | The majority of farmers provided excited exclamations when presented with a map. | Farmers are open to the use of maps and the classification and visuals in their present form. | Pride, Technology | There is still excitement about the prospect of utilizing drone imagery and classifications but there still exists a gap in understanding the applicability of relatively new technology in these contexts. |

| “You can think you know everything. On the contrary, huh. Technology advances, Knowledge is continuous”. | ||||

| Orientation | “I don’t know where it is”. | When relevant personal landmarks were noted, farmers often used them to orient themselves. In the case that they were not present, their absence was noted, and farmers then used other points or direction from interviewers to orient themselves. | Movement, Landmarks, Perspectives | In connection to novelty and utility, a lack of orientation means that the imagery or classification maps may not be implemented and may instead become a barrier for farmers engaging with this technology. |

| “Oh, there’s my lake!” or “I let myself be led by the buildings”. | ||||

| Biodiversity | Many farmers noted that other food crops and vegetation were present on the farm but had not been mapped (i.e., peppers, guaraguao trees, smaller citrus, mangoes). | Within diversified farming, there is a wealth of food crops and non-food crops that farmers prioritize. | Food Crops, Land Management | While capturing biodiversity present in diverse agroecosystems is desired, maps created that highlight such diversity may also be overwhelming or imperceivable to those who have not yet had an introduction to this type of imagery. |

| Clarity | “I know the farm, but that’s not exactly it, but it’s not because I really see it there”. Visual representation provided in a concise format supported outward expressions of map legibility. | While farmers express wanting representation of the entirety of crops and vegetation, a cursory introduction to the maps in a simplified form aids synthesis of imagery and content. | Digestibility, Simplification | Understanding the audience of a map is a principal element of cartography. In a setting such as this study, creating a simpler iteration may serve as a tool with which to foster connections and understand where to expound upon classifications or tools in the future. |

| Land Management | A farmer speaking to the increased heat noted they needed to plant more plants to shade coffee. Farmers intercropped coffee with citrus as a means of protecting the coffee (their primary crop). | Land management techniques often include practices to address climatic conditions. By diversifying crops, farmers are better shielded from economic downturns and a rapidly changing environment. | Crop Selection, Crop Placement | Land management may inform classifications by creating more targeted areas for ground truthing and testing sites. For example, if a farmer noted that coffee was planted under an area of dense canopy, it may make sense to ground truth the area heavily and test the degree to which the coffee in that area was present in the classification. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style

Klenke, G.; Brines, S.; Hernandez, N.; Li, K.; Glancy, R.; Cabrera, J.; Neal, B.H.; Adkins, K.A.; Schroeder, R.; Perfecto, I. Farmer Perceptions of Land Cover Classification of UAS Imagery of Coffee Agroecosystems in Puerto Rico. Geographies 2024, 4, 321-342. https://doi.org/10.3390/geographies4020019

