Large Language Models for UAVs: Current State and Pathways to the Future

Summary

1. LLMs like BERT, GPT, T5, XLNet, ERNIE, and BART can significantly improve UAV performance in various tasks such as command interpretation, situation awareness, and decision-making.

2. LLM integration enables UAVs to process and analyze large amounts of data in real-time, enhancing their autonomy and adaptability in dynamic environments.

3. LLMs can optimize UAV communication by improving spectrum sensing and sharing capabilities, leading to more efficient and reliable data transmission.

4. LLM-integrated UAVs have wide applications in surveillance, emergency response, delivery services, environmental monitoring, and satellite communications.

5. Challenges in implementing LLMs in UAVs include computational resource constraints, latency issues, model robustness, integration with existing systems, and data security.

6. Future research directions focus on advancements in LLM algorithms, integration with emerging technologies, computational efficiency optimization, latency reduction, reliability enhancement, and regulatory considerations.

The paper highlights the transformative potential of LLM-UAV integration in creating more sophisticated, intelligent, and responsive autonomous systems across various domains.

Challenges

1. Computational Resources and Power Consumption:

- - LLMs require significant computational power and energy, which can be limited on UAVs due to their lightweight design and power supply constraints.

- - The high power consumption of LLMs can quickly drain UAV batteries, reducing flight duration and operational efficiency.

- - Addressing this challenge involves model simplification, quantization techniques, and the use of advanced AI hardware like GPUs, FPGAs, and ASICs.

2. Latency Issues:

- - Real-time data processing and decision-making in UAV operations can be hindered by the latency introduced when offloading LLM computations to cloud servers.

- - This latency can be detrimental in scenarios requiring immediate responses, such as navigation, surveillance, and tactical operations.

- - Mitigating latency involves enhancing onboard processing capabilities, implementing edge computing solutions, and optimizing data transmission protocols.

3. Model Robustness:

- - LLMs may produce unpredictable or incorrect outputs in novel or edge-case scenarios, as they rely on patterns learned from training data that may not cover all real-world situations.

- - The risk is particularly high in dynamic UAV environments where quick and accurate decisions are crucial.

- - Ensuring robustness requires continuous model updating with new data, extensive simulation-based testing, fail-safe mechanisms, and redundant systems.

4. Integration with Existing Systems:

- - Integrating LLMs with UAVs' diverse hardware and software components, each with unique specifications and requirements, can be complex and time-consuming.

- - Seamless interaction between LLMs and flight control, navigation, communication, and data processing units is essential for optimal performance.

- - Addressing integration challenges involves modular system design, standardized data formats, incremental testing, and systematic maintenance frameworks.

5. Data Security and Privacy:

- - UAVs equipped with LLMs often process sensitive data, including personal information collected during missions, which can be vulnerable to breaches and unauthorized access.

- - Ensuring data security is crucial to prevent privacy violations and maintain the confidentiality and integrity of the information.

- - Robust data encryption, access control mechanisms, and compliance with data protection regulations are essential for safeguarding sensitive data processed by LLMs in UAVs.
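
As a rough illustration of the quantization techniques mentioned under the first challenge, the sketch below applies symmetric per-tensor int8 quantization to a handful of weights. It is a minimal example under stated assumptions, not a method taken from the paper: the function names and the sample weights are hypothetical, and real deployments typically rely on a framework's quantization tooling rather than hand-written code.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization: floats -> int8-range integers plus one scale."""
    max_abs = max(abs(w) for w in weights)
    # Guard against an all-zero tensor, where any positive scale works.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


# Hypothetical weights, chosen only for illustration.
weights = [0.42, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight is stored in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. This is the memory and energy trade-off that makes quantization attractive on power-constrained platforms.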

LLMs used on UAVs

1. BERT (Bidirectional Encoder Representations from Transformers):

- - BERT has been used to interpret complex commands, extract relevant information from mission data, and enhance contextual understanding in UAV operations.

- - It has been particularly useful in surveillance and monitoring missions where precise understanding of sensor data and operational directives is critical.

2. GPT (Generative Pre-trained Transformer) Series:

- - GPT models have been employed to generate detailed mission reports, conduct dialogues, and enable natural language interaction with UAVs.

- - They have been used in UAV training simulations for generating realistic scenarios and interactive communications.

3. T5 (Text-to-Text Transfer Transformer):

- - T5 has been utilized for tasks such as translating communications between different languages or protocols, summarizing extensive exploration data, and transforming raw sensor outputs into actionable text formats.

- - Its versatility in handling diverse text-based tasks has made it valuable in various UAV communication scenarios.

4. XLNet:

- - XLNet has been applied in complex, dynamic operational environments such as search, rescue, and disaster response, where interpreting and responding to context-heavy instructions in real-time is essential.

- - Its flexible and comprehensive language understanding capabilities have been beneficial in these challenging scenarios.

5. ERNIE (Enhanced Representation through kNowledge Integration):

- - ERNIE has been used in UAV missions that require a deep understanding of specific terminologies or concepts, such as environmental monitoring applications involving ecological data.

- - Its ability to integrate external knowledge through knowledge graphs has been valuable in these domain-specific contexts.

6. BART (Bidirectional and Auto-Regressive Transformers):

- - BART has been employed for tasks such as generating precise and contextually accurate mission reports or instructions, particularly in scenarios involving the summarization of detailed surveillance data.

- - Its dual capabilities in understanding and generating text have made it useful in UAV communication tasks that require both comprehension and generation.

These LLMs have been adapted and integrated into various UAV systems to enhance their natural language processing, decision-making, and communication capabilities. The choice of LLM depends on the specific requirements and challenges of the UAV application, such as the need for contextual understanding, text generation, or domain-specific knowledge integration. As research in this field progresses, it is likely that more LLMs will be developed and optimized specifically for UAV applications, further expanding the possibilities for intelligent and autonomous UAV operations.

Abstract: Unmanned Aerial Vehicles (UAVs) have emerged as a transformative technology across diverse sectors, offering adaptable solutions to complex challenges in both military and civilian domains. Their expanding capabilities present a platform for further advancement by integrating cutting-edge computational tools like Artificial Intelligence (AI) and Machine Learning (ML) algorithms. These advancements have significantly impacted various facets of human life, fostering an era of unparalleled efficiency and convenience.

Large Language Models (LLMs), a key component of AI, exhibit remarkable learning and adaptation capabilities within deployed environments, demonstrating an evolving form of intelligence with the potential to approach human-level proficiency. This work explores the significant potential of integrating UAVs and LLMs to propel the development of autonomous systems.

We comprehensively review LLM architectures, evaluating their suitability for UAV integration. Additionally, we summarize the state-of-the-art LLM-based UAV architectures and identify novel opportunities for LLM embedding within UAV frameworks. Notably, we focus on leveraging LLMs to refine data analysis and decision-making processes, specifically for enhanced spectral sensing and sharing in UAV applications. Furthermore, we investigate how LLM integration expands the scope of existing UAV applications, enabling autonomous data processing, improved decision-making, and faster response times in emergency scenarios like disaster response and network restoration. Finally, we highlight crucial areas for future research that are critical for facilitating the effective integration of LLMs and UAVs.

Submission history

From: Shumaila Javaid
[v1] Thu, 2 May 2024 21:30:10 UTC (3,455 KB)

Shumaila Javaid, Nasir Saeed, Bin He

S. Javaid and B. He are with the Department of Control Science and Engineering, College of Electronics and Information Engineering, Tongji University, Shanghai 201804, China, and also with Frontiers Science Center for Intelligent Autonomous Systems, Shanghai 201210. Email: {shumaila, hebin}@tongji.edu.cn.

N. Saeed is with the Department of Electrical and Communication Engineering, United Arab Emirates University (UAEU), Al Ain, 15551, UAE. Email: mr.nasir.saeed@ieee.org.

Index Terms:

UAVs, Large Language Models, spectral sensing, autonomous systems, decision-making

I Introduction

Unmanned Aerial Vehicles (UAVs) have been a focus of attention for over fifty years due to their remarkable autonomy, mobility, and adaptability, enhancing a wide array of applications, including surveillance [1, 2], monitoring [3, 4], search and rescue [5], healthcare [6], maritime communications [7], and wireless network provisioning [8]. These foundational achievements prompted the integration of Artificial Intelligence (AI) with UAVs. Particularly in the 2010s, advances in both UAV technology and AI reached a critical juncture, leading to substantial benefits across various applications. For example, AI-enabled UAVs employ facial recognition and real-time video analysis techniques to enhance security and monitoring of remote areas [9, 10, 11]. In agriculture, UAVs with AI models analyze crop health for precise farming, improving resource efficiency and yields [12, 13]. Meanwhile, AI-driven UAVs optimize logistics route planning and inventory management, streamlining warehouse operations and improving delivery efficiency [14, 15, 16].

Among these advancements, Large Language Models (LLMs) have recently gained significant attention as they enable systems to learn from application behavior and optimize existing systems [17, 18]. Various LLMs employing transformer architectures, such as the Generative Pre-trained Transformer (GPT) series [19], Bidirectional Encoder Representations from Transformers (BERT) [20], and Text-to-Text Transfer Transformer (T5) [21], exhibit fundamental capabilities. Due to extensive training on large datasets, they excel at understanding, generating, and translating human-like text, making them valuable for robotics, healthcare, finance, education, customer service, and content creation applications. Furthermore, the proficiency of these models in real-time data processing, natural language understanding and generation, content recommendation, sentiment analysis, automated response, language translation, and content summarization creates opportunities in the UAV domain. For instance, they enable UAVs to respond swiftly to dynamic environmental changes and communication demands [22, 23]. Their adaptive learning capabilities facilitate continuous improvement in operational strategies based on incoming data, enhancing decision-making processes [24]. Additionally, their ability to support multiple languages broadens their applicability in global operations, particularly valuable for UAV communications in diverse applications such as smart cities, healthcare, rescue operations, emergency response, media, and entertainment [25, 26, 27].

Recent literature [28, 29, 30] has explored incorporating LLMs into UAV communication systems to enhance interaction with human operators and among UAVs. Traditionally, UAVs operate on pre-programmed commands with limited dynamic interaction capabilities. However, integrating LLMs enables support for natural and intuitive communication methods. For example, LLMs can interpret and respond to commands in natural language, simplifying UAV control and allowing the handling of complex, real-time mission adjustments. This transforms UAVs into more adaptable and practical tools across various applications [31]. LLMs can also enhance UAVs’ autonomous decision-making based on communication context or environmental data [32, 33]. For example, in search and rescue operations, LLMs can analyze messages and environmental data to determine priorities and actions without human input. In multi-UAV operations, LLMs can facilitate better communication and coordination, managing and optimizing information flow between UAVs and improving overall efficiency and effectiveness. LLMs can also enhance data processing and reporting capabilities by generating summaries, insights, and actionable recommendations from vast amounts of collected data. Furthermore, LLMs can be trained to recognize patterns and anomalies in communication data, which is crucial for preempting and resolving potential issues [34, 35]. For instance, if a UAV sends inconsistent data, LLMs could quickly detect the anomaly and alert operators. LLMs can also enhance scalability and adaptation in communication protocols by automatically learning and adapting to new protocols based on new data or operational changes, ensuring seamless communication. Finally, pre-training LLMs with simulated data helps them capture mission conditions and requirements, enabling real-time adaptation during missions for optimal performance.

This work is motivated by the potential of integrating LLMs into UAV communication systems. We comprehensively analyze existing LLM methods focusing on UAV integration to highlight advantages and limitations in expanding current UAV communication systems’ capabilities. The review summarizes state-of-the-art LLM-integrated architectures, explores opportunities for LLM incorporation into UAV architecture, and addresses spectrum sensing and sharing concerning LLM integration. We aim to showcase how LLMs can optimize communication, adapt to new missions dynamically, and process complex data streams, enhancing UAV efficiency and versatility across various domains, including emergency response, environmental monitoring, urban planning, and satellite communication. Additionally, we address the legal, ethical, and technical challenges of deploying AI-driven UAVs, emphasizing responsible and effective integration, laying the groundwork for advancing UAV technology to meet future demands, and exploring innovative AI applications in UAV systems.

I-A Contributions

The contributions of this paper are summarized as follows:

- First, we present an in-depth analysis of various LLM architectures, assessing their suitability and potential for integration within UAV systems. This evaluation helps to understand the capabilities and effectiveness of state-of-the-art LLM models for different UAV applications.

- Then, we explore various LLM-based UAV architectures, providing a consolidated view of how UAV technologies evolve by integrating sophisticated AI models. Furthermore, areas that can further benefit from LLM integration are highlighted.

- After that, we discuss enhancing spectral sensing and sharing capabilities in UAVs through LLM integration. The impact of LLM integration on UAVs for optimized spectrum sensing, data processing, and decision-making is presented.

- Finally, we demonstrate how LLM integration with the UAV framework can extend the capabilities of UAVs in various sectors, including surveillance and reconnaissance, emergency response, delivery, and enhanced network connectivity during emergencies. Moreover, critical areas for future research essential for the successful and effective integration of LLMs with UAV systems are identified and highlighted.

I-B Related Surveys

| Ref. | Main Focus | Key Findings |
|---|---|---|
| [36] | LLM-based autonomous agents | |
| [37] | Development of LLM-based AI agents | |
| [38] | Aligning LLMs with human expectations | |
| [39] | Deployment challenges of LLMs | |
| [40] | Challenges of LLM | |
| [41] | Limitations of LLM | |
| [30] | GAI in UAV systems | |
| [22] | GAI in UAV swarms | |
| [42] | | |
| [43] | LLMs in wireless networks | |
| This paper | LLM-integrated UAV systems | |

LLMs hold promise for revolutionizing various domains; therefore, several recent review articles exist on the topic. For instance, [44, 45, 46] investigate LLM architectures, while [47, 48, 49, 50] present an overview of the training process, fine-tuning, logical reasoning, and related challenges, addressing the limitations that hinder broad adoption of LLM-based systems across domains.

In [36], a comprehensive analysis is provided on LLM-based autonomous agents, focusing on their construction, application, and evaluation. These agents, equipped with sophisticated natural language understanding and generation capabilities, operate without human intervention. They interact with environments and users in complex ways, necessitating the integration of advanced AI techniques for tasks like communication and problem-solving across various domains such as social science, natural science, and engineering. Another work [37] delves into the development and use of LLM-based AI agents, emphasizing their role in advancing artificial general intelligence. LLMs are recognized as foundational for creating versatile AI agents due to their language capabilities, which are crucial for various autonomous tasks. The authors propose a framework based on brain, perception, and action components to enhance agents’ performance in complex environments.

In [38], challenges and advancements in aligning LLMs with human expectations are critically examined. Concerns like misunderstanding instructions and biased outputs are addressed through technologies enhancing LLM alignment. Data collection strategies, training methodologies, and model evaluation techniques are explored to improve performance in understanding and generating human-like responses. Another study [39] investigates challenges in deploying LLMs, particularly in resource-constrained settings. Model compression techniques like quantization, pruning, and knowledge distillation are discussed to improve efficiency and applicability.

While [40, 41] investigate challenges of LLMs, including vast dataset management and high costs, they point out limitations that cannot be overcome merely by increasing model size.

The work in [30] explores Generative Artificial Intelligence (GAI) applications in improving UAV communication, networking, and security performance. A GAI framework is introduced to advance UAV networking capabilities. The survey in [22] examines the challenges of UAV swarms in dynamic environments, discussing various GAI techniques for enhancing coordination and functionality.

In [42], the authors explore the potential of Large-GenAI models to enhance future wireless networks by improving wireless sensing and transmission. They highlight the benefits of these models, including enhanced efficiency, reduced training requirements, and improved network management. In another study [43], the authors investigated the application of LLMs for developing advanced signal-processing algorithms in wireless communication and networking. They explored the potential and challenges of using LLMs to generate hardware description language code for complex tasks, focusing on code refactoring, reuse, and validation through software-defined radios. This approach led to significant productivity improvements and reduced computational challenges.

Although [30, 22] focus broadly on GAI, the specific application of LLMs in UAV communication systems still needs to be explored. This gap highlights an area poised for investigation. Table I summarizes existing surveys’ primary focus and critical findings.

I-C Organization

The rest of the paper is organized as follows. In Section II, we present an overview of LLMs, introducing foundational concepts and developments in this area. Section III is dedicated to exploring LLMs for UAVs, where we discuss the integration and adaptation of LLM technologies within UAV systems. Section IV focuses on network architectures for LLMs in UAV communications, examining the structural designs that support LLM functionalities in UAV networks. Section V addresses spectrum management and regulation for LLMs in UAVs. Section VI explores applications and use cases of LLMs in UAV communications, outlining practical implementations and the benefits derived from these technologies. Section VII examines the challenges and considerations in implementing LLM-integrated UAVs, discussing potential obstacles and operational considerations. Section VIII is dedicated to future directions and research opportunities, presenting potential areas for further exploration and development of LLMs in UAVs. Finally, Section IX concludes the paper with a summary of our findings and reflections on the broader implications of our research.

II Overview of Large Language Models (LLMs)

LLMs undergo extensive training on large datasets, demanding high computational power and the integration of ML algorithms and deep neural network architectures to enable sophisticated language processing abilities [51]. This training encompasses diverse and extensive datasets, including text from various sources such as books, websites, and articles, aiding the model in learning language structure, vocabulary, grammar, and contextual nuances [51]. By integrating ML algorithms, LLMs interpret data, adjusting their learning process to language nuances and refining prediction and response generation [52]. Typically based on the transformer architecture, LLMs utilize self-attention mechanisms, which allow every word in a sentence to be processed in parallel. This capability allows a comprehensive understanding of context, significantly enhancing the model’s ability to manage long-range dependencies in text, thereby improving context awareness [53]. LLMs consist of multiple neural network layers, each performing complex computations. These layers process inputs sequentially, refining information progressively. Their parameters, which number from millions to billions, dictate how input data is transformed into outputs. During training, these parameters are adjusted to minimize prediction errors, progressively improving the model’s ability to recognize complex patterns and relationships within the data [54].
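
To make the self-attention mechanism described above more concrete, the following is a minimal sketch of scaled dot-product attention in plain Python. It assumes toy two-dimensional embeddings and uses the same vectors as queries, keys, and values; the learned projection matrices and multiple heads of a real transformer are deliberately omitted, and all names are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Each output is a weighted average of all value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


# Three toy token embeddings (assumed values, for illustration only).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
attended = self_attention(tokens, tokens, tokens)
```

Every output row depends on all input tokens at once, which is why the rows can be computed in parallel and why distant tokens can influence each other directly.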

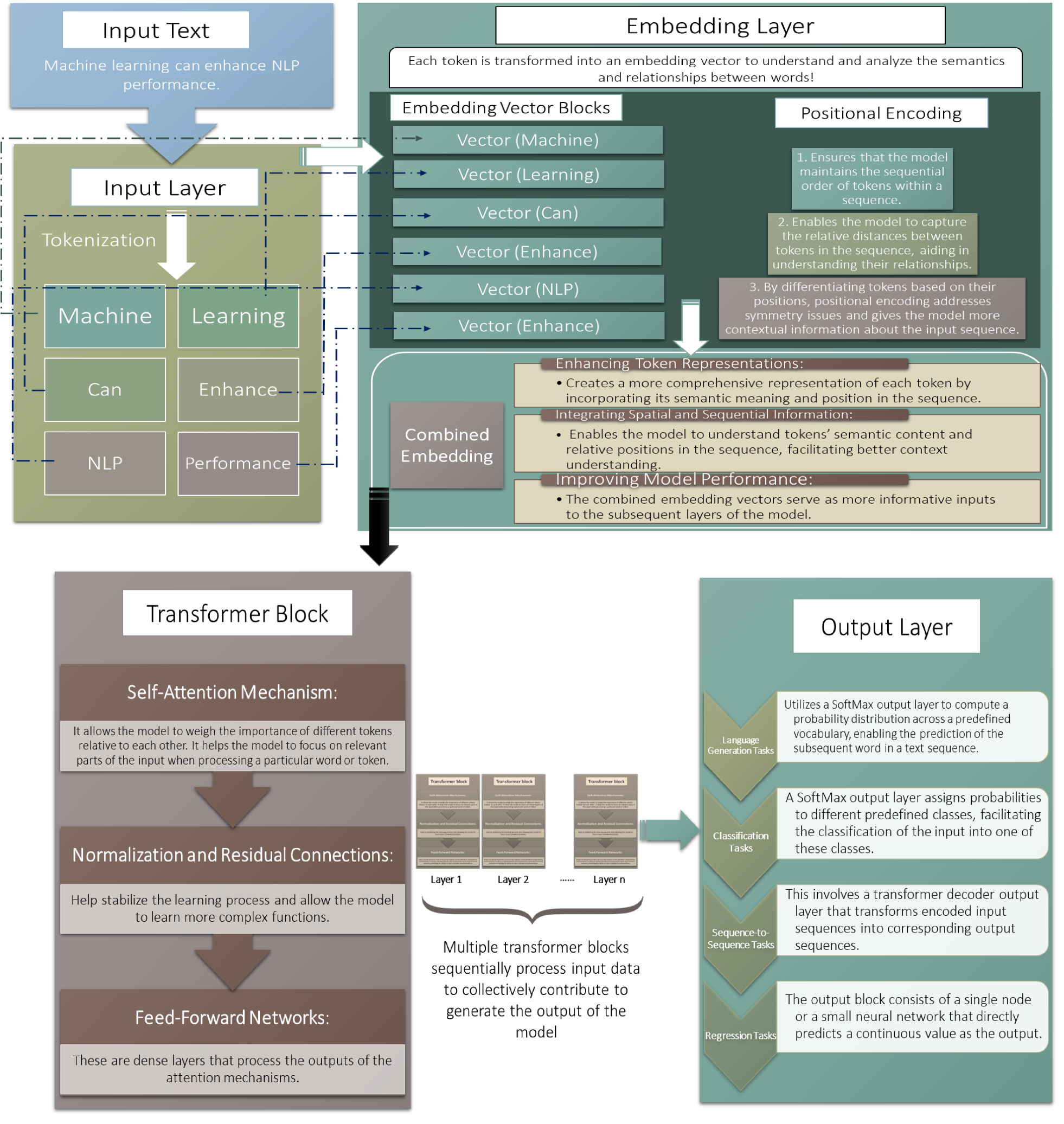

The architecture of LLMs, based on the transformer, incorporates attention mechanisms to weigh the importance of different parts of the input, which is essential for tasks like language understanding [55]. Positional encodings preserve word order, which is crucial because natural language is sequential. Training involves adjusting parameters through gradient descent and backpropagation. Backpropagation calculates the gradient of the loss function with respect to each parameter, and gradient descent uses these gradients to update the parameters iteratively and minimize prediction error. Through this process, LLMs continually learn from mistakes, generating coherent and contextually appropriate responses and improving their ability to handle complex language tasks. Fig. 1 illustrates the typical layers involved, showing how input text is transformed through multiple processing stages to produce output.
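
The training loop described above can be sketched on a deliberately tiny problem. The example below fits a one-parameter model y = w * x by gradient descent on mean squared error; the derivative is written out by hand, which is what backpropagation would compute for this single-layer case. The data, learning rate, and step count are assumptions chosen for illustration and bear no relation to how an actual LLM is trained at scale.

```python
def loss_and_gradient(w, data):
    """Mean squared error of y = w * x and its derivative with respect to w."""
    n = len(data)
    loss = sum((w * x - y) ** 2 for x, y in data) / n
    grad = sum(2 * (w * x - y) * x for x, y in data) / n
    return loss, grad


def gradient_descent(data, w=0.0, learning_rate=0.05, steps=200):
    """Repeatedly step against the gradient to reduce the loss."""
    history = []
    for _ in range(steps):
        loss, grad = loss_and_gradient(w, data)
        history.append(loss)
        w -= learning_rate * grad
    return w, history


# Toy data generated from y = 2x, so the ideal parameter is w = 2.
data = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
w_fit, losses = gradient_descent(data)
```

The recorded losses shrink from step to step as w approaches 2. An LLM applies the same update rule to billions of parameters, with gradients obtained by backpropagating through many layers.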

The broad landscape of LLMs is based on several essential models with unique characteristics tailored to specific linguistic tasks. For example, BERT [20, 56] revolutionized the field by using bidirectional training of transformers to improve context understanding, making it exceptionally practical for tasks such as question answering and language inference. Since its introduction, BERT has been widely adopted for improving search engine results, enabling more nuanced and context-aware responses to queries. Google has incorporated BERT into its search algorithms, significantly enhancing search accuracy by better understanding the intent behind users’ queries [57]. Moreover, Enhanced Representation through Knowledge Integration (ERNIE) [58] extends BERT by incorporating structured knowledge, such as entity concepts, to enhance language comprehension. ERNIE is used for language understanding tasks in Chinese, showcasing superior performance in language inference and named entity recognition. It demonstrates the importance of integrating structured knowledge into pre-trained language models, especially for languages with complex structures like Chinese.

The GPT series [59, 60], by contrast, focuses on generating human-like text by predicting the next word in a sequence, showcasing remarkable proficiency in various generative tasks. The GPT models, particularly later versions such as GPT-3, have been instrumental in creating advanced chatbots and virtual assistants. These models have been used to generate creative content, from writing articles to composing poetry, demonstrating their versatility in handling various innovative and conversational tasks.

On the other hand, T5 [21] adopts a unified framework that converts all text-based language problems into a text-to-text format, simplifying the process of training and applying transformers. T5 has been effectively used in summarization, translation, and classification tasks. Its flexible text-to-text approach can be fine-tuned for various language tasks without significantly changing the underlying model architecture [61].
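
The text-to-text idea can be illustrated by how different tasks are rendered as plain input strings with a task prefix. In the sketch below, the `summarize:` and `translate English to German:` prefixes follow the convention commonly associated with T5, while the `classify status:` prefix, the function name, and the UAV-flavored example sentences are hypothetical and serve only as illustration.

```python
def to_text_to_text(task, text, source=None, target=None):
    """Render a task as a single input string with a task prefix."""
    if task == "summarize":
        return f"summarize: {text}"
    if task == "translate":
        if not source or not target:
            raise ValueError("translation needs source and target languages")
        return f"translate {source} to {target}: {text}"
    if task == "classify":
        # Hypothetical prefix, not one of the published T5 prefixes.
        return f"classify status: {text}"
    raise ValueError(f"unknown task: {task}")


requests = [
    to_text_to_text("summarize", "Survey log: sector A clear, sector B flooded."),
    to_text_to_text("translate", "Return to base.", source="English", target="German"),
    to_text_to_text("classify", "Battery at 14 percent, wind gusts rising."),
]
```

Because every task becomes a string in and a string out, the same model and the same training objective can serve all three requests. Only the prefix changes.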

XLNet, in turn, introduces permutation-based training, which captures bidirectional context by training the model to predict tokens autoregressively under many different orderings of the sequence rather than only from left to right. This strategy allows XLNet to outperform models like BERT on tasks requiring a deep understanding of context, such as question answering and document ranking, making it particularly useful in academic and professional settings where the precise interpretation of information is crucial [62].
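
Permutation-based training can be pictured by listing, for one sampled prediction order, which tokens are visible when each target is predicted. The sketch below is a simplified illustration under assumed inputs (a four-word sentence and a hand-picked order); it shows only the visibility pattern and leaves out the attention masking and sampling details of the actual model.

```python
def permutation_contexts(tokens, order):
    """For a factorization order, list (target, visible context) pairs.

    Each target is predicted from the tokens that come earlier in `order`;
    the context is reported with the original sentence positions.
    """
    pairs = []
    for step, position in enumerate(order):
        visible = sorted(order[:step])
        context = [(p, tokens[p]) for p in visible]
        pairs.append((tokens[position], context))
    return pairs


tokens = ["drone", "scans", "the", "field"]
order = [2, 0, 3, 1]  # one assumed factorization order, for illustration
pairs = permutation_contexts(tokens, order)
```

In this order, the word "scans" at position 1 is predicted last, with "drone" to its left and "the" and "field" to its right all visible. Averaged over many orders, each token learns from context on both sides while the objective remains autoregressive.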

Lastly, Bidirectional and Auto-Regressive Transformers (BART) combines autoencoding and autoregressive approaches, making it highly effective for sequence-to-sequence tasks and text generation. It has been used extensively in text summarization applications, as it generates concise summaries of long documents without losing critical information. It is particularly useful for legal and medical documents, where extracting accurate information quickly from large texts is vital [63]. The next section details how these models work and highlights their opportunities for UAVs.

Some less common LLM models have also been integrated into UAVs for innovative applications. In [64], the authors employed UAVs to handle complex tasks involving semantically rich scene understanding by integrating LLMs and Visual Language Models (VLMs). The approach allows UAVs to provide zero-shot literary text descriptions of scenes, which are both instant and data-rich, suitable for applications ranging from the film industry to theme park experiences and advertising. The descriptions generated by this system achieve a high readability score, highlighting the system’s ability to develop highly readable and detailed descriptions, demonstrating the practical use of microdrones in challenging environments for efficient and cost-effective scene interpretation. The authors in [65] integrated LLMs in UAV control by developing a multimodal evaluation dataset that combines text commands with associated utterances and relevant images, enhancing UAV command interpretation. The presented study evaluates the effectiveness of generic versus domain-specific speech recognition systems, adapted with varying data volumes, to optimize command accuracy. It also innovatively integrates visual information into the language model, using semi-automatic methods to link commands with images, providing a richer context for command execution.

In another work [66], the authors focused on enhancing human-drone interaction by implementing a multilingual speech recognition system to control UAVs. This work addresses the complexities and training challenges associated with traditional RF remote controls and ground control stations using natural, user-intuitive interfaces that recognize speech in English, Arabic, and Amazigh. The study developed a two-stage approach by initially creating a deep learning-based model for multilingual speech recognition, which was then implemented in a real-world setting using a quadrotor UAV. The model was trained on extensive records, including commands and unknown words mixed with background noise, to enhance its robustness for controlling drones across linguistic backgrounds.

In [67], the authors explore the application of LLMs to enhance the resilience and adaptability of autonomous multirotors through the REsilience and Adaptation using LLMs (REAL) approach. REAL integrates LLMs into robots’ mission planning and control frameworks, leveraging their extensive capabilities in long-term reasoning, natural language comprehension, and prior knowledge extraction. REAL utilizes LLMs to enhance robot resilience in facing novel or challenging scenarios, interpret natural language and log data for better mission planning, and adjust control inputs based on minimal user-provided information about robot dynamics. Experimental results demonstrate that this integration effectively reduces position-tracking errors under scenarios of controller parameter errors and unmodeled dynamics. Furthermore, it enables the multirotor to make decisions that avoid potentially dangerous situations not previously considered in the design phase, showcasing LLMs’ practical benefits and potential in improving autonomous UAV operations.

III LLMs for UAVs

Due to the growing interest in LLM-integrated UAV systems across various applications, several recent work exists. For instance, in [68], the authors introduced a vision-based autonomous planning system for quadrotor UAVs to enhance safety. The system predicts trajectories of dynamic obstacles and generates safer flight paths using NanoDet for precise obstacle detection and Kalman Filtering for accurate motion estimation. Additionally, the system incorporates LLMs such as GPT-3 and ChatGPT to facilitate more intuitive human-UAV interactions. These LLMs enable Natural Language Processing (NLP), allowing users to control UAVs through simple language commands without requiring complex programming knowledge. They translate user instructions into executable code, enabling UAVs to execute tasks and provide feedback in natural language, simplifying the control process. UAVs can operate in ad-hoc and mesh fashion to form dynamic networks without relying on pre-existing infrastructure. This makes them particularly valuable when establishing permanent network infrastructure is impractical, such as disaster response, military operations, or environmental monitoring. Both ad-hoc and mesh networks enhance UAVs’ ability to configure and maintain connectivity as they move automatically. They continuously discover new neighbors and can adjust routes based on the network’s topology and traffic conditions, improving scalability and flexibility [69]. Integrating LLMs into UAV communications enhances their ability to understand network conditions and generate insights based on the networks’ characteristics, thereby highlighting their adaptability and responsiveness to quickly adapt to changing environmental conditions and operational demands. LLMs also help UAVs understand network traffic patterns for recommending adaptive protocols that reduce latency and increase throughput, particularly in the variable conditions common in these networks. 
They also assist in simulating or modeling the behavior of the networks under various scenarios, helping in planning and decision-making processes for UAV deployments. Therefore, the LLM incorporation can enhance data analysis, improving data exchange efficiency between UAVs. LLMs, with their capability to process and learn from vast amounts of data, enable UAVs to make informed decisions about route planning, data forwarding, and network configuration. For instance, in response to UAV failures or environmental obstacles, LLMs can swiftly calculate alternative routes or reconfigure the network to sustain connectivity and performance. Moreover, LLMs foster higher levels of autonomy in UAVs by equipping them with advanced cognitive capabilities, allowing UAVs to understand and execute complex commands and interact more naturally with human operators or other autonomous systems.
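The rerouting behavior described above can be illustrated with a minimal sketch. It assumes the mesh is available as a plain adjacency map and uses an ordinary breadth-first search to find a replacement route once a UAV is marked as failed; the node names and the `shortest_route` helper are hypothetical. The routing primitive itself is not an LLM; an LLM-based planner could be pictured as deciding when to invoke such a routine and how to explain the result to an operator.

```python
from collections import deque

def shortest_route(links, src, dst, failed=frozenset()):
    """Breadth-first search for a fewest-hop route that avoids failed UAVs."""
    if src in failed or dst in failed:
        return None
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        node = path[-1]
        if node == dst:
            return path
        for nxt in sorted(links.get(node, ())):
            if nxt not in seen and nxt not in failed:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None  # destination unreachable with the remaining UAVs

# Hypothetical five-UAV mesh: A-B, A-C, B-D, C-E, D-E
MESH = {
    "A": ["B", "C"],
    "B": ["A", "D"],
    "C": ["A", "E"],
    "D": ["B", "E"],
    "E": ["C", "D"],
}

normal = shortest_route(MESH, "A", "D")
rerouted = shortest_route(MESH, "A", "D", failed={"B"})
```

Here `normal` goes through UAV B, while `rerouted` detours through C and E after B is marked as failed.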

Furthermore, LLMs can analyze data from UAVs (such as operational logs and telemetry data) to predict potential failures or maintenance needs before they occur. This predictive capability can significantly increase the reliability and lifespan of UAVs, reducing downtime and maintenance costs. Security is also a paramount concern in decentralized ad-hoc networks; LLMs can enhance security protocols by identifying potential threats through pattern recognition and anomaly detection and simulating attack scenarios to develop more robust security measures. LLMs can also optimize allocating critical resources such as bandwidth and power within the UAV network. LLMs dynamically allocate resources by understanding and predicting network demands to maximize efficiency and extend UAV operational times. They improve the interface between human operators and the UAV network, providing more intuitive control and feedback systems, including generating natural language reports on network status or translating complex network data into actionable insights for decision-makers. In addition, LLMs address scalability challenges inherent in ad-hoc networks. They dynamically adjust network protocols and configurations as the number of UAVs changes, ensuring the network remains stable and efficient regardless of size. By integrating LLM capabilities, UAV ad-hoc networks can become more intelligent, responsive, and efficient, significantly enhancing their effectiveness across various applications.

This section provides a detailed overview of different LLMs and discusses the opportunities they bring for UAV-based communication systems.

III-A BERT-enabled UAVs

As discussed in the previous section, BERT is an influential model in NLP, developed by researchers at Google and released in 2018 [20]. BERT’s development represented a turning point in NLP, providing a more nuanced and effective way for machines to process and understand human language by fully leveraging the context surrounding each word. BERT employs two stages: pre-training and fine-tuning. In pre-training, the model is trained on a large corpus of text with tasks designed to help it learn general language patterns. These tasks include predicting masked words in a sentence (i.e., Masked Language Model (MLM)) and predicting whether two sentences logically follow each other (i.e., Next Sentence Prediction (NSP)). After pre-training, BERT is fine-tuned with additional data tailored to specific tasks (e.g., question answering or sentiment analysis) [70, 71].
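To make the MLM objective concrete, the following is a minimal sketch of the input corruption step only, not of BERT’s actual implementation. It follows the 15% selection rate and the 80/10/10 replacement rule reported in the original BERT paper; the per-token coin flip, the toy vocabulary, and the function name `mask_tokens` are simplifying assumptions.

```python
import random

MASK = "[MASK]"

def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Corrupt a token list for MLM and return (corrupted, labels).

    Each selected token becomes [MASK] 80% of the time, a random
    vocabulary token 10% of the time, and stays unchanged 10% of the time.
    labels[i] holds the original token at selected positions, else None.
    """
    rng = random.Random(seed)
    corrupted, labels = [], []
    for tok in tokens:
        if rng.random() < mask_prob:
            labels.append(tok)  # the model must recover this token
            roll = rng.random()
            if roll < 0.8:
                corrupted.append(MASK)
            elif roll < 0.9:
                corrupted.append(rng.choice(vocab))
            else:
                corrupted.append(tok)
        else:
            labels.append(None)  # position is not scored
            corrupted.append(tok)
    return corrupted, labels

# Hypothetical UAV command used as training text
command = "inspect the bridge and report any visible cracks".split()
corrupted, labels = mask_tokens(command, vocab=command, mask_prob=0.15, seed=1)
```

Because the model sees the uncorrupted words on both sides of every selected position, the prediction is conditioned on bidirectional context, which is the property the paragraph above attributes to BERT.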

The introduction of BERT significantly advanced the state of the art in a wide range of NLP tasks. It showed remarkable performance improvements on leaderboards for tasks such as named entity recognition [72, 73], sentiment analysis [74, 75], and especially question answering and natural language inference, where the full-sentence context from both directions can be crucial for understanding subtleties. In addition, BERT has inspired numerous variations and improvements, leading to the development of different models such as Robustly optimized BERT approach (RoBERTa) [76], Distilled Bidirectional Encoder Representations from Transformers (DistilBERT) [77], and A Lite BERT (ALBERT) [78] that use the original architecture and training procedures of BERT to optimize other factors such as training speed, model size, or enhanced performance.

Integrating BERT can significantly enhance UAV performance across various domains. For instance, in emergency response scenarios, BERT can help UAVs understand complex natural language commands from disaster management teams. BERT can also interpret and summarize information from UAV sensors and reports, making it particularly valuable in surveillance missions where quick summarization of extensive video data is essential. Moreover, BERT’s ability to rapidly analyze and interpret data from multiple sources enables timely, informed decisions, which are crucial in environmental monitoring for assessing conditions like forest fires or pollution. Furthermore, its proficiency in parsing and understanding commands ensures precise coordination among multiple UAVs, which is critical for complex logistics operations involving supply delivery in challenging environments. Recently, in [79], the authors introduced an end-to-end Language Model-based fine-grained Address Resolution framework (LMAR) explicitly designed to enhance UAV delivery systems. Traditional address resolution systems rely primarily on user-provided Point of Interest (POI) information, which often lacks the precision necessary for accurate deliveries. To address this, LMAR employs a language model to process and refine user-input text data, improving data handling and regularization and thereby the accuracy and efficiency of UAV deliveries. In other works [80, 81], the authors develop enhanced security and forensic analysis protocols for UAVs to support increased drone usage across various sectors, including those vulnerable to criminal misuse. They introduce a named entity recognition system to extract information from drone flight logs. This system utilizes BERT and DistilBERT models fine-tuned on annotated data, significantly improving the identification of relevant entities crucial for forensic investigations of drone-related incidents. 
The authors in [82] focused on enhancing the target recognition capabilities of UAVs in intelligent warfare by constructing a standardized knowledge graph from large-scale, unstructured UAV data. The authors introduced a two-stage knowledge extraction model with an integrated BERT pre-trained language model to generate character feature encoding, which enhances the efficiency and accuracy of information extraction for future UAV systems.

III-B GPT-enabled UAVs

The GPT series developed by OpenAI represents a significant evolution in the design and capabilities of LLMs, enhancing various natural language processing tasks such as text generation, translation, summarization, and question answering [83]. The first model, GPT-1, was introduced in June 2018 and is based on a stack of decoder blocks from the transformer architecture. GPT-1 was pre-trained on a language modeling task (predicting the next word in a sentence) using the BooksCorpus dataset, which comprises over 7,000 unique unpublished books (totaling around 800 million words). After this initial pre-training, the model was fine-tuned with supervised learning for specific tasks [19, 83].

GPT-2 was released in February 2019 and expanded significantly on its predecessor, featuring up to 48 layers in its largest version, with 1,600 hidden units, 25 attention heads, and 1.5 billion parameters [84]. GPT-2 used the WebText dataset, created by scraping web pages linked from Reddit posts with at least three upvotes. This resulted in a diverse dataset of around 40GB of text data. GPT-2 continued using the unsupervised learning approach, leveraging only language modeling for pre-training without task-specific fine-tuning. This demonstrated the model’s ability to generalize from language understanding to specific tasks [85]. GPT-3, released in June 2020, was one of the largest AI language models created up to that point, with 175 billion parameters. It includes 96 layers, with 12,288 hidden units and 96 attention heads [86]. It was trained on an even more extensive and diverse dataset, including a mixture of licensed data, data created by human trainers, and publicly available data, significantly larger than that of GPT-2. GPT-2 and GPT-3 used an unsupervised learning model, demonstrating exceptional capabilities in learning from large datasets [87]. GPT-4, built on an advanced transformer-style architecture, significantly expands in size and complexity compared to its predecessors, GPT-2 and GPT-3. This model has been fine-tuned using Reinforcement Learning from Human Feedback and employs publicly available internet data and data licensed from third-party providers. However, specific details related to the architecture, such as the model size, hardware specifications, computational resources used for training, dataset construction, and training methodology, have not been publicly disclosed [88].
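The layer counts and hidden sizes quoted above can be sanity-checked against the reported parameter totals. The sketch below uses the common rule of thumb that one decoder block holds roughly 12·d² weights (about 4·d² in the attention projections and 8·d² in a feed-forward network with a 4× expansion). It ignores embeddings, biases, and layer normalization, so it is an approximation rather than an exact count, and the helper name is hypothetical.

```python
def approx_decoder_params(n_layers, d_model):
    """Rule-of-thumb parameter count for a decoder-only transformer.

    Per block: 4*d^2 (query, key, value, output projections)
             + 8*d^2 (feed-forward d -> 4d -> d).
    Embeddings, biases, and layer norms are deliberately left out.
    """
    return 12 * n_layers * d_model ** 2

gpt2_largest = approx_decoder_params(n_layers=48, d_model=1600)
gpt3 = approx_decoder_params(n_layers=96, d_model=12288)

print(f"GPT-2 (largest): ~{gpt2_largest / 1e9:.2f}B parameters")
print(f"GPT-3:           ~{gpt3 / 1e9:.1f}B parameters")
```

The estimates come out at roughly 1.47 billion and 173.9 billion, which is consistent with the 1.5 billion and 175 billion figures once embeddings are added. The same arithmetic shows why onboard deployment is demanding: at 2 bytes per weight, 175 billion parameters need about 350 GB just to store.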

Integrating the GPT series into UAVs represents an innovative intersection of AI and drone technology that can enhance UAVs’ functionality, autonomy, and interaction capabilities across a broad range of uses, from enhanced control systems to fully autonomous task execution [89, 90]. For example, GPT integration allows UAVs to execute instructions provided in plain language with a high level of proficiency: an operator command to inspect the condition of a bridge at specific coordinates will prompt the UAV to devise a flight path and carry out all the necessary steps for bridge inspection without requiring manual inputs for each step. Similarly, when these models are integrated with the UAV’s sensors and data collection systems, they can automatically generate detailed reports from the data collected during flights, with textual descriptions highlighting aspects such as mission outcomes and detected anomalies [91]. This makes it easier for human operators to understand what the UAV has observed without reviewing extensive raw data. For instance, Tazir et al. in [89] integrated OpenAI’s GPT-3.5-Turbo with a UAV simulation system (i.e., the PX4/Gazebo simulator) to create a natural language-based drone control system. The system architecture is designed to allow seamless interaction between the user and the UAV simulator through a chatbot interface facilitated by a Python-based middleware. The Python middleware is the core component, establishing a communication channel between the chatbot (GPT-3.5-Turbo) and the PX4/Gazebo simulator. It processes natural language inputs from the user, forwards these inputs to the ChatGPT model using the OpenAI API, retrieves the generated responses, and converts them into commands that the simulator understands. ChatGPT provides guidance and support through PX4 commands and explanations, thus enhancing the interactivity and accessibility of UAV simulation systems. 
It also facilitates the control and management of UAVs through sophisticated AI-driven interfaces. In another work [92], the authors integrated advanced GPT models and dense captioning technologies into autonomous UAVs to enhance their functionality in indoor inspection environments. The proposed system enables UAVs to understand and respond to natural language commands like humans, increasing their accessibility and ease of use for operators without advanced technical skills. The UAVs’ dense captioning models facilitate this human-like interaction by analyzing images captured during flight to generate detailed object dictionaries. These dictionaries allow the UAVs to recognize and understand various elements within their environment, dynamically adapting their behavior in response to both expected and unexpected conditions, thus improving the efficiency and accuracy of UAVs for indoor inspections across various environmental conditions and applications.
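The middleware pattern described for [89] (user text in, model reply out, reply converted into simulator commands) can be sketched as follows. This is not the authors’ code: the command vocabulary (`TAKEOFF`, `GOTO`, `LAND`) is a hypothetical stand-in rather than PX4 syntax, and `stub_llm` replaces the real chat-completion call with a fixed reply so the example runs offline. The point it illustrates is that free-form model output should be filtered through a whitelist before anything reaches the flight stack.

```python
# Hypothetical command vocabulary: name -> number of numeric arguments
ALLOWED = {"TAKEOFF": 1, "GOTO": 3, "LAND": 0}

def stub_llm(prompt):
    """Stand-in for a chat-completion request; returns a fixed reply."""
    return (
        "Sure, here is the plan:\n"
        "TAKEOFF 10\n"
        "GOTO 5 5 10\n"
        "LAND\n"
        "REBOOT now"
    )

def parse_commands(reply):
    """Keep only whitelisted commands with the expected numeric arguments."""
    commands = []
    for line in reply.splitlines():
        parts = line.strip().split()
        if not parts or parts[0] not in ALLOWED:
            continue  # explanations and unknown commands are dropped
        args = parts[1:]
        if len(args) != ALLOWED[parts[0]]:
            continue  # wrong arity
        try:
            commands.append((parts[0], [float(a) for a in args]))
        except ValueError:
            continue  # non-numeric argument
    return commands

plan = parse_commands(stub_llm("Take off to 10 m, fly to (5, 5), then land."))
```

In this run the explanatory sentence and the unlisted `REBOOT` line are discarded, leaving three validated commands that a simulator adapter could translate further.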

Furthermore, in dynamic or complex environments requiring fast decisions, the GPT series can assist by processing real-time data and communications, providing suggestions or automated decisions based on the data. For example, in a search and rescue operation, a GPT model can analyze live video feeds and text reports from multiple UAVs, synthesize the information, and recommend areas to focus on or adjustments to search patterns [29]. It can also play a crucial role in enhancing collaborative UAV-to-UAV communication by establishing a decentralized swarm intelligence system where UAVs can share information and make group decisions. For instance, UAVs can use natural language to report their status and findings to each other, coordinate their actions based on shared goals, and optimize task distribution among the group without constant human intervention [93]. The GPT series can also support UAV operator training by generating realistic mission scenarios and responses, giving operators practice in handling different situations and improving their responses in real-world operations [91].

III-C Text-to-Text Transfer Transformer (T5) for UAVs

Google introduced the T5 model in October 2019 and adopted a novel and streamlined approach to handling various NLP tasks by reframing them as text-to-text problems [94]. Unlike traditional models that require different architectures for different tasks and produce varied outputs, T5 standardizes the input and output across all tasks [95]. Each NLP task, such as translation, summarization, question answering, or text classification, is treated as generating new text from a given text. Consequently, T5 employs a single consistent model architecture for all tasks. This simplification streamlines both the model training and deployment pipeline, as the same model can be trained across multiple tasks with minimal architectural modifications [21]. For example, in a translation task where the input is English text, and the output is French text, both are treated merely as sequences of words. T5 is pre-trained on a large corpus of text in a self-supervised manner, primarily using a variation of the masked language model task, similar to BERT. This pre-training equips the model to understand and generate natural language effectively. Following this, T5 is fine-tuned on specific tasks by adjusting its training data to suit the text-to-text format. The versatility of T5 makes it suitable for a broad range of applications, including language translation, document summarization, and sentiment analysis, where it interprets text sentiment by producing descriptive tags. It also excels in question answering by generating appropriate textual answers [96].
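The text-to-text framing can be illustrated with a short sketch of how different tasks are serialized into a single input string. The `translate ... to ...:` and `summarize:` prefixes follow the convention used in the T5 paper; the `classify status:` prefix, the helper name, and the UAV-flavored examples are hypothetical and only show how a new task could be added without changing the model architecture.

```python
def to_text_to_text(task, text, **options):
    """Serialize a task request into a single T5-style input string."""
    if task == "translate":
        return f"translate {options['src']} to {options['tgt']}: {text}"
    if task == "summarize":
        return f"summarize: {text}"
    if task == "classify_status":
        # Hypothetical custom task; the target would be a short tag
        # such as "nominal" or "fault", emitted as plain text.
        return f"classify status: {text}"
    raise ValueError(f"unknown task: {task}")

examples = [
    to_text_to_text("translate", "Return to base.", src="English", tgt="French"),
    to_text_to_text("summarize", "UAV-2 surveyed sector 4 and found no hotspots."),
    to_text_to_text("classify_status", "motor 3 current spike, altitude dropping"),
]
```

Every entry in `examples` is a plain string and every expected answer is also a plain string, so one encoder-decoder model and one training loop can serve all three tasks.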

UAVs can integrate the T5 framework to enhance the efficiency of UAV operations. Similar to GPT and BERT, T5 also improves command interpretation and response generation for UAVs, where complex commands issued by operators in natural language are interpreted and converted into executable instructions for the UAV. T5 is also proficient in generating comprehensive mission reports based on the data collected by the UAV by summarizing key findings, highlighting anomalies, and describing the surveyed area for environmental monitoring or disaster response applications. Moreover, T5 can perform real-time operations by processing data streams from UAV sensors and cameras, providing immediate, actionable insights. For instance, during a search and rescue operation, T5 could quickly summarize visual and sensor data to describe potential areas of interest or hazards, helping guide rescue efforts more effectively. At the same time, T5 can significantly enhance the performance of coordinated UAV missions by interpreting messages from one UAV and generating appropriate responses or commands for others, facilitating seamless teamwork for various applications ranging from managing flight patterns to avoiding collisions or coordinating timings for area surveillance.

T5-assisted UAV communication also enables automated troubleshooting and feedback. If a UAV encounters issues or anomalies during its operation, T5 can help by interpreting error messages or sensor data and generating troubleshooting steps or advice in natural language. This can also extend to providing real-time feedback to operators on mission progress or suggesting adjustments to improve operational efficiency. In addition, T5 can generate simulated mission scenarios and dialogues based on historical data or potential future situations for training purposes.

III-D eXtreme Learning NETwork (XLNet) for UAVs

XLNet is an advanced NLP model developed jointly by researchers from Google and Carnegie Mellon University [62]. Unlike BERT, which employs an MLM approach (i.e., where some words in a sentence are randomly masked and predicted), XLNet uses a permutation-based training strategy. This approach considers all possible permutations of the words in a sentence during training, enabling the model to predict a target word based on all potential contexts provided by other words before and after it. This method significantly enhances the flexibility and depth of contextual understanding. In addition, the permutation-based training allows XLNet to capture a richer understanding of language context, unlike BERT, which focuses only on predicting masked words and might miss contextual nuances [97, 98].

Furthermore, XLNet avoids the discrepancies between pre-training and fine-tuning phases seen in BERT by not relying on word masking during training, leading to more consistent behavior across different operational phases. XLNet also merges strategies from autoregressive language modeling (e.g., GPT series) and autoencoding (e.g., BERT) by training autoregressively without adhering to a fixed sequence order. Instead, it predicts words based on varied permutations, enhancing its comprehension and generation capabilities [99]. Consequently, XLNet has demonstrated superior performance on several NLP tasks, including question answering, natural language inference, and document ranking, by effectively utilizing complete sentence structures for a deeper and more accurate contextual understanding [100, 101, 102].
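The permutation-based objective can be made concrete with a small sketch. It samples one factorization order and lists, for each target position, which positions are visible as context. Two assumptions apply: XLNet samples orders during training rather than enumerating all of them, and the real model realizes the order through attention masks while leaving the input sequence in its original order. The function name and example sentence are hypothetical.

```python
import random

def permutation_contexts(tokens, seed=0):
    """Sample one factorization order and list each target's visible context.

    Returns (order, steps), where steps[k] = (target_position,
    sorted positions that precede the target in the sampled order).
    """
    rng = random.Random(seed)
    order = list(range(len(tokens)))
    rng.shuffle(order)
    steps = []
    for k, pos in enumerate(order):
        visible = sorted(order[:k])  # may lie left or right of pos
        steps.append((pos, visible))
    return order, steps

tokens = "survey the flooded area near the river".split()
order, steps = permutation_contexts(tokens, seed=3)
for pos, visible in steps:
    context = [tokens[i] for i in visible]
    print(f"predict {tokens[pos]!r} (position {pos}) from {context}")
```

Each token is still predicted autoregressively, one at a time, but the context for a given position can include words on both sides of it and never contains a mask symbol, which is how the method combines the autoregressive and bidirectional views described above.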

Integrating XLNet into UAVs can offer unique advantages due to its sophisticated approach to language processing [103, 104]. XLNet’s permutation-based training enables a more nuanced and comprehensive understanding of context, making it particularly effective for interpreting complex instructions or environmental data where the context can vary significantly. For instance, during search and rescue missions, where the operational environment is complex and dynamic, XLNet can provide more reliable interpretations of context-heavy commands in real time. Similarly, because XLNet is trained over many permutations of the input, it can be more robust against noisy or incomplete inputs, which are common in real-world UAV tasks. This feature is especially beneficial in combat or disaster response scenarios where communications may be disrupted or incomplete, since XLNet’s ability to contextually predict missing information can help maintain the effectiveness of UAV operations.

III-E Enhanced Representation through kNowledge Integration (ERNIE) for UAVs

Baidu Research introduced ERNIE in June 2019 to integrate world knowledge into pre-trained language models [58]. It represents a significant evolution in language understanding due to its novel approach of integrating structured world knowledge into the training of language models. Unlike conventional models that rely on vast amounts of textual data to learn language patterns, ERNIE enhances these models by incorporating knowledge graphs into the training process. Knowledge graphs are structured databases that store information about the world in a machine-readable form, representing entities (such as people, places, and things) and the relationships between them.

ERNIE is trained on both traditional text corpora and knowledge graphs. Including knowledge graphs allows ERNIE to understand and represent complex relationships and attributes associated with various entities [105]. This training involves two key components: textual data and knowledge integration. As with other models like BERT or GPT, ERNIE processes a vast amount of text to learn the syntactic and semantic patterns of language. At the same time, the knowledge integration component enables ERNIE to learn from knowledge graphs, from which it absorbs structured information about real-world entities and their interrelations. This process enables ERNIE to understand context not only from linear text but also from a multi-dimensional perspective involving real-world facts and relationships. Integrating knowledge graphs offers ERNIE a deeper understanding of language semantics, as it can relate words and phrases to real-world entities and their properties. This capability allows it to perform better on tasks that require nuanced understanding, such as question answering and named entity recognition [106, 107].
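As an illustration of what a knowledge graph contributes, the sketch below stores a few (subject, relation, object) triples and retrieves the facts attached to entities mentioned in an operator command. It shows only the data structure and a naive string-matching entity lookup; it does not reproduce how ERNIE fuses such knowledge into its parameters during training. All entity names, relations, and the `facts_for` helper are hypothetical.

```python
# Hypothetical knowledge graph as (subject, relation, object) triples
TRIPLES = [
    ("Ridge Dam", "is_a", "dam"),
    ("Ridge Dam", "located_in", "North Valley"),
    ("North Valley", "known_hazard", "flash floods"),
    ("Pine Pass", "is_a", "mountain pass"),
]

def facts_for(text, triples):
    """Return the facts for every graph entity mentioned in the text."""
    lowered = text.lower()
    subjects = sorted({s for s, _, _ in triples})
    mentioned = [s for s in subjects if s.lower() in lowered]
    return {
        entity: [(rel, obj) for subj, rel, obj in triples if subj == entity]
        for entity in mentioned
    }

command = "Inspect Ridge Dam at dawn and report seepage."
context = facts_for(command, TRIPLES)
```

For this command, `context` links the mention of the dam to its type and location, which is the kind of background a knowledge-enhanced model can draw on when a command uses geographic or operational terms.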

In addition, ERNIE’s ability to draw upon external knowledge helps it provide contextually appropriate responses or analysis, especially when background knowledge about specific topics is crucial. It can also better handle ambiguity in language, as the additional data from knowledge graphs provides clarity on potentially confusing or unclear text based on the broader context of the entities involved [108]. The applications of ERNIE are broad and impactful, particularly in areas where deeper understanding and contextual awareness are necessary. For example, ERNIE can leverage its integrated knowledge base to answer complex questions that require understanding beyond the text, such as historical facts or specific details about people or places. It also improves the performance of semantic search engines by understanding the deeper meanings of queries regarding the knowledge it has learned, offering more relevant and precise answers.

ERNIE’s unique capability to integrate structured world knowledge from knowledge graphs with textual data can substantially benefit UAV communication. For example, ERNIE can interpret complex, context-dependent commands issued by operators more effectively than traditional language models. If an operator gives a command involving geographic or operational terms, ERNIE’s integration of knowledge graphs allows it to understand and execute the command more accurately. This is crucial during complex missions in unfamiliar territories where a precise understanding of local geography and terms is necessary. ERNIE can also support autonomous decision-making based on environmental data and mission objectives, as it can process both the current mission data and integrated knowledge to make informed decisions. For example, in environmental monitoring, ERNIE could identify specific features or anomalies in the landscape based on its broader understanding of environmental science, aiding in more effective data collection and analysis.

ERNIE can also improve real-time situational awareness during critical missions such as search and rescue or disaster response, where it can apply its semantic understanding to interpret real-time data inputs (e.g., visual or sensor data) against its knowledge graph. This helps identify relevant entities or situations quickly, such as recognizing areas historically known to be hazardous or interpreting signs of human activity in remote sensing data. In multi-UAV scenarios, ERNIE can facilitate better communication and coordination by understanding and managing the information exchange between UAVs. It can interpret and prioritize communication based on its relevance and urgency to the mission’s objectives, using its semantic understanding to ensure that UAVs operate harmoniously.

Furthermore, for training purposes, ERNIE can generate context-rich simulation scenarios that incorporate real-world knowledge into training exercises. These exercises help operators develop a better understanding of how to interact with UAVs in complex scenarios, enhancing their preparedness for real-world operations. Similarly to other LLMs, after mission completion, ERNIE can assist in generating detailed incident reports and debriefings that include observational data and contextual insights based on the integrated knowledge to provide a semantic analysis of the mission outcomes. Accordingly, by leveraging its ability to integrate and utilize extensive knowledge graphs alongside textual data, ERNIE can significantly enhance the capabilities of UAV communication systems, making them more intelligent, responsive, and effective in complex operational environments. This makes ERNIE particularly valuable for advanced UAV applications where conventional language models might fail to understand and process complex contextual information.

III-F Bidirectional and Auto-Regressive Transformers (BART) for UAVs

Facebook developed BART, which combines the strengths of both auto-encoding and auto-regressive techniques within the transformer framework, making it exceptionally effective for sequence-to-sequence tasks [109]. Unlike BERT, which is primarily designed for understanding and predicting elements within the same input text, BART is optimized for tasks requiring text generation or transformation. It is trained by corrupting text with various noising functions, such as token masking and text infilling, and then learning to reconstruct the original text [110, 70]. This training equips BART to handle a wide range of applications, including text summarization, where it can generate concise versions of longer documents, and text generation, suitable for creating content or generating dialogue. In addition, BART’s capabilities extend to machine translation and data augmentation, making it a versatile tool for transforming input text into coherent and contextually appropriate output sequences [111].
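The two noising functions named above differ in one important way, which the following sketch makes visible: token masking replaces each chosen token with its own mask, whereas text infilling replaces a whole span with a single mask, so the model must also infer how many tokens are missing. In the BART paper the span lengths are drawn from a Poisson distribution with λ = 3; here the positions and spans are passed in explicitly to keep the example deterministic. The function names and the sample sentence are hypothetical.

```python
MASK = "<mask>"

def token_masking(tokens, positions):
    """Replace each chosen token with its own mask (length is preserved)."""
    return [MASK if i in positions else tok for i, tok in enumerate(tokens)]

def text_infilling(tokens, spans):
    """Replace each (start, length) span with a single mask token.

    Spans must be sorted and non-overlapping. A span of length 0
    inserts a mask without removing anything.
    """
    out, cursor = [], 0
    for start, length in spans:
        out.extend(tokens[cursor:start])
        out.append(MASK)
        cursor = start + length
    out.extend(tokens[cursor:])
    return out

report = "uav two reports low battery near the ridge".split()
masked = token_masking(report, positions={3})
infilled = text_infilling(report, spans=[(2, 3)])
```

During pre-training, the corrupted sequence is fed to the encoder and the decoder is trained to regenerate the original `report`, which is why the resulting model handles noisy or partially missing text well.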

BART integration into UAVs offers several advantages, particularly in tasks that involve complex text processing and generation. For example, BART can enhance the formulation and interpretation of mission reports, automatically generating concise summaries from extensive surveillance data or sensor readings, thus aiding in quicker decision-making and briefing. BART is also proficient in generating coherent text sequences for automated responses or instructions to UAV operators, specifically in scenarios requiring fast and accurate communication.

Furthermore, BART can improve real-time strategy adjustments during search and rescue operations by interpreting incoming data and providing updated mission objectives or directions based on the evolving scenario. It can also transform noisy, incomplete textual data into intelligible information, which makes it particularly valuable in dynamic and challenging UAV operating environments, where communications must remain clear and contextually relevant despite the complexities involved.

III-G Comparison of different LLMs for UAVs

The comparison of different LLMs (i.e., BERT, GPT, T5, XLNet, ERNIE, and BART) for UAVs reveals distinct capabilities tailored to various aspects of UAV operations, reflecting their unique architectures and training approaches. For instance, BERT excels at understanding context from both directions around a word, making it highly effective for interpreting complex commands and extracting relevant information from mission data. It is particularly suited for tasks where a precise understanding of sensor data or operational directives is critical, such as in surveillance or monitoring missions where deep contextual knowledge is crucial. In contrast, GPT specializes in generating coherent and extended text outputs, which are beneficial for creating detailed mission reports or conducting dialogues. This model is ideal for UAV training simulations that require narrative-style updates or interactive communications for generating operational logs or debriefing reports.

T5, by contrast, exhibits high versatility by converting any text-based task into a text-to-text format, simplifying the processing of diverse types of communication. It proves effective in UAV communication tasks such as translating communications between different languages or protocols, summarizing extensive exploration data, and transforming raw sensor outputs into actionable text formats. XLNet, on the other hand, employs permutation-based training and understands language context more flexibly and comprehensively than BERT. This model is helpful in complex, dynamic operational environments such as search and rescue and disaster response, where interpreting and responding to context-heavy instructions in real time is essential.

Similarly, ERNIE enhances the semantic understanding of language by integrating external knowledge through knowledge graphs, making it well-suited for missions that require a deep understanding of specific terminologies or concepts, such as environmental monitoring applications that involve specific ecological data. BART, in turn, combines the benefits of auto-encoding and auto-regressive models and excels at both understanding and generating text. It is ideal for producing precise and contextually accurate mission reports or instructions and for summarizing detailed surveillance data, where maintaining the integrity of information and its concise presentation are crucial.

In conclusion, BERT and XLNet are highly effective at understanding commands due to their deep contextual understanding, with XLNet providing additional flexibility in dynamic contexts. Meanwhile, GPT and BART excel at creating coherent, extensive texts, with BART offering additional capabilities in text transformation tasks. T5 offers broad applicability across text transformation tasks, making it versatile for various communication needs. ERNIE stands out in scenarios where integrating specialized knowledge is essential for accurate operation and decision-making. Accordingly, each model can be selected based on the UAV mission’s specific requirements to ensure that communication remains effective and efficient, tailored to the complexities and challenges of UAV operations. Table II highlights various LLM models, including their key features, applications in the UAV domain, and challenges for integration in UAV systems.

IV Network Architectures for Integrating LLMs in UAVs

Integrating LLMs with UAVs involves deploying advanced language processing capabilities to enable sophisticated decision-making and interaction abilities. The UAV platform consists of essential hardware, including the UAV itself equipped with flight control hardware, sensors like cameras and LiDAR, and communication modules such as Wi-Fi, LTE, and satellite. It also includes small-scale onboard computers for real-time data processing. In LLM integration, lightweight versions of LLMs are directly deployed on UAVs for rapid and autonomous decision-making through Edge AI. For more complex computations, UAV data is transmitted to cloud servers where more robust LLMs perform analyses, and the results are then sent back to the UAV. Ground control stations support these operations, allowing operators to monitor and control UAVs remotely via direct line-of-sight or satellite communication, using secure data links for data transmission.

The operation of this system involves several key functions. UAVs collect data via onboard sensors, capturing visual imagery, environmental data, or specific readings relevant to their missions. This data is either processed locally or sent to a ground station or cloud server, depending on the task’s complexity and onboard processing unit capabilities. The embedded LLM on the UAV processes data for simple tasks to make real-time decisions. For more complex decisions, data is sent to the cloud, where powerful LLMs analyze it, make decisions, or generate insights, which are then transmitted back to the UAV. Based on this processed data and decisions made by the LLMs, the UAV executes actions such as optimizing its flight path, interacting with the environment, or performing specific tasks like delivery, surveillance, or data collection.

Feedback and learning are integral to this system, where data from missions are used to retrain or refine LLMs, improving their accuracy and decision-making capabilities. This continuous feedback loop helps the model adapt to specific environments for optimal task performance. The integration of LLMs with UAVs thus offers significant enhancements to UAV operations, opening up vast possibilities for improved capabilities and effectiveness.
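The split between onboard and cloud processing described above can be illustrated with a minimal routing sketch. The complexity score, the `onboard_capacity` threshold, the `cloud_compute_ms` estimate, and all class and function names are assumptions introduced here for illustration; the survey does not prescribe a specific offloading rule.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    complexity: float   # hypothetical score in [0, 1]; higher means harder
    deadline_ms: float  # latest acceptable response time


@dataclass
class LinkState:
    connected: bool
    round_trip_ms: float  # measured UAV <-> cloud round-trip latency


def route_task(task, link, onboard_capacity=0.4, cloud_compute_ms=200.0):
    """Return 'onboard' or 'cloud' for a task (illustrative rule only)."""
    # Simple tasks stay on the lightweight onboard model.
    if task.complexity <= onboard_capacity:
        return "onboard"
    # Complex tasks are offloaded only if the link is up and the
    # estimated cloud response still meets the task deadline.
    if link.connected and link.round_trip_ms + cloud_compute_ms <= task.deadline_ms:
        return "cloud"
    # Otherwise fall back to the onboard model: degraded but timely.
    return "onboard"


if __name__ == "__main__":
    link = LinkState(connected=True, round_trip_ms=300.0)
    print(route_task(Task("obstacle_avoidance", 0.2, 50.0), link))
    print(route_task(Task("mission_replanning", 0.9, 2000.0), link))
```

The fallback branch reflects one possible design choice: when the link is down or too slow, a coarse onboard answer is preferred over a late cloud answer.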

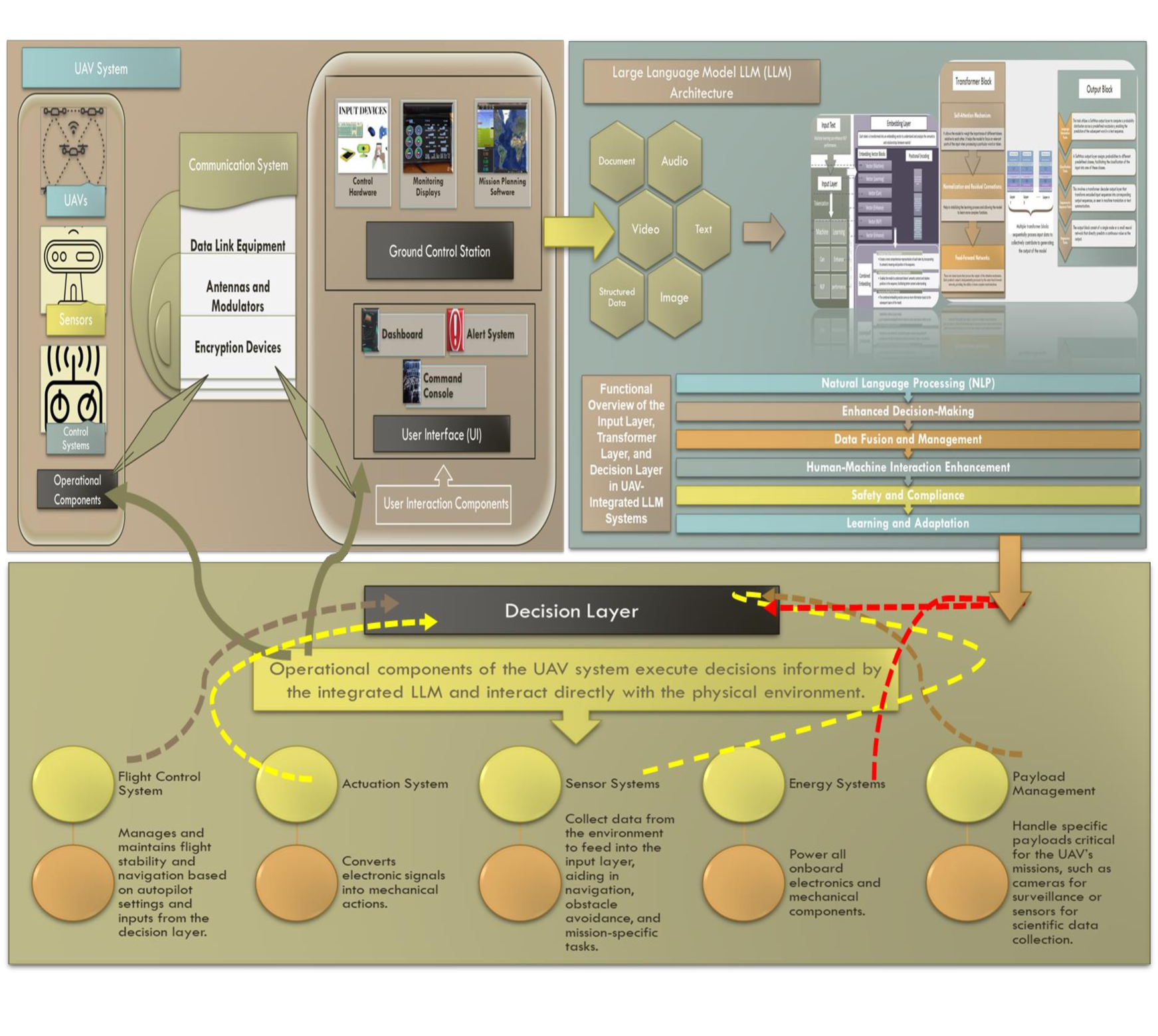

Fig. 2 illustrates the comprehensive architecture of a UAV system integrated with an LLM, where the UAV collects data from sensors. This data, encompassing various types such as text, audio, and video, is input into the integrated LLM architecture. The LLM processes this data and outputs results to the decision layer, which then issues commands to operational components, including the flight controller, sensor systems, energy systems, and payload management systems.
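As a rough illustration of the decision layer in this architecture, the sketch below assumes the LLM emits its proposals as a JSON list and that the decision layer forwards only whitelisted actions to the operational components. The JSON schema, the subsystem keys, and the action names are hypothetical and are not taken from the figure.

```python
import json

# Hypothetical subsystem and action names, mirroring the operational
# components listed above (flight controller, sensors, energy, payload).
ALLOWED_ACTIONS = {
    "flight_controller": {"set_waypoint", "hold_position", "return_home"},
    "sensor_systems": {"start_capture", "stop_capture"},
    "energy_systems": {"enter_low_power"},
    "payload_management": {"release_payload"},
}


def decide(llm_output):
    """Validate LLM output and return a list of (subsystem, action, params)."""
    try:
        proposals = json.loads(llm_output)
    except json.JSONDecodeError:
        return []  # unparseable output results in no commands
    if not isinstance(proposals, list):
        return []
    commands = []
    for proposal in proposals:
        if not isinstance(proposal, dict):
            continue
        subsystem = proposal.get("subsystem")
        action = proposal.get("action")
        # Only forward actions that are explicitly whitelisted.
        if (
            isinstance(subsystem, str)
            and isinstance(action, str)
            and action in ALLOWED_ACTIONS.get(subsystem, set())
        ):
            commands.append((subsystem, action, proposal.get("params", {})))
    return commands
```

Keeping a fixed whitelist between the LLM and the actuators is one way to ensure that malformed or unexpected model output cannot reach the flight controller directly.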

Ground control and base stations are also key elements in the UAV operations infrastructure, serving as command and control centers that handle everything from flight authorization and monitoring to data processing and deployment management. Integrating LLMs with ground control and base stations significantly enhances UAV management and operation. For example, LLMs can improve communication between UAVs and their control stations by interpreting and processing natural language commands or queries. This allows operators to interact with UAVs more intuitively, making complex commands simpler to execute and reducing the potential for human error.

LLMs can process real-time data received from UAVs at the ground control stations to make instant decisions regarding flight paths, mission adjustments, and responses to changing environmental conditions. LLMs can also analyze vast quantities of data much faster than humans, providing critical insights that enable quick decision-making to optimize UAV operations and ensure mission success. In addition, LLMs can utilize historical and real-time data to predict potential issues before they arise, such as mechanical failures, battery depletion, or adverse weather conditions. This predictive capability ensures that preventative measures can be taken in advance, enhancing the safety and reliability of UAV operations.

Furthermore, LLMs can automate routine tasks such as flight scheduling, monitoring UAV conditions, and managing data collection, improving efficiency and leaving more capacity for complex decision-making and operational strategy. LLMs can also significantly improve data handling and analysis by automatically categorizing data, extracting relevant information, and generating comprehensive reports. Moreover, they can analyze imagery and sensor data to identify patterns or anomalies, aiding in missions such as surveillance, environmental monitoring, and infrastructure inspection. LLMs can also create detailed simulations and training scenarios based on accumulated data, providing operators with realistic and varied training experiences so that they are better prepared for complex operational scenarios.

Moreover, with their advanced pattern recognition capabilities, LLMs integrated into ground and base stations can enhance security protocols. They can detect potential cyber threats and unauthorized access attempts, ensuring UAV operations are protected against digital intrusions. In addition, LLMs can optimize resource allocation by predicting the best use of available UAVs and support equipment based on mission requirements. LLMs can also facilitate better interoperability between the systems and software used in UAV operations by acting as a bridge that understands and translates between various data formats and protocols, ensuring seamless integration and communication across diverse platforms. Together, these capabilities lead to efficient management, superior decision-making support, enhanced safety, and improved effectiveness of UAV missions. This broad application of LLMs lays the groundwork for their targeted use in enhancing spectrum sensing capabilities.

Spectrum sensing plays a pivotal role in ensuring effective Radio Frequency (RF) communication for UAVs, especially in complex or congested environments, and the integration of LLMs can significantly enhance UAVs’ spectrum sensing capabilities through sophisticated data processing techniques. This integration deepens the understanding of dynamic RF conditions, which are prevalent in areas with shared frequencies or high interference levels, and enables UAV systems to identify and utilize optimal frequency bands intelligently. Such capabilities improve the reliability and efficiency of their communication networks, which are crucial for maintaining robust links and ensuring the successful execution of UAV operations in RF-dense environments where traditional methods might fail. Consequently, this survey highlights the critical need for LLM integration in spectrum sensing and explores its opportunities and challenges in the subsequent section.

V Spectrum Management and Regulation in LLMs-assisted UAVs

UAVs depend on RF communication for various tasks, including remote control, telemetry, data transmission, and connectivity with ground stations. Spectrum sensing is a key technology that enhances UAVs’ RF communication capabilities by enabling them to identify and utilize appropriate frequency ranges crucial for their missions. Moreover, it is particularly critical in environments where UAVs share frequency bands or encounter rapidly changing RF conditions [112, 113]. Accordingly, by accurately sensing the spectrum, UAVs can dynamically adjust their communication parameters, such as channel selection and power control, to prevent interference with primary users and optimize their communication performance [114]. In addition, spectrum sensing enhances the operational efficiency of UAVs by enabling them to make informed decisions about frequency band selection, thereby ensuring efficient utilization of available spectrum resources and minimizing the risk of interference with existing wireless systems [115, 116].

Furthermore, spectrum sensing plays an essential role in enabling cognitive radio capabilities [117, 112], dynamic spectrum access [118], and interference avoidance [117], and in ensuring regulatory compliance for UAV communication systems [119]. For example, cognitive radio allows UAV systems to adaptively select and switch between different frequency channels or bands based on real-time spectrum sensing results, enabling UAVs to locate and utilize the most suitable, least congested, and interference-free frequency bands for reliable and efficient communication [120]. Dynamic spectrum access allows UAVs to access available spectrum resources dynamically, ensuring that they can avoid interference with existing users while optimizing their communication links. In addition, spectrum sensing facilitates coexistence and interference avoidance by enhancing UAVs’ capabilities in detecting the presence of other RF devices or systems in their vicinity. If interference or potential conflicts are detected, UAVs can autonomously or semi-autonomously change their operating frequency or adjust their communication protocols to avoid interference [121].
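The sense-then-switch behavior described above can be sketched with energy detection, one common spectrum sensing method. The survey does not specify a detector, so the choice of energy detection, the `threshold_factor` value, and all function names below are assumptions made for illustration.

```python
def mean_energy(samples):
    """Average power of a block of complex baseband samples."""
    if not samples:
        raise ValueError("need at least one sample")
    return sum(abs(s) ** 2 for s in samples) / len(samples)


def is_occupied(samples, noise_power, threshold_factor=2.0):
    """Energy detector: declare the channel occupied if the measured
    energy exceeds a multiple of the assumed noise power."""
    return mean_energy(samples) > threshold_factor * noise_power


def select_channel(current, observations, noise_power, threshold_factor=2.0):
    """Stay on the current channel while it is idle; otherwise move to the
    idle channel with the lowest measured energy. Returns None if every
    observed channel appears occupied."""
    if current in observations and not is_occupied(
        observations[current], noise_power, threshold_factor
    ):
        return current
    idle = {
        channel: mean_energy(samples)
        for channel, samples in observations.items()
        if not is_occupied(samples, noise_power, threshold_factor)
    }
    if not idle:
        return None
    return min(idle, key=idle.get)


if __name__ == "__main__":
    # Channel 1 carries a strong signal; channels 2 and 3 are near the noise floor.
    observations = {1: [1 + 1j] * 4, 2: [0.1 + 0j] * 4, 3: [0.05 + 0j] * 4}
    print(select_channel(1, observations, noise_power=0.01))
```

In practice the threshold would be set from a target false-alarm probability and the noise power would itself have to be estimated; both are treated as given here.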

V-A Regulatory Frameworks and Compliance Considerations

Regulatory bodies worldwide, such as the Federal Communications Commission (FCC) in the United States, establish guidelines for spectrum use to ensure fair access and prevent conflicts among various technologies and services, including UAVs. These guidelines designate specific frequency bands for UAV use to avoid conflicts with commercial, residential, and emergency communications, balancing the growing demand for UAV services with the needs of traditional spectrum users. These authorities have also established rules for dynamic spectrum access, particularly in bands where UAVs share the spectrum with other devices. This framework involves protocols and technologies that enable UAVs to detect and utilize vacant frequencies without interfering with incumbent users. Compliance with these frameworks is essential for legal and efficient UAV operations.

To ensure compliance, UAV operators must consider several key aspects. For instance, UAVs must be equipped with advanced spectrum sensing technologies that reliably identify available and occupied channels to prevent unauthorized use of occupied frequencies. UAVs must also operate in a way that minimizes interference with other spectrum users, adhering to power limits, frequency boundaries, and operational protocols designed to mitigate the risk of signal interference [122]. Moreover, it is necessary to implement software solutions that help manage spectrum usage and ensure adherence to local and international regulations. Such software can automate many aspects of spectrum management, reducing the burden on UAV operators and decreasing the risk of non-compliance.
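A software compliance check of the kind mentioned above could, in its simplest form, verify that a planned transmission stays inside a permitted band and under that band's power limit. The sketch below is illustrative only: the band edges and power limits in `EXAMPLE_RULES` are placeholder numbers, not actual regulatory values, and the class and function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BandRule:
    low_mhz: float
    high_mhz: float
    max_power_dbm: float


# Placeholder values for illustration only; NOT real regulatory limits.
EXAMPLE_RULES = [
    BandRule(1000.0, 1010.0, 20.0),
    BandRule(2000.0, 2020.0, 30.0),
]


def check_transmission(center_mhz, bandwidth_mhz, power_dbm, rules):
    """Return a list of violation messages; an empty list means compliant."""
    low = center_mhz - bandwidth_mhz / 2
    high = center_mhz + bandwidth_mhz / 2
    for rule in rules:
        # The whole occupied bandwidth must fit inside a permitted band.
        if rule.low_mhz <= low and high <= rule.high_mhz:
            if power_dbm > rule.max_power_dbm:
                return [
                    f"power {power_dbm} dBm exceeds limit {rule.max_power_dbm} dBm"
                ]
            return []
    return [f"occupied range {low}-{high} MHz is outside every permitted band"]
```

A real deployment would load the rules from the applicable national band plan and would typically also cover constraints such as geographic restrictions, which this sketch omits.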

V-B Integrating LLMs in UAVs Spectrum Management

Recent research has significantly advanced the application of spectrum sensing and sharing in UAV operations, focusing on several key directions to enhance communication efficiency and mitigate interference. Shen et al. [123] introduced a 3D spatial-temporal sensing approach that leverages UAV mobility for dynamic spectrum opportunity detection in heterogeneous environments. The authors in [124] and [125, 126] developed methods to optimize spectrum sensing and sharing in cognitive radio systems, improving UAV communication performance by managing interference with ground links. Chen et al. [127] focused on spectrum access management among UAV clusters to reduce interference, while Xu et al. [128] focused on transmit power allocation and trajectory planning in UAV relay systems for effective data relay between devices.

In another work [129], Qiu et al. utilized blockchain technology to ensure privacy and efficiency in spectrum transactions between terrestrial and aerial systems. Hu et al. [130] focused on the strategies for spectrum allocation using contract theory to balance the interests of macro base stations and UAV operators. Azari et al. [131] compared underlay and overlay spectrum sharing mechanisms in densely populated urban scenarios, emphasizing the effectiveness of overlay strategies for maintaining service quality for both UAVs and ground users. While significant advancements have been made in spectrum sensing and sharing technologies for UAVs, the integration of LLMs has not been widely explored in the existing research. Integrating LLMs can revolutionize the UAV domain by enhancing spectrum sensing capabilities, enabling more dynamic and efficient use of communication frequencies [132]. LLMs can interpret and analyze the vast amounts of data generated by spectrum sensors on UAVs. With their advanced natural language processing capabilities, they can extract meaningful insights from unstructured data, facilitating intelligent decision-making in real time. LLMs can also predict spectrum availability and potential interference by analyzing historical data and current communication patterns. Thus, UAVs can proactively adjust their communication parameters, such as channel selection and power levels, to maintain optimal performance.

Furthermore, LLMs can process sensor data and identify patterns indicating potential frequency conflicts or areas of congestion. UAVs can then autonomously make adjustments to avoid these issues, enhancing operational efficiency and reducing the risk of communication failures. In addition, LLMs can contribute to cognitive radio enhancements by providing a deeper analysis of spectrum conditions and user behavior, which supports more informed choices about frequency selection. This integration enhances UAVs’ ability to select the least congested and most efficient channels. The continuous learning and adaptation ability of LLMs can also optimize UAVs’ spectrum access strategies, ensuring they utilize the best available frequencies based on real-time data and sophisticated algorithms. On the regulatory side, LLMs can help ensure that UAVs operate within the legal spectrum allocations by continuously monitoring compliance parameters and adapting to regulatory changes. By analyzing communication patterns and environmental data, they can also contribute significantly to interference management and adherence to regulatory frameworks, detecting potential interference sources more accurately and suggesting immediate corrective actions to avoid them.
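One way to picture the LLM-assisted channel selection discussed above is to serialize spectrum sensor summaries into a text prompt and validate the model's reply before acting on it. The sketch below is a hedged illustration: the prompt wording, the `llm` callable (any function mapping a prompt string to a reply string), and the fallback rule are assumptions introduced here and do not correspond to a specific system in the cited literature.

```python
def build_spectrum_prompt(readings, allowed_channels):
    """readings maps channel -> (occupancy fraction, interference in dBm)."""
    lines = [
        f"channel {ch}: occupancy {occ:.0%}, interference {dbm:.1f} dBm"
        for ch, (occ, dbm) in sorted(readings.items())
    ]
    allowed = ", ".join(str(ch) for ch in sorted(allowed_channels))
    return "\n".join(
        ["Spectrum sensor summary:"]
        + lines
        + [
            f"Permitted channels: {allowed}",
            "Reply with the single best channel number.",
        ]
    )


def choose_channel(readings, allowed_channels, llm):
    """Ask the (hypothetical) LLM for a channel and validate its reply."""
    candidates = [ch for ch in readings if ch in allowed_channels]
    if not candidates:
        return None
    # Deterministic fallback: the least-occupied permitted channel.
    fallback = min(candidates, key=lambda ch: readings[ch][0])
    reply = llm(build_spectrum_prompt(readings, allowed_channels))
    try:
        choice = int(str(reply).strip())
    except ValueError:
        return fallback  # reply was not a bare channel number
    # Never act on a channel outside the permitted, observed set.
    return choice if choice in candidates else fallback
```

The validation step matters more than the prompt: whatever the model returns, the UAV only ever switches to a channel that was both observed and permitted.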

VI Applications and Use Cases of LLMs in UAVs

VI-A Surveillance and Reconnaissance Applications

LLMs offer advanced cognitive and analytical abilities that can significantly enhance the efficiency, accuracy, and effectiveness of UAV surveillance systems [2]. With LLM integration, UAVs can process and analyze large volumes of visual data more efficiently, enabling real-time image recognition, object detection, and situation awareness. LLMs can identify specific objects, individuals, vehicles, or activities in video streams or images, providing detailed insights crucial for military and civilian surveillance operations. LLM integration also enables UAVs to operate more autonomously by interpreting and reacting to their surroundings without constant human oversight, which is especially beneficial in complex or hostile environments where response times are critical.

Moreover, UAVs equipped with LLMs can make real-time decisions about flight paths, areas to focus on, and when to capture critical footage based on the mission’s objectives and evolving ground realities. NLP allows UAVs to understand and process human language, enabling them to receive and interpret more complex commands and queries. In addition, LLMs can predict potential security threats or points of interest by analyzing patterns and historical data. This predictive capability allows for proactive surveillance measures, where UAVs can monitor suspected areas more closely or alert human operators about unusual activities or anomalies detected based on learned patterns. LLMs can also enhance real-time decision support by processing and analyzing data on the fly and summarizing vast amounts of collected data into actionable intelligence. This enables quick and informed decisions, which are crucial in surveillance and reconnaissance missions where conditions can change rapidly [133].