Robust Low-Cost Drone Detection and Classification in Low SNR Environments

The proliferation of drones, or unmanned aerial vehicles (UAVs), has raised significant safety concerns due to their potential misuse in activities such as espionage, smuggling, and infrastructure disruption. This paper addresses the critical need for effective drone detection and classification systems that operate independently of UAV cooperation.

We evaluate various convolutional neural networks (CNNs) for their ability to detect and classify drones using spectrogram data derived from consecutive Fourier transforms of signal components. The focus is on model robustness in low signal-to-noise ratio (SNR) environments, which is critical for real-world applications.

A comprehensive dataset is provided to support future model development. In addition, we demonstrate a low-cost drone detection system using a standard computer, software-defined radio (SDR) and antenna, validated through real-world field testing. On our development dataset, all models consistently achieved an average balanced classification accuracy of ≥ 85% at SNR > −12 dB.

In the field test, these models achieved an average balanced accuracy of > 80%, depending on transmitter distance and antenna direction. Our contributions include: a publicly available dataset for model development, a comparative analysis of CNNs for drone detection under low SNR conditions, and the deployment and field evaluation of a practical, low-cost detection system.

Submission history

From: Stefan Glüge. [v1] Wed, 26 Jun 2024 12:50:55 UTC (16,275 KB)

Summary

The paper focuses on radio frequency (RF) signals from several types of consumer and hobbyist drones. Specifically:

- 1. The development dataset included signals from 6 drones and 4 remote controllers:

- - DJI Phantom 4 Pro drone and its remote control

- - Futaba T7C remote control

- - Futaba T14SG remote control and R7008SB receiver

- - Graupner mx-16 remote control and GR-16 receiver

- - Taranis ACCST X8R Receiver

- - Turnigy 9X remote control

- 2. The signals were recorded in the 2.4 GHz ISM band, which is commonly used by consumer drones for communication between the drone and its remote control.

- 3. The paper mentions that these signals occur in short bursts, typically 1.3-2 ms long, with repetition periods ranging from about 60 to 600 ms depending on the specific drone model.

- 4. The signals were recorded at a sampling frequency of 56 MHz initially, then downsampled to 14 MHz for processing.

- 5. For the field test, they used a slightly different set of drones/controllers, including:

- - DJI Phantom Pro 4 drone and remote

- - Futaba T14 remote control

- - Futaba T7 remote control

- - FrSky Taranis Q X7 remote control

- - Turnigy Evolution remote control

The focus was on the RF signals emitted by these consumer-grade drones and their remote controls, as detecting these signals can indicate the presence of a drone even when it is not visible.

This paper presents research on detecting and classifying drones using radio frequency (RF) signals and convolutional neural networks (CNNs). Key points include:

- 1. The authors developed CNN models to detect and classify drones using spectrogram data from RF signals.

- 2. They focused on model robustness in low signal-to-noise ratio (SNR) environments, which is important for real-world applications.

- 3. A comprehensive dataset was created and made publicly available to support future research.

- 4. The authors implemented a low-cost drone detection system using standard computer hardware, software-defined radio, and an antenna.

- 5. In lab tests, the models achieved ≥85% balanced accuracy at SNRs above -12 dB.

- 6. Field tests showed >80% average balanced accuracy, varying based on transmitter distance and antenna direction.

- 7. The simplest model (VGG11 BN) performed as well as more complex models.

- 8. Most misclassifications occurred between noise and drone signals, rather than between different drone types.

- 9. The system could reliably detect drones up to 670 meters away in real-world conditions.

- 10. Limitations included potential interference in field tests and the inability to detect multiple simultaneous transmitters.

The research demonstrates the feasibility of using CNNs for drone detection with RF signals in challenging real-world conditions, while also providing resources for further research in this area.

The paper primarily focused on using variations of the Visual Geometry Group (VGG) CNN architecture. Specifically:

1. VGG11 BN

2. VGG13 BN

3. VGG16 BN

4. VGG19 BN

Key points about these models:

- The "BN" suffix indicates that these versions include batch normalization layers after the convolutions.

- The main idea of the VGG architecture is to use multiple layers of small (3x3) convolutional filters instead of larger ones.

- The number in each model name (11, 13, 16, 19) refers to the number of layers with weights in the network.

- For the dense classification layer, they used 256 linear units followed by 7 linear units at the output (one unit per class).

- The models were trained on 2D spectrogram data derived from the RF signals.

- Interestingly, the authors found no significant performance advantage in using the more complex models (like VGG19 BN) over the simplest model (VGG11 BN) for this specific task.

- The VGG11 BN model, being the least complex, required the least number of training epochs to achieve optimal performance on the validation set.

The authors chose these VGG variants due to their proven effectiveness in image classification tasks, adapting them to work with the 2D spectrogram representation of the RF signals. They focused on comparing the performance of these different VGG variants rather than exploring other types of CNN architectures.

Corresponding author: Stefan Glüge (email: stefan.gluege@zhaw.ch).

Abstract

The proliferation of drones, or unmanned aerial vehicles (UAVs), has raised significant safety concerns due to their potential misuse in activities such as espionage, smuggling, and infrastructure disruption. This paper addresses the critical need for effective drone detection and classification systems that operate independently of UAV cooperation. We evaluate various convolutional neural networks (CNNs) for their ability to detect and classify drones using spectrogram data derived from consecutive Fourier transforms of signal components. The focus is on model robustness in low signal-to-noise ratio (SNR) environments, which is critical for real-world applications. A comprehensive dataset is provided to support future model development. In addition, we demonstrate a low-cost drone detection system using a standard computer, software-defined radio (SDR) and antenna, validated through real-world field testing. On our development dataset, all models consistently achieved an average balanced classification accuracy of ≥ 85% at SNR > −12 dB. In the field test, these models achieved an average balanced accuracy of > 80%, depending on transmitter distance and antenna direction. Our contributions include: a publicly available dataset for model development, a comparative analysis of CNNs for drone detection under low SNR conditions, and the deployment and field evaluation of a practical, low-cost detection system.

Index Terms: Deep neural networks, Robustness, Signal detection, Unmanned aerial vehicles

1 INTRODUCTION

Drones, or civil UAVs, have evolved from hobby toys to commercial systems with many applications. In particular, mini/amateur drones have become ubiquitous. With the proliferation of these low-cost, small and easy-to-fly drones, safety issues have become more pressing (e.g. spying, transfer of illegal or dangerous goods, disruption of infrastructure, assault). Although regulations and technical solutions (such as transponder systems) are in place to safely integrate UAVs into the airspace, detection and classification systems that do not rely on the cooperation of the UAV are necessary. Various technologies such as audio, video, radar, or radio frequency (RF) scanners have been proposed for this task [1].

In this paper, we evaluate different CNNs for drone detection and classification using the spectrogram data computed with consecutive Fourier transforms for the real and imaginary parts of the signal. To facilitate future model development, we make the dataset publicly available. In terms of performance, we focus on the robustness of the models to low SNRs, as this is the most relevant aspect for a real-world application of the system. Furthermore, we evaluate a low-cost drone detection system consisting of a standard computer, SDR, and antenna in a real-world field test.

Our contributions can therefore be summarised as follows:

- We provide the dataset used to develop the model. Together with the code to load and transform the data, it can be easily used for future model development.

- We compare different CNNs using 2D spectrogram data for detection and classification of drones based on their RF signals under challenging conditions, i.e. low SNRs down to dB.

- We visualise the model embeddings to understand how the model clusters and separates different classes, to identify potential overlaps or ambiguities, and to examine the hierarchical relationships within the learned features.

- We implement the models in a low-cost detection system and evaluate them in a field test.

1.1 RELATED WORK

A literature review on drone detection methods based on deep learning (DL) is given in [1] and [2]. Both works reflect the state of the art in . Different DL algorithms are discussed with respect to the techniques used to detect drones based on visual, radar, acoustic, and RF signals. Given these general overviews, we briefly summarise recent work based on RF data, with a particular focus on the data side of the problem to motivate our work.

With the advent of DL-based methods, the data used to train models became the cornerstone of any detection system. Table 1 provides an overview of openly available datasets of RF drone signals. The DroneRF dataset [3] is one of the first openly available datasets. It contains RF time series data from three drones in four flight modes (i.e. on, hovering, flying, video recording) recorded by two universal software radio peripheral (USRP) SDR transceivers [4]. The dataset is widely used and enabled follow-up work with different approaches to classification systems, i.e. DL-based [5, 6], focused on pre-processing and combining signals from two frequency bands [7], genetic algorithm-based heterogeneous integrated k-nearest neighbour [8], and hierarchical reinforcement learning-based [9]. In general, the classification accuracies reported in the papers on the DroneRF dataset are close to . Specifically, [4], [5], and [6] report an average accuracy of , , and , respectively, to detect the presence of a drone. There is therefore an obvious need for a harder, more realistic dataset.

Consequently, [10] investigate the detection and classification of drones in the presence of Bluetooth and Wi-Fi signals. Their system used a multi-stage detector to distinguish drone signals from the background noise and interfering signals. Once a signal was identified as a drone signal, it was classified using machine learning (ML) techniques. The detection performance of the proposed system was evaluated for different SNRs. The corresponding recordings ( drone controls from eight different manufacturers) are openly available [11]. Unfortunately, the Bluetooth/Wi-Fi noise is not part of the dataset. Ozturk et al. [12] used the dataset to further investigate the classification of RF fingerprints at low SNRs by adding white Gaussian noise to the raw data. Using a CNN, they achieved classification accuracies ranging from to for SNR dB.

The openly available DroneDetect dataset [13] was created by Swinney and Woods [14]. It contains raw in-phase and quadrature (IQ) data recorded with a BladeRF SDR. Seven drone models were recorded in three different flight modes (on, hovering, flying). Measurements were also repeated with different types of noise, such as interference from a Bluetooth speaker, a Wi-Fi hotspot, and simultaneous Bluetooth and Wi-Fi interference. The dataset does not include measurements without drones, which would be necessary to evaluate a drone detection system. The results in [14] show that Bluetooth signals are more likely to interfere with detection and classification accuracy than Wi-Fi signals. Overall, frequency domain features extracted from a CNN were shown to be more robust than time domain features in the presence of interference. In [15] the drone signals from the DroneDetect dataset were augmented with Gaussian noise and SDR recorded background noise. Hence, the proposed approach could be evaluated regarding its capability to detect drones. They trained a CNN end-to-end on the raw IQ data and report an accuracy of for detection and between and for classification.

The Cardinal RF dataset [16] consists of the raw time series data from six drones + controller, two Wi-Fi and two Bluetooth devices. Based on this dataset, Medaiyese et al. [17] proposed a semi-supervised framework for UAV detection using wavelet analysis. Accuracy between and was achieved at SNRs of dB and dB, while it dropped to chance level for SNRs below dB to dB. In addition, [18] investigated different wavelet transforms for the feature extraction from the RF signals. Using the wavelet scattering transform from the steady state of the RF signals at dB SNR to train SqueezeNet [19], they achieved an accuracy of at dB SNR.

In our previous work [20], we created the noisy drone RF signals dataset (https://www.kaggle.com/datasets/sgluege/noisy-drone-rf-signal-classification) from six drones and four remote controllers. It consists of non-overlapping signal vectors of samples, corresponding to ms at MHz. We added Labnoise (Bluetooth, Wi-Fi, Amplifier) and Gaussian noise to the dataset and mixed it with the drone signals with SNR dB. Using IQ data and spectrogram data to train different CNNs, we found an advantage in favour of the 2D spectrogram representation of the data. There was no performance difference at SNR dB but a major improvement in the balanced accuracy at low SNR levels, i.e. % on the spectrogram data compared to % on the IQ data at dB SNR.

Recently, [21] proposed an anchor-free object detector based on keypoints for drone RF signal spectrograms. They also proposed an adversarial learning-based data adaptation method to generate domain independent and domain aligned features. Given five different types of drones, they report a mean average precision of , which drops to when adding Gaussian noise with dB SNR. The raw data used in their work is available (https://www.kaggle.com/datasets/zhaoericry/drone-rf-dataset), but unfortunately it is not usable without further documentation.

Table 1: Overview of openly available drone RF datasets.

| Dataset | Year | Datatype | UAV | Noise | Size |
|---|---|---|---|---|---|
| DroneRF [3] | 2019 | Raw Amplitude | drones + controller | Background RF activities | GB |
| Drone remote controller RF signal dataset [11] | 2020 | Raw Amplitude | controller | none | GB |
| DroneDetect dataset [13] | 2020 | Raw IQ | drones + controller | Bluetooth, Wi-Fi devices | GB |
| Cardinal RF [16] | 2022 | Raw Amplitude | drones + controller | Bluetooth, Wi-Fi | GB |
| Noisy drone RF signals [20] | 2023 | Pre-processed IQ and Spectrogram | drones + controller | Bluetooth, Wi-Fi, Gauss | GB |

1.2 MOTIVATION

As we have seen in other fields, such as computer vision, the success of DL can be attributed to: (a) high-capacity models; (b) increased computational power; and (c) the availability of large amounts of labelled data [22]. Thus, given the large amount of available raw RF signals (cf. Tab. 1) we promote the idea of open and reusable data, to facilitate model development and model comparison.

With the noisy drone RF signals dataset [20], we have provided a first ready-to-use dataset to enable rapid model development, without the need for any data preparation. Furthermore, the dataset contains samples that can be considered as “hard” in terms of noise, i.e. Bluetooth + Wi-Fi + Gaussian noise at very low SNRs, and allows a direct comparison with the published results.

While the models proposed in [20] performed reasonably well in the training/lab setting, we found it difficult to transfer their performance to practical application. The reason was the choice of rather short signal vectors of samples, corresponding to ms at MHz. Since the drone signals occur in short bursts of about 1.3-2 ms with a repetition period of about 60-600 ms, our continuously running classifier predicts a drone whenever a burst occurs and noise during the repetition period of the signal. Therefore, in order to provide a stable and reliable classification every second, one would need an additional “layer” to pool the classifier outputs given every ms.
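To make the pooling idea concrete, the following is a minimal sketch of such an additional “layer”: a simple vote over the per-window labels collected within one second. This is not the approach taken in this paper (the authors lengthen the input signal instead), and the function name `pool_predictions`, the `min_hits` parameter, and the voting rule are hypothetical choices made for illustration only.

```python
from collections import Counter


def pool_predictions(window_labels, noise_label="Noise", min_hits=2):
    """Pool per-window classifier outputs into one label per time interval.

    A drone class is reported only if it was predicted in at least
    `min_hits` windows; otherwise the interval is labelled as noise.
    (Hypothetical rule, chosen for illustration.)
    """
    # Count only the windows in which the classifier saw a drone burst.
    hits = Counter(label for label in window_labels if label != noise_label)
    if not hits:
        return noise_label
    label, count = hits.most_common(1)[0]
    return label if count >= min_hits else noise_label


# Most windows fall between bursts and are classified as noise,
# yet the pooled decision for the interval is still "DJI".
print(pool_predictions(["Noise", "Noise", "DJI", "Noise", "DJI"]))
```

Such a rule needs its own tuning (window count, threshold), which is one reason an end-to-end classifier on longer inputs can be the simpler option.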

In the present work, we follow a data-centric approach and simply increase the length of the input signal to ms to train a classifier in an end-to-end manner. Again, we provide the data used for model development in the hope that it will inspire others to develop better models.

In the next section, we briefly describe the data collection and preprocessing procedure. Section 3 describes the model architectures and their training/validation method. In addition, we describe the setup of a low-cost drone detection system and of the field test. The resulting performance metrics are presented in Section 4 and are further discussed in Section 5.

2 MATERIALS

We used the raw RF signals from the drones that were collected in [20]. Nevertheless, we briefly describe the data acquisition process again to provide a complete picture of the development from the raw RF signal to the deployment of a detection system within a single manuscript.

2.1 DATA ACQUISITION

The drone’s remote control and, if present, the drone itself were placed in an anechoic chamber to record the raw RF signal without interference for at least one minute. The signals were received by a log-periodic antenna and sampled and stored by an Ettus Research USRP B210, see Fig. 1. In the static measurement, the respective signals of the remote control (TX) alone or with the drone (RX) were measured. In the dynamic measurement, one person at a time was inside the anechoic chamber and operated the remote control (TX) to generate a signal that is as close to reality as possible. All signals were recorded at a sampling frequency of 56 MHz (highest possible real-time bandwidth). All drone models and recording parameters are listed in Tab. 2, including both uplink and downlink signals.

Figure 1: Recording of drone signals in the anechoic chamber. A DJI Phantom 4 Pro drone with the DJI Phantom GL300F remote control.

Table 2: Transmitters and receivers recorded in the development dataset and their respective class labels. Additionally, we show the center frequency (GHz), the channel spacing (MHz), the burst duration (ms), and the repetition period of the respective signals (ms).

| Transmitter | Receiver | Label | Center Freq. (GHz) | Spacing (MHz) | Duration (ms) | Repetition (ms) |
|---|---|---|---|---|---|---|
| DJI Phantom GL300F | DJI Phantom 4 Pro | DJI | | | | |
| Futaba T7C | - | FutabaT7 | | | | |
| Futaba T14SG | Futaba R7008SB | FutabaT14 | | | | |
| Graupner mx-16 | Graupner GR-16 | Graupner | | | | |
| Bluetooth/Wi-Fi Noise | - | Noise | | | | |
| Taranis ACCST | X8R Receiver | Taranis | | | | |
| Turnigy 9X | - | Turnigy | | | | (a) |

(a) The repetition period of the Turnigy transmitter is not static. First bursts were observed after ms, the following signal bursts were observed in the interval ms.

We also recorded three types of noise and interference. First, Bluetooth/Wi-Fi noise was recorded using the hardware setup described above. Measurements were taken in a public and busy university building. In this open recording setup, we had no control over the exact number or types of active Bluetooth/Wi-Fi devices and the actual traffic in progress.

Second, artificial white Gaussian noise was used, and third, receiver noise was recorded for seconds from the USRP at various gain settings ( dB in steps of dB) without the antenna attached. This should prevent the final model from misclassifying quantisation noise in the absence of a signal, especially at low gain settings.

2.2 DATA PREPARATION

To reduce memory consumption and computational effort, we reduced the bandwidth of the signals by downsampling from 56 MHz to 14 MHz using the SciPy [23] signal.decimate function with an th order Chebyshev type I filter.

The drone signals occur in short bursts with some low power gain or background noise in between (cf. Tab. 2). We divided the signals into non-overlapping vectors of samples ( ms) and only vectors containing a burst, or at least a partial burst, were used for the development dataset. This was achieved by applying an energy threshold. As the recordings were made in an echo-free chamber, the signal burst is always clearly visible. Hence, we only used vectors that contained a portion of the signal whose energy was above the threshold, which was arbitrarily set at of the average energy of the entire recording.
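The selection step described above can be sketched as follows. This is a minimal illustration, not the authors' code: the function names, the `window` length used to test a "portion" of a vector, and the `rel_threshold` value are all assumptions, since the actual vector length and threshold are not given here.

```python
def mean_energy(samples):
    """Average energy |x|^2 per sample (works for real or complex samples)."""
    return sum(abs(s) ** 2 for s in samples) / len(samples)


def split_into_vectors(samples, vector_len):
    """Cut a recording into non-overlapping vectors, dropping the incomplete tail."""
    n_vectors = len(samples) // vector_len
    return [samples[i * vector_len:(i + 1) * vector_len] for i in range(n_vectors)]


def select_burst_vectors(samples, vector_len, rel_threshold, window=4):
    """Keep vectors in which some short window exceeds the energy threshold.

    The threshold is `rel_threshold` times the average energy of the whole
    recording; `window` is a hypothetical portion length.
    """
    threshold = rel_threshold * mean_energy(samples)
    selected = []
    for vec in split_into_vectors(samples, vector_len):
        # Test consecutive short windows so that partial bursts are kept too.
        has_burst = any(
            mean_energy(vec[j:j + window]) > threshold
            for j in range(0, len(vec) - window + 1, window)
        )
        if has_burst:
            selected.append(vec)
    return selected


# Toy recording: low-level background with one short burst.
recording = [0.01] * 16 + [1.0] * 4 + [0.01] * 12
burst_vectors = select_burst_vectors(recording, vector_len=8, rel_threshold=2.0)
print(len(burst_vectors))
```

Because the recordings come from an anechoic chamber, a relative threshold like this separates bursts from background cleanly; in a noisy environment the same rule would need a more careful choice of threshold.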

The selected drone signal vectors with were normalised to a carrier power of per sample, i.e. only the part of the signal vector containing drone bursts was considered for the power calculation ( samples out of ). This was achieved by identifying the bursts as those samples where a smoothed energy was above a threshold. The signal vectors are thus normalised by

(1)

Noise vectors (Bluetooth, Wi-Fi, Amplifier, Gauss) with samples were normalised to a mean power of with

(2)

Finally, the normalised drone signal vectors were mixed with the normalised noise vectors by

(3)

to generate the noisy drone signal vectors at different SNRs.
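The normalisation and mixing steps can be illustrated with a short sketch. The exact expressions of Eqs. (1) to (3) are not reproduced here, so the code below makes explicit assumptions: both signal and noise are scaled to unit power, the burst samples are given by a precomputed boolean mask (`burst_mask`, hypothetical), and the mixture uses the common form y = (sqrt(k) x + n) / sqrt(k + 1) with k = 10^(SNR/10). The authors' formulas may differ in detail.

```python
import math


def mean_power(samples):
    """Mean power |x|^2 per sample."""
    return sum(abs(s) ** 2 for s in samples) / len(samples)


def normalise_signal(signal, burst_mask):
    """Scale the vector so that the samples flagged as burst have unit mean power."""
    burst = [s for s, is_burst in zip(signal, burst_mask) if is_burst]
    scale = math.sqrt(mean_power(burst))
    return [s / scale for s in signal]


def normalise_noise(noise):
    """Scale the noise vector to unit mean power."""
    scale = math.sqrt(mean_power(noise))
    return [n / scale for n in noise]


def mix_at_snr(signal_hat, noise_hat, snr_db):
    """Mix unit-power signal and noise at a given SNR (assumed mixing rule)."""
    k = 10 ** (snr_db / 10)  # linear power ratio
    return [
        (math.sqrt(k) * s + n) / math.sqrt(k + 1)
        for s, n in zip(signal_hat, noise_hat)
    ]


signal = [0j, 0j, 2 + 0j, -2 + 0j]   # burst in the last two samples
mask = [False, False, True, True]
noise = [3.0, -3.0, 3.0, -3.0]

signal_hat = normalise_signal(signal, mask)
noise_hat = normalise_noise(noise)
mixed = mix_at_snr(signal_hat, noise_hat, snr_db=0.0)
```

Dividing by sqrt(k + 1) keeps the total power of the mixture roughly constant across SNR levels, so a model cannot infer the SNR from the overall amplitude alone.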

2.3 DEVELOPMENT DATASET

To facilitate future model development, we provide our resulting dataset (https://www.kaggle.com/datasets/sgluege/noisy-drone-rf-signal-classification-v2) along with a code example (https://github.com/sgluege/noisy-drone-rf-signal-classification-v2) to load and inspect the data. The dataset consists of the non-overlapping signal vectors of samples, corresponding to ms at 14 MHz.

As described in Sec. 2.2, the drone signals were mixed with noise. More specifically, of the drone signals were mixed with Labnoise (Bluetooth + Wi-Fi + Amplifier) and with Gaussian noise. In addition, we created a separate noise class by mixing Labnoise and Gaussian noise in all possible combinations (i.e., Labnoise + Labnoise, Labnoise + Gaussian noise, Gaussian noise + Labnoise, and Gaussian noise + Gaussian noise). For the drone signal classes, as for the noise class, the number of samples for each SNR level was evenly distributed over the interval of SNRs dB in steps of dB, i.e., - samples per SNR level. The resulting number of samples per class is given in Tab. 3.

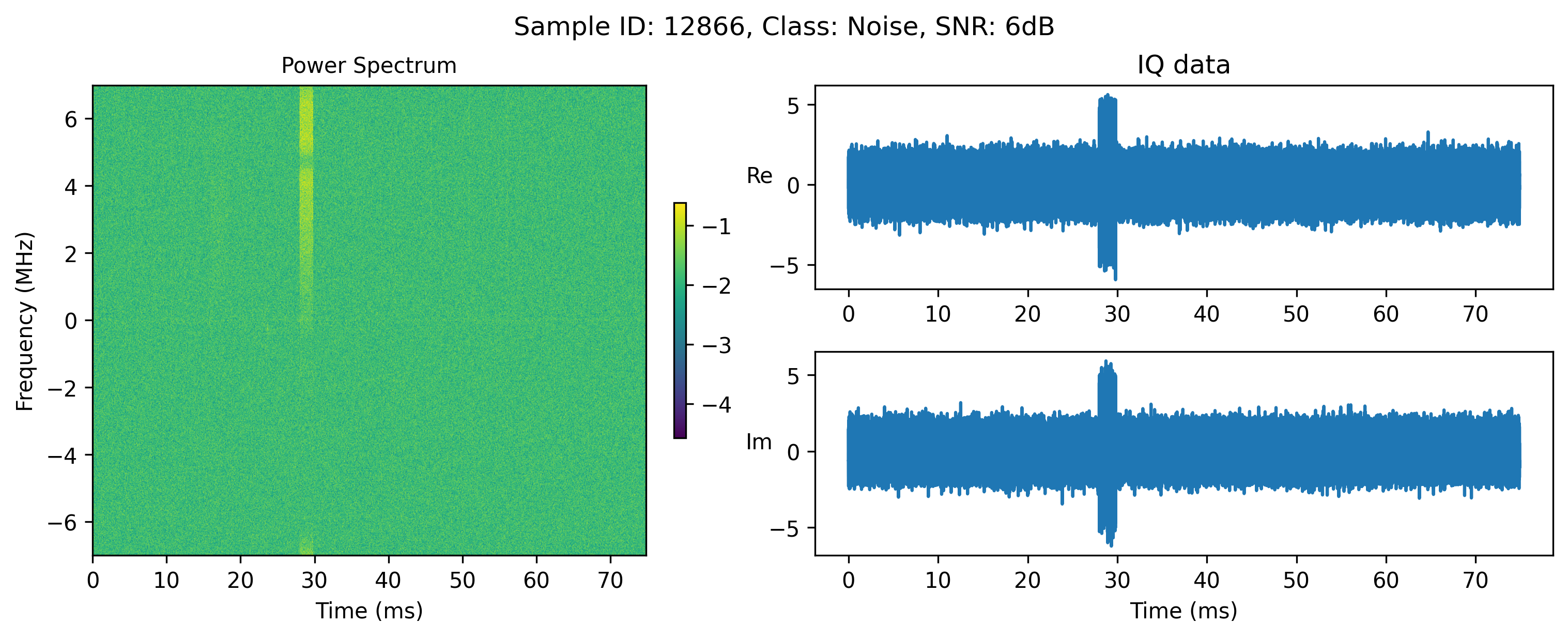

Table 3: Number of samples in the different classes in the development dataset.

| Class | DJI | FutabaT14 | FutabaT7 | Graupner | Taranis | Turnigy | Noise |
|---|---|---|---|---|---|---|---|
| #samples | | | | | | | |

In our previous work [20] we found an advantage in using the spectrogram representation of the data compared to the IQ representation, especially at low SNR levels. Therefore, we transform the raw IQ signals by computing the spectrum of each sample with consecutive Fourier transforms with non-overlapping segments of length for the real and imaginary parts of the signal. That is, the two IQ signal vectors () are represented as two matrices (). Fig. 2 shows four samples of the dataset at different SNRs. Note that we have plotted the log power spectrogram of the complex spectrum as

(4)
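The spectrogram transformation can be sketched in plain Python as below. This is one interpretation under stated assumptions: each non-overlapping segment of the complex IQ vector is Fourier transformed, and the real and imaginary parts of the resulting spectrum are stored as two matrices. The log power is computed as 10 log10(Re^2 + Im^2), which is a common definition and not necessarily the exact form of Eq. (4). The naive `dft` helper and the small `eps` constant are illustrative; in practice an FFT routine would be used.

```python
import cmath
import math


def dft(segment):
    """Naive discrete Fourier transform of one segment (O(n^2), for illustration)."""
    n = len(segment)
    return [
        sum(segment[t] * cmath.exp(-2j * cmath.pi * f * t / n) for t in range(n))
        for f in range(n)
    ]


def spectrogram(iq, n_fft):
    """Transform consecutive non-overlapping segments; return real and imaginary matrices."""
    n_segments = len(iq) // n_fft
    spectra = [dft(iq[i * n_fft:(i + 1) * n_fft]) for i in range(n_segments)]
    real = [[c.real for c in row] for row in spectra]
    imag = [[c.imag for c in row] for row in spectra]
    return real, imag


def log_power(real, imag, eps=1e-12):
    """Log power spectrogram in dB; eps avoids log(0) (assumed definition)."""
    return [
        [10 * math.log10(r * r + i * i + eps) for r, i in zip(row_r, row_i)]
        for row_r, row_i in zip(real, imag)
    ]


# Toy input: a complex tone that falls exactly on frequency bin 2.
n_fft = 8
iq = [cmath.exp(2j * cmath.pi * 2 * t / n_fft) for t in range(2 * n_fft)]
real, imag = spectrogram(iq, n_fft)
power_db = log_power(real, imag)
```

The two matrices `real` and `imag` play the role of the two input channels of the CNN, while `power_db` is only needed for plotting.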

Figure 2: Log power spectrogram and IQ data samples from the development dataset at different SNRs: (a) FutabaT14, (b) DJI, (c) Taranis, (d) Noise.

2.4 DETECTION SYSTEM PROTOTYPE

For field use, a system based on a mobile computer was used as shown in Fig. 3 and illustrated in Fig. 4. The RF signals were received using a directional left-hand circularly polarised antenna (H&S SPA 2400/70/9/0/CP). The antenna gain of dBi and the front-to-back ratio of dB helped to increase the detection range and to attenuate the unwanted interferers in the opposite direction. Circular polarisation was chosen to eliminate the alignment problem, as the transmitting antennas have a linear polarisation. The USRP B210 was used to down-convert and digitise the RF signal at a sampling rate of Msps. On the mobile computer, the GNU Radio program collected the baseband IQ samples in batches of one second and sent one batch at a time to our PyTorch model, which classified the signal. To speed up the computations in the model, we utilised an Nvidia GPU in the computer. The classification results were then visualised in real time in a dedicated GUI.

Figure 3: Block diagram of the mobile drone detection system.

Figure 4: Detection prototype at the Zurich Lake in Rapperswil.

3 METHODS

3.1 MODEL ARCHITECTURE AND TRAINING

As in [20] we chose the Visual Geometry Group (VGG) CNN architecture [24]. The main idea of this architecture is to use multiple layers of small (3×3) convolutional filters instead of larger ones. This is intended to increase the depth and expressiveness of the network, while reducing the number of parameters. There are several variants of this architecture, which differ in the number of convolutional layers ( and , respectively). We used a variant with a batch normalisation [25] layer after the convolutions, denoted as VGG11_BN to VGG19_BN. For the dense classification layer, we used 256 linear units followed by 7 linear units at the output (one unit per class).

A stratified 5-fold train-validation-test split was used as follows. In each fold, we trained a network using 80% and 20% of the available samples of each class for training and testing, respectively. Repeating the stratified split five times ensures that each sample was in the test set exactly once in each experiment. Within the training set, of the samples were used as the validation set during training.
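The split procedure can be sketched as follows. This is an illustrative stdlib-only version, not the authors' code: the function names `stratified_kfold` and `holdout` are hypothetical, and the validation fraction is left as a parameter because its value is not given here.

```python
import random
from collections import defaultdict


def stratified_kfold(labels, k=5, seed=0):
    """Yield (train_idx, test_idx) pairs; every sample is in exactly one test fold.

    Samples of each class are shuffled and dealt round-robin into k folds,
    so each fold keeps the class proportions of the full dataset.
    """
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(k)]
    for indices in by_class.values():
        rng.shuffle(indices)
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    for i in range(k):
        test = sorted(folds[i])
        train = sorted(idx for j, fold in enumerate(folds) if j != i for idx in fold)
        yield train, test


def holdout(indices, labels, fraction, seed=0):
    """Split off a stratified validation subset of the given fraction."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx in indices:
        by_class[labels[idx]].append(idx)
    val = []
    for members in by_class.values():
        rng.shuffle(members)
        val.extend(members[:round(fraction * len(members))])
    val_set = set(val)
    train = [idx for idx in indices if idx not in val_set]
    return train, sorted(val)


labels = ["DJI"] * 10 + ["Noise"] * 20
for train_idx, test_idx in stratified_kfold(labels, k=5):
    # fraction=0.25 is an arbitrary example value
    train_idx, val_idx = holdout(train_idx, labels, fraction=0.25)
```

With stratification, even a rare class contributes the same share to every test fold, which matters here because the noise class is larger than the drone classes.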

3.2 MODEL EVALUATION

During training, the model was evaluated on the validation set after each epoch. If the balanced accuracy on the validation set increased, the model was saved. After training, the model with the highest balanced accuracy on the validation set was evaluated on the withheld test data. The performance of the models on the test data was assessed in terms of classification accuracy and balanced accuracy.
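The checkpointing rule described above amounts to keeping the first epoch that reaches the highest validation score. A minimal sketch, assuming the per-epoch validation balanced accuracies are already available as a list (the function name and the example values are hypothetical):

```python
def select_best_epoch(val_scores):
    """Return (epoch, score) of the checkpoint that would be kept.

    A checkpoint is replaced only when the validation score strictly
    increases, so ties keep the earlier epoch.
    """
    best_epoch, best_score = None, float("-inf")
    for epoch, score in enumerate(val_scores, start=1):
        if score > best_score:
            # In a real training loop the model weights would be saved here.
            best_epoch, best_score = epoch, score
    return best_epoch, best_score


print(select_best_epoch([0.5, 0.7, 0.7, 0.6]))
```

The returned epoch corresponds to the point at which the highest validation balanced accuracy was first reached.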

As accuracy simply measures the proportion of correct predictions out of the total number of observations, it can be misleading for unbalanced datasets. In our case, the noise class is over-represented in the dataset (cf. Tab. 3). Therefore, we also report the balanced accuracy, which is defined as the average of the recall obtained for each class, i.e. it gives equal weight to each class regardless of how frequent or rare it is.
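The difference between the two metrics is easy to see in a small example. The sketch below implements both definitions in plain Python; the toy labels are made up for illustration and are not taken from the dataset.

```python
from collections import defaultdict


def accuracy(y_true, y_pred):
    """Fraction of correct predictions over all samples."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def balanced_accuracy(y_true, y_pred):
    """Average of the per-class recall, giving each class equal weight."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for t, p in zip(y_true, y_pred):
        total[t] += 1
        correct[t] += int(t == p)
    recalls = [correct[c] / total[c] for c in total]
    return sum(recalls) / len(recalls)


# A classifier that always predicts the over-represented class.
y_true = ["Noise"] * 8 + ["DJI"] * 2
y_pred = ["Noise"] * 10
print(accuracy(y_true, y_pred), balanced_accuracy(y_true, y_pred))
```

Here the plain accuracy looks acceptable even though the drone class is never detected, while the balanced accuracy exposes the failure.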

3.3 VISUALISATION OF MODEL EMBEDDINGS

Despite their effectiveness, CNNs are often criticised for being “black boxes”. Understanding the feature representations, or embeddings, learned by the CNN helps to demystify these models and provide some understanding of their capabilities and limitations. In general, embeddings are high-dimensional vectors generated by the intermediate layers that capture essential patterns from the input data.

In our case, we chose the least complex VGG11_BN model to visualise its embeddings. When running inference on the test data, we collected the activations at the last dense classification layer, which consists of 256 units. Given test samples, this results in a matrix. Using t-distributed Stochastic Neighbor Embedding (t-SNE) [28] and Uniform Manifold Approximation and Projection (UMAP) [29] as dimensionality reduction techniques, we project these high-dimensional embeddings into a lower-dimensional space, creating interpretable visualisations that reveal the model’s internal data representations.

Our goals were to understand how the model clusters and separates different classes, to identify potential overlaps or ambiguities, and to examine the hierarchical relationships within the learned features.

3.4 DETECTION SYSTEM FIELD TEST

We conducted a field test of the detection system in Rapperswil at the Zurich Lake. The drone detection prototype was placed on the shore (cf. Fig. 4) in line of sight of a wooden boardwalk across the lake, with no buildings to interfere with the signals. The transmitters were mounted on a m long wooden pole. The signals from the transmitters were recorded (and classified in real time) at four positions along the walkway at approximately m, m, m and m from the detection system. Figure 5 shows an overview of the experimental setup. At each recording position, we measured with the directional antenna at three different angles, i.e. at – facing the drones and/or remote controls, at – perpendicular to the direction of the transmitters, and at – in the opposite direction. Directing the antenna in the opposite direction should result in dB attenuation of the radio signals.

Figure 5: Experimental measurement setup at the Zurich Lake in Rapperswil. One can see the four recording positions along the wooden walkway and the detection system positioned at the lake side. Further, recordings were done at different angles of the directional antenna, indicated by the arrows at the detection system.

Table 4 lists the drones and/or remote controls used in the field test. Note that the Graupner drone and remote control are part of the development dataset (cf. Tab. 2), but were not measured in the field experiment. We assume that no other drones were present during the measurements, so recordings where none of our transmitters were used are labelled as “Noise”.

Table 4: Drones and/or remotes used in the field test.

| Class | Drone/remote control |
|---|---|
| DJI | DJI Phantom Pro 4 drone and remote |
| FutabaT14 | Futaba T14 remote control |
| FutabaT7 | Futaba T7 remote control |
| Taranis | FrSky Taranis Q X7 remote control |
| Turnigy | Turnigy Evolution remote control |

For each transmitter, distance, and angle, to s, or approximately spectrograms were live classified and recorded. The resulting number of samples for each class, distance, and angle are shown in Tab. 5.

Table 5: Number of samples (#samples) for each class, distance and antenna direction (angle) recorded in the field test. Recordings at m distance have no active transmitter and were therefore labelled “Noise”.

| Class | #samples | Distance [m] | #samples | Angle [°] | #samples |
|---|---|---|---|---|---|
| DJI | | | | | |
| FutabaT14 | | | | | |
| FutabaT7 | | | | | |
| Noise | | | | | |
| Taranis | | | | | |
| Turnigy | | | | | |

4 RESULTS

4.1 CLASSIFICATION PERFORMANCE ON THE DEVELOPMENT DATASET

Table 6 shows the overall mean ± standard deviation of accuracy and balanced accuracy on the test data of the development dataset (cf. Sec. 2.3), obtained in the 5-fold cross-validation of the different models.

There is no meaningful difference in performance between the models, even when the model complexity increases from VGG11_BN to VGG19_BN. The number of epochs for training (#epochs) shows when the highest balanced accuracy was reached on the validation set. It can be seen that the least complex model, VGG11_BN, required the least number of epochs compared to the more complex models. However, the resulting classification performance is the same.

Table 6: Mean ± standard deviation of the accuracy (Acc.) and the balanced accuracy (balanced Acc.) obtained in 5-fold cross-validation of the different models on the test data of the development dataset. An indication of the model training time is given with the mean ± standard deviation of the number of training epochs (#epochs), i.e. when the highest balanced accuracy on the validation set was reached. The number of trainable parameters (#params) indicates the complexity of the model.

| Model | Acc. | balanced Acc. | #epochs | #params |
|---|---|---|---|---|
| VGG11_BN | | | | |
| VGG13_BN | | | | |
| VGG16_BN | | | | |
| VGG19_BN | | | | |

Figure 6 shows the resulting 5-fold mean balanced accuracy over SNRs dB in dB steps. Note that we do not show the standard deviation to keep the plot readable. In general, we observe a drastic degradation in performance from dB down to near chance level at dB.

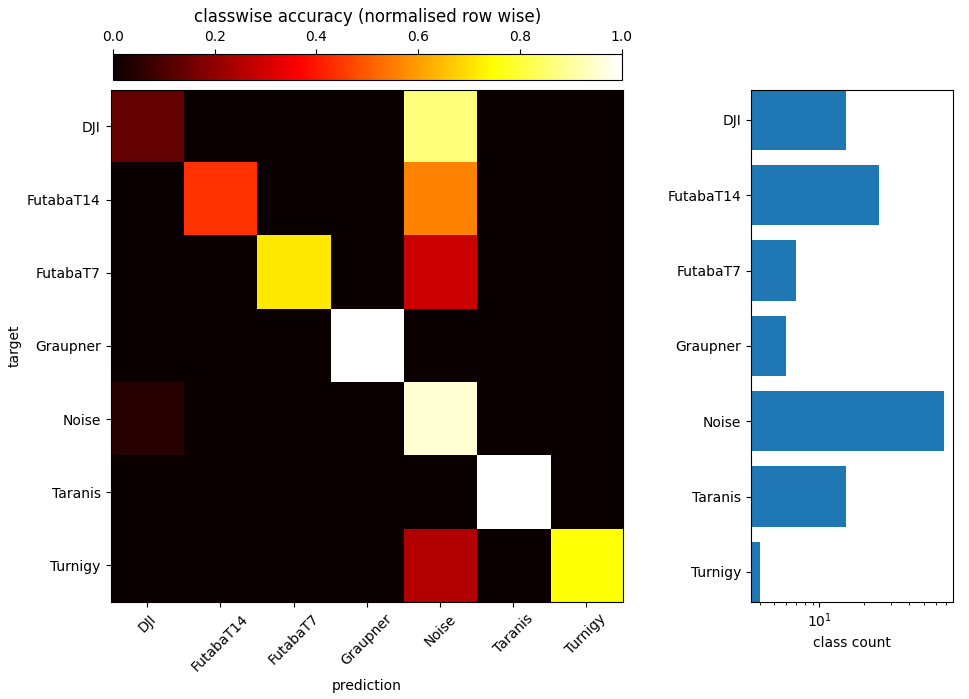

The vast majority of misclassifications occurred between noise and drones and not between different types of drones. Figure 7 illustrates this. It shows the confusion matrix of the VGG11_BN model for a single validation fold on the test data for the samples with dB SNR.

Figure 6: Mean balanced accuracy obtained in the 5-fold cross-validation of the different models on the test set of the development dataset over the SNR levels.

Figure 7: Confusion matrix of the outputs of the VGG11_BN model on a single fold for the samples at dB SNR from the test data. The average balanced accuracy is .

4.2 EMBEDDING SPACE VISUALISATION

Figure 8 shows the 2D t-SNE visualisation of the VGG11_BN embeddings of test samples from the development dataset. It can be seen that each class forms a separate cluster. While the different drone signal clusters are rather small and dense, the noise cluster takes up most of the embedding space and even forms several sub-clusters. This is most likely due to the variety of the signals used in the noise class, i.e. Bluetooth and Wi-Fi signals plus Gaussian noise.

We used t-SNE for dimensionality reduction because of its ability to preserve local structure within the high-dimensional embedding space. Furthermore, t-SNE has been widely adopted in the ML community and has a well-established track record for high-dimensional data visualisation. However, it is sensitive to hyperparameters such as perplexity and requires some tuning, i.e. different parameters can lead to considerably different results.

It can be argued that UMAP would be a better choice due to its balanced preservation of local and global structure together with its robustness to hyperparameters. Therefore, we created a web application (https://visvgg11bndronerfembeddings.streamlit.app) that allows users to test and compare both approaches with different hyperparameters.

Figure 8: 2D t-SNE visualisation of the VGG11_BN embeddings of test samples from the development dataset. The hyperparameters for t-SNE were: metric “euclidean”, number of iterations , perplexity and method for gradient approximation “barnes_hut”.

4.3 CLASSIFICATION PERFORMANCE IN THE FIELD TEST

For each model architecture, we performed 5-fold cross-validation on the development dataset (cf. Sec. 3.1), resulting in five trained models per architecture. We therefore evaluated all five trained models on the field test data. We report the mean ± standard deviation of the balanced accuracy for each model architecture on the complete field test dataset, averaged over all directions and distances, in Tab. 7.

Table 7: Mean ± standard deviation of the balanced accuracy (balanced Acc.) of the complete field test recordings for the different models. Columns: Model, balanced Acc. Models: VGG11_BN, VGG13_BN, VGG16_BN, VGG19_BN.

As observed on the development dataset (cf. Tab. 6), there is no meaningful difference in performance between the model architectures. We therefore focus on VGG11_BN, the simplest model trained, in the more detailed analysis of the field test results.

A live system should trigger an alarm when a drone is present. The question of whether the signal comes from a drone at all is therefore more important than predicting the correct type of drone. For this reason, we also evaluated the models on a binary problem with the two classes “Drone” (for all six classes of drones in the development dataset) and “Noise”.
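The reduction from the multi-class problem to the binary detection problem can be sketched as follows. This is an illustrative assumption about how the labels might be collapsed, not the authors' evaluation code; the helper names and the example labels are hypothetical, and plain accuracy is used here for brevity instead of the balanced accuracy reported in the paper.

```python
NOISE = "Noise"
DRONE = "Drone"


def to_binary(label):
    """Map any drone class to "Drone" and keep "Noise" unchanged."""
    return NOISE if label == NOISE else DRONE


def detection_accuracy(true_labels, predicted_labels):
    """Accuracy of the detection problem after collapsing the classes."""
    pairs = list(zip(true_labels, predicted_labels))
    hits = sum(to_binary(t) == to_binary(p) for t, p in pairs)
    return hits / len(pairs)


# Illustrative labels only.
true_labels = ["DJI", "Taranis", "Noise", "Noise"]
predicted = ["FutabaT14", "Noise", "Noise", "DJI"]

# DJI -> FutabaT14 is wrong as a classification but correct as a detection;
# Taranis -> Noise is a missed drone and Noise -> DJI is a false alarm.
example_detection_accuracy = detection_accuracy(true_labels, predicted)
```

Confusing one drone type with another does not count as an error in this view, which matches the alarm use case: only missed drones and false alarms matter.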

Table 8 shows that the accuracies were highly dependent on the class. Our models generalise well to the drones in the dataset, with the exception of the DJI. The dependence on direction is not as strong as expected. Orienting the antenna 180° away from the transmitter reduces the signal power by about dB, resulting in lower SNR and lower classification accuracy. However, as the transmitters were still quite close to the antenna, the effect is not pronounced. As we have seen on the development dataset in Fig. 6, there is a clear drop in accuracy once the SNR is below dB. Apparently we were still above this threshold, regardless of the direction of the antenna.

Table 8: Mean ± standard deviation of the balanced accuracy of the VGG11_BN models on the field test recordings for the different classes for each direction (, and ). The upper part shows the accuracies for the classification problem (seven classes) and the lower part the accuracies for the detection problem “Drone” or “Noise”. Rows (classification): DJI, FutabaT14, FutabaT7, Noise, Taranis, Turnigy. Rows (detection): Drone, Noise.

What may be surprising is the low accuracy on the signals with no active transmitter, labelled as “Noise”, in the direction of the lake (). Given the uncontrolled nature of a field test, it could well be that a drone was actually flying on the other side of the km wide lake. This could explain the false positives we observed in that direction.

Table 9 shows the average balanced accuracy of the VGG11_BN models on the field test data collected at different distances for each antenna direction. There is a slight decrease in accuracy with distance. However, the longest distance of 670 m appears to be too short to be a problem for the system. Unfortunately, this was the longest distance within line-of-sight that could be recorded at this location.

Table 9: Mean ± standard deviation of the balanced accuracy of the VGG11_BN models on the field test data with active transmitters collected at different distances for each antenna direction (, and ). The upper part shows the accuracies for the classification problem (seven classes) and the lower part the accuracies for the detection problem “Drone” or “Noise”. Rows (classification and detection): distance (m) 110, 340, 560, 670.

Figure 9 shows the confusion matrix for the outputs of the VGG11_BN model of a single fold on the field test data. As with the development dataset (cf. Fig. 7), most of the confusion is between noise and drones rather than between different types of drones.

Figure 9: Confusion matrix of the outputs of the VGG11_BN model on a single fold for the samples from the field test data. The average balanced accuracy is .

5 DISCUSSION

We were able to show that a standard CNN, trained on drone RF signals recorded in a controlled laboratory environment and artificially augmented with noise, generalised well to the more challenging conditions of a real-world field test.

The drone detection system consisted of rather simple, low-budget hardware (a consumer-grade notebook with GPU plus an SDR). Recording parameters such as the sampling frequency and the length of the input vectors were set to enable real-time detection with the limited amount of memory and computing power. Real-time here means that data acquisition, pre-processing and model inference together did not take longer than the signal being processed ( ms per sample in our case).
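The real-time condition described above can be expressed as a ratio of processing time to signal duration. The following is a minimal sketch of such a check; `real_time_factor`, the stand-in processing function and the sample duration of 1 s are hypothetical placeholders, not values or code from the paper.

```python
import time


def real_time_factor(process, sample, sample_duration_s):
    """Return processing time divided by the duration of the signal.

    A value <= 1.0 means the pipeline keeps up with the incoming signal.
    """
    start = time.perf_counter()
    process(sample)  # stands in for pre-processing plus model inference
    elapsed_s = time.perf_counter() - start
    return elapsed_s / sample_duration_s


def dummy_pipeline(sample):
    """Placeholder workload; a real system would run the CNN here."""
    return sum(x * x for x in sample)


# Hypothetical numbers: 1000 values representing 1 s of signal.
factor = real_time_factor(dummy_pipeline, [0.0] * 1000, sample_duration_s=1.0)
keeps_up = factor <= 1.0
```

In practice such a measurement would be repeated over many samples, since a single timing can be distorted by caching or by other processes on the same machine.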

Evidently, the VGG models were able to learn the relevant features for the drone classification from the complex spectrograms of the RF signal. In this respect, we did not find any advantage in using more complex models, such as VGG19_BN, over the least complex model, VGG11_BN (cf. Tabs. 6 and 7).

Furthermore, we have seen that the misclassifications mainly occur between the noise class and the drones, and not between the different drones themselves (cf. Figs. 7 and 9). This is particularly relevant for the application of drone detection systems in security sensitive areas. The first priority is to detect any kind of UAV, regardless of its type.

Based on our experience and results, we see the following limitations of our work. The field test showed that the models can be used and work reliably (cf. Tab. 8). However, it is the nature of a field test that the level of interference from Wi-Fi/Bluetooth noise and the possible presence of other drones cannot be fully controlled. Furthermore, due to the limited space/distance between the transmitter and receiver in our field test setup, we were not able to clearly demonstrate the effect of free space attenuation on detection performance (cf. Tab. 9).
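To give a feeling for the free space attenuation mentioned here, the standard free-space path loss formula can be evaluated for the shortest and longest distances listed in Tab. 9 (110 m and 670 m). This is a sketch under idealised assumptions (isotropic antennas, unobstructed line of sight); the carrier frequency of 2.44 GHz is an assumption for illustration and is not stated in this section.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def fspl_db(distance_m, frequency_hz):
    """Free-space path loss in dB: 20 * log10(4 * pi * d * f / c)."""
    ratio = 4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S
    return 20.0 * math.log10(ratio)


def extra_loss_db(near_m, far_m):
    """Additional loss when moving from near_m to far_m.

    The frequency cancels out, leaving 20 * log10(far / near).
    """
    return 20.0 * math.log10(far_m / near_m)


ASSUMED_FREQUENCY_HZ = 2.44e9  # assumption: 2.4 GHz ISM band

loss_near = fspl_db(110.0, ASSUMED_FREQUENCY_HZ)
loss_far = fspl_db(670.0, ASSUMED_FREQUENCY_HZ)
delta = extra_loss_db(110.0, 670.0)  # about 15.7 dB
```

Under these assumptions the signal at 670 m is roughly 15.7 dB weaker than at 110 m. Whether this is enough to push the SNR into the range where accuracy degrades depends on the transmit power and the noise floor, which this sketch does not model.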

Regarding the use of simple CNNs as classifiers, it is not possible to reliably predict whether multiple transmitters are present. In that case, an object detection approach on the spectrograms could provide a more fine-grained prediction, see for example the works [30, 31] and [21]. Nevertheless, the current approach will still detect a drone if one or more are present.

We have only tested a limited set of VGG architectures. It remains to be seen whether more recent architectures, such as the pre-trained Vision Transformer [32], generalise as well or better. We hope that our development dataset will inspire others to further optimise the model side of the problem and perhaps find a model architecture with better performance.

Another issue to consider is the occurrence of unknown drones, i.e. drones that are not part of the training set. Examining the embedding space (cf. Sec. 4.2) gives a first idea of whether a signal is clearly part of a known dense drone cluster or rather falls into the larger, less dense, noise cluster. We believe that a combination of an unsupervised deep autoencoder approach [33, 34] with an additional classification part (cf. [35]) would allow, first, to provide a stable classification of known samples and, second, to indicate whether a sample is known or rather an anomaly.
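The idea of checking whether an embedding falls into a known dense cluster can be illustrated with a much simpler technique than the autoencoder approach proposed above: a nearest-centroid rule with a distance threshold. The sketch below is a stand-in for illustration only; the function names, the 2D toy embeddings and the threshold are hypothetical.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equally long vectors."""
    n = len(vectors)
    return [sum(v[d] for v in vectors) / n for d in range(len(vectors[0]))]


def nearest_class(embedding, centroids):
    """Return the label and Euclidean distance of the closest centroid."""
    best_label, best_dist = None, math.inf
    for label, centre in centroids.items():
        dist = math.dist(embedding, centre)
        if dist < best_dist:
            best_label, best_dist = label, dist
    return best_label, best_dist


def classify_or_flag(embedding, centroids, max_distance):
    """Known class if close enough to its centroid, otherwise "unknown"."""
    label, dist = nearest_class(embedding, centroids)
    return label if dist <= max_distance else "unknown"


# Toy 2D embeddings (illustrative only, not real model outputs).
centroids = {
    "DJI": centroid([[0.0, 0.0], [0.0, 2.0]]),          # -> [0.0, 1.0]
    "Taranis": centroid([[10.0, 10.0], [12.0, 10.0]]),  # -> [11.0, 10.0]
}
known = classify_or_flag([0.5, 1.0], centroids, max_distance=2.0)
novel = classify_or_flag([5.0, 5.0], centroids, max_distance=2.0)
```

A single global threshold is a strong simplification. Because the noise cluster is large and forms several sub-clusters, a per-class threshold or a density-based criterion would likely be needed in practice.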

References

- [1] N. Al-lQubaydhi, A. Alenezi, T. Alanazi, A. Senyor, N. Alanezi, B. Alotaibi, M. Alotaibi, A. Razaque, and S. Hariri, “Deep learning for unmanned aerial vehicles detection: A review,” Computer Science Review, vol. 51, p. 100614, 2 2024. [Online]. Available: https://linkinghub.elsevier.com/retrieve/pii/S1574013723000813

- [2] M. H. Rahman, M. A. S. Sejan, M. A. Aziz, R. Tabassum, J.-I. Baik, and H.-K. Song, “A comprehensive survey of unmanned aerial vehicles detection and classification using machine learning approach: Challenges, solutions, and future directions,” Remote Sensing, vol. 16, p. 879, 3 2024. [Online]. Available: https://www.mdpi.com/2072-4292/16/5/879

- [3] M. S. Allahham, M. F. Al-Sa’d, A. Al-Ali, A. Mohamed, T. Khattab, and A. Erbad, “Dronerf dataset: A dataset of drones for rf-based detection, classification and identification,” Data in Brief, vol. 26, p. 104313, 10 2019. [Online]. Available: https://linkinghub.elsevier.com/retrieve/pii/S2352340919306675

- [4] M. F. Al-Sa’d, A. Al-Ali, A. Mohamed, T. Khattab, and A. Erbad, “Rf-based drone detection and identification using deep learning approaches: An initiative towards a large open source drone database,” Future Generation Computer Systems, vol. 100, pp. 86–97, 11 2019.

- [5] C. J. Swinney and J. C. Woods, “Unmanned aerial vehicle flight mode classification using convolutional neural network and transfer learning,” in 2020 16th International Computer Engineering Conference (ICENCO), 2020, pp. 83–87.

- [6] Y. Zhang, “Rf-based drone detection using machine learning,” in 2021 2nd International Conference on Computing and Data Science (CDS), 2021, pp. 425–428.

- [7] C. Ge, S. Yang, W. Sun, Y. Luo, and C. Luo, “For rf signal-based uav states recognition, is pre-processing still important at the era of deep learning?” in 2021 7th International Conference on Computer and Communications (ICCC), 2021, pp. 2292–2296.

- [8] Y. Xue, Y. Chang, Y. Zhang, J. Sun, Z. Ji, H. Li, Y. Peng, and J. Zuo, “Uav signal recognition of heterogeneous integrated knn based on genetic algorithm,” Telecommunication Systems, vol. 85, pp. 591–599, 4 2024. [Online]. Available: https://link.springer.com/10.1007/s11235-023-01099-x

- [9] A. AlKhonaini, T. Sheltami, A. Mahmoud, and M. Imam, “Uav detection using reinforcement learning,” Sensors, vol. 24, no. 6, 2024. [Online]. Available: https://www.mdpi.com/1424-8220/24/6/1870

- [10] M. Ezuma, F. Erden, C. K. Anjinappa, O. Ozdemir, and I. Guvenc, “Detection and classification of uavs using rf fingerprints in the presence of wi-fi and bluetooth interference,” IEEE Open Journal of the Communications Society, vol. 1, pp. 60–76, 2020. [Online]. Available: https://ieeexplore.ieee.org/document/8913640/

- [11] ——, “Drone remote controller rf signal dataset,” 2020. [Online]. Available: https://dx.doi.org/10.21227/ss99-8d56

- [12] E. Ozturk, F. Erden, and I. Guvenc, “Rf-based low-snr classification of uavs using convolutional neural networks,” ITU Journal on Future and Evolving Technologies, vol. 2, pp. 39–52, 7 2021. [Online]. Available: https://www.itu.int/pub/S-JNL-VOL2.ISSUE5-2021-A04

- [13] C. J. Swinney and J. C. Woods, “Dronedetect dataset: A radio frequency dataset of unmanned aerial system (uas) signals for machine learning detection & classification,” 2021. [Online]. Available: https://dx.doi.org/10.21227/5jjj-1m32

- [14] ——, “Rf detection and classification of unmanned aerial vehicles in environments with wireless interference,” in 2021 International Conference on Unmanned Aircraft Systems (ICUAS), 2021, pp. 1494–1498.

- [15] S. Kunze and B. Saha, “Drone classification with a convolutional neural network applied to raw iq data,” in 2022 3rd URSI Atlantic and Asia Pacific Radio Science Meeting (AT-AP-RASC), May 2022, pp. 1–4. [Online]. Available: https://ieeexplore.ieee.org/document/9814170/

- [16] O. Medaiyese, M. Ezuma, A. Lauf, and A. Adeniran, “Cardinal rf (cardrf): An outdoor uav/uas/drone rf signals with bluetooth and wifi signals dataset,” 2022. [Online]. Available: https://dx.doi.org/10.21227/1xp7-ge95

- [17] O. O. Medaiyese, M. Ezuma, A. P. Lauf, and A. A. Adeniran, “Hierarchical learning framework for uav detection and identification,” IEEE Journal of Radio Frequency Identification, vol. 6, pp. 176–188, 2022.

- [18] O. O. Medaiyese, M. Ezuma, A. P. Lauf, and I. Guvenc, “Wavelet transform analytics for rf-based uav detection and identification system using machine learning,” Pervasive and Mobile Computing, vol. 82, p. 101569, 6 2022. [Online]. Available: https://linkinghub.elsevier.com/retrieve/pii/S1574119222000219

- [19] F. N. Iandola, M. W. Moskewicz, K. Ashraf, S. Han, W. J. Dally, and K. Keutzer, “Squeezenet: Alexnet-level accuracy with 50x fewer parameters and <1mb model size,” CoRR, vol. abs/1602.07360, 2016. [Online]. Available: http://arxiv.org/abs/1602.07360

- [20] S. Glüge, M. Nyfeler, N. Ramagnano, C. Horn, and C. Schüpbach, “Robust drone detection and classification from radio frequency signals using convolutional neural networks,” in Proceedings of the 15th International Joint Conference on Computational Intelligence - NCTA, INSTICC. SciTePress, 2023, pp. 496–504.

- [21] R. Zhao, T. Li, Y. Li, Y. Ruan, and R. Zhang, “Anchor-free multi-uav detection and classification using spectrogram,” IEEE Internet of Things Journal, vol. 11, pp. 5259–5272, 2 2024. [Online]. Available: https://ieeexplore.ieee.org/document/10221859/

- [22] C. Sun, A. Shrivastava, S. Singh, and A. Gupta, “Revisiting unreasonable effectiveness of data in deep learning era,” in 2017 IEEE International Conference on Computer Vision (ICCV), 2017, pp. 843–852.

- [23] P. Virtanen, R. Gommers, T. E. Oliphant, M. Haberland, T. Reddy, D. Cournapeau, E. Burovski, P. Peterson, W. Weckesser, J. Bright, S. J. van der Walt, M. Brett, J. Wilson, K. J. Millman, N. Mayorov, A. R. J. Nelson, E. Jones, R. Kern, E. Larson, C. J. Carey, İ. Polat, Y. Feng, E. W. Moore, J. VanderPlas, D. Laxalde, J. Perktold, R. Cimrman, I. Henriksen, E. A. Quintero, C. R. Harris, A. M. Archibald, A. H. Ribeiro, F. Pedregosa, P. van Mulbregt, and SciPy 1.0 Contributors, “SciPy 1.0: Fundamental Algorithms for Scientific Computing in Python,” Nature Methods, vol. 17, pp. 261–272, 2020.

- [24] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, Y. Bengio and Y. LeCun, Eds., 2015. [Online]. Available: http://arxiv.org/abs/1409.1556

- [25] S. Ioffe and C. Szegedy, “Batch normalization: accelerating deep network training by reducing internal covariate shift,” in Proceedings of the 32nd International Conference on International Conference on Machine Learning - Volume 37, ser. ICML’15. JMLR.org, 2015, p. 448–456.

- [26] A. Paszke, S. Gross, F. Massa, A. Lerer, J. Bradbury, G. Chanan, T. Killeen, Z. Lin, N. Gimelshein, L. Antiga, A. Desmaison, A. Kopf, E. Yang, Z. DeVito, M. Raison, A. Tejani, S. Chilamkurthy, B. Steiner, L. Fang, J. Bai, and S. Chintala, “Pytorch: An imperative style, high-performance deep learning library,” in Advances in Neural Information Processing Systems 32, H. Wallach, H. Larochelle, A. Beygelzimer, F. d'Alché-Buc, E. Fox, and R. Garnett, Eds. Curran Associates, Inc., 2019, pp. 8024–8035.

- [27] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” in 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, May 7-9, 2015, Conference Track Proceedings, Y. Bengio and Y. LeCun, Eds., 2015. [Online]. Available: http://arxiv.org/abs/1412.6980

- [28] L. van der Maaten and G. Hinton, “Visualizing data using t-sne,” Journal of Machine Learning Research, vol. 9, no. 86, pp. 2579–2605, 2008. [Online]. Available: http://jmlr.org/papers/v9/vandermaaten08a.html

- [29] L. McInnes, J. Healy, N. Saul, and L. Großberger, “Umap: Uniform manifold approximation and projection,” Journal of Open Source Software, vol. 3, no. 29, p. 861, 2018. [Online]. Available: https://doi.org/10.21105/joss.00861

- [30] K. N. R. Surya Vara Prasad and V. K. Bhargava, “A classification algorithm for blind uav detection in wideband rf systems,” in 2020 IEEE 92nd Vehicular Technology Conference (VTC2020-Fall), 2020, pp. 1–7.

- [31] S. Basak, S. Rajendran, S. Pollin, and B. Scheers, “Combined rf-based drone detection and classification,” IEEE Transactions on Cognitive Communications and Networking, vol. 8, no. 1, pp. 111–120, 2022.

- [32] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, and N. Houlsby, “An image is worth 16x16 words: Transformers for image recognition at scale,” ICLR, 2021.

- [33] S. Lu and R. Li, DAC–Deep Autoencoder-Based Clustering: A General Deep Learning Framework of Representation Learning. Springer Science and Business Media Deutschland GmbH, 2 2022, vol. 294, pp. 205–216. [Online]. Available: https://link.springer.com/10.1007/978-3-030-82193-7_13

- [34] H. Zhou, J. Bai, Y. Wang, J. Ren, X. Yang, and L. Jiao, “Deep radio signal clustering with interpretability analysis based on saliency map,” Digital Communications and Networks, 1 2023. [Online]. Available: https://linkinghub.elsevier.com/retrieve/pii/S2352864823000238

- [35] E. Pintelas, I. E. Livieris, and P. E. Pintelas, “A convolutional autoencoder topology for classification in high-dimensional noisy image datasets,” Sensors, vol. 21, p. 7731, 11 2021. [Online]. Available: https://www.mdpi.com/1424-8220/21/22/7731
